Qlik Compose Setup and User Guide

Qlik Compose™

May 2022

Last updated: December 21, 2023

Copyright © 1993-2021 QlikTech International AB. All rights reserved.

HELP.QLIK.COM

© 2023 QlikTech International AB. All rights reserved. All company and/or product names may be trade names,

trademarks and/or registered trademarks of the respective owners with which they are associated.

Setup and User Guide - Qlik Compose, May 2022 3

1 What's new? 9

1.1 What's new in Data Warehouse projects? 9

Keeping changes in the Change Tables 9

Referenced dimensions 9

Data mart enhancements 10

Microsoft Azure Synapse Analytics Enhancements 10

Uniform source consolidation 11

Environment variables 11

Support for data profiling and data quality rules when using Google Cloud BigQuery 12

Attributes case sensitivity support 12

Associating a Replicate task that writes to a Hadoop target 12

Performance improvements 12

Support for Redshift Spectrum external tables 13

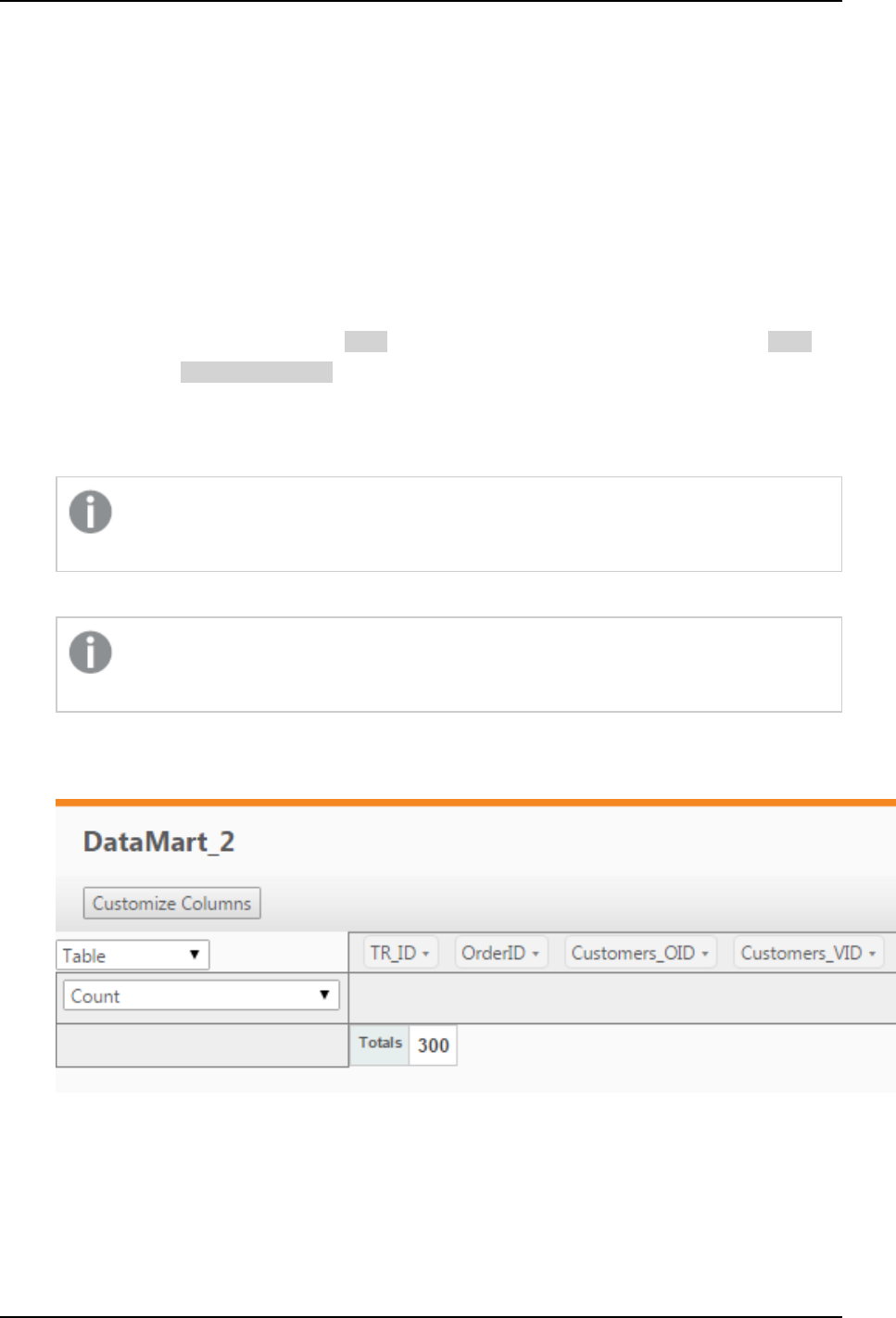

Data mart UX improvement 13

Support for updating custom ETLs using the CLI 13

Support for defining a custom data mart schema in Microsoft Azure Synapse Analytics 13

1.2 What's new in Data Lake projects? 13

Support for excluding deleted records from ODS views 13

Improved Historical Data Store resolution 14

Associating a Replicate task that writes to a Hortonworks Data Platform target 14

Databricks projects 14

1.3 New features common to both Data Warehouse projects and Data Lake projects 15

New Project title setting 15

Support for Microsoft Edge Browser 15

Windows Server 2022 (64-bit) support 15

Security Hardening 15

Managing user and group roles using the Compose CLI 16

2 Introduction 17

2.1 Data warehouse projects 17

Data warehouse projects architecture 17

Key features 18

2.2 Data lake projects 18

Easy data structuring and transformation 18

Continuous updates 18

Historical data store 18

Data lake project architecture 19

3 Qlik Compose installation and setup 20

3.1 Preparing your system for Compose 20

Hardware prerequisites 20

Software and network prerequisites 21

Required permissions for the Compose service 21

Reserved system names 21

3.2 Installing or upgrading Compose 21

Installation Instructions 21

Upgrade Instructions 22

3.3 Installing and upgrading Compose silently 22

Silently installing Compose 23

Contents

Silently upgrading Compose 24

Silently uninstalling Compose 24

3.4 Determining the required number of database connections 24

3.5 Accessing Qlik Compose 25

4 Security considerations 26

4.1 Setting up HTTPS for the Compose console 26

Checking if an SSL certificate is installed 26

Using the self-signed certificate 27

Replacing the self-signed certificate on Windows 29

4.2 Setting the hostname and changing the HTTPS port 30

To set the hostname: 30

To change the HTTPS port: 30

4.3 Setting up HSTS on Compose 31

Enabling HSTS 31

Disabling HSTS 31

4.4 Setting Single Sign-On Authentication with Kerberos 32

4.5 Changing the master user password 32

5 Data Warehouse projects 35

5.1 Defining a Qlik Replicate task 36

Prerequisites 36

Limitations and considerations 36

Setting up the task 37

5.2 Adding and managing data warehouse projects 37

Adding data warehouse projects 38

Managing and monitoring projects 39

Project settings 40

Resetting projects 46

Project deployment 47

Migrating objects as CSV files 49

Exporting and importing projects using the CLI 78

Working with environment variables 88

Generating projects using the CLI 98

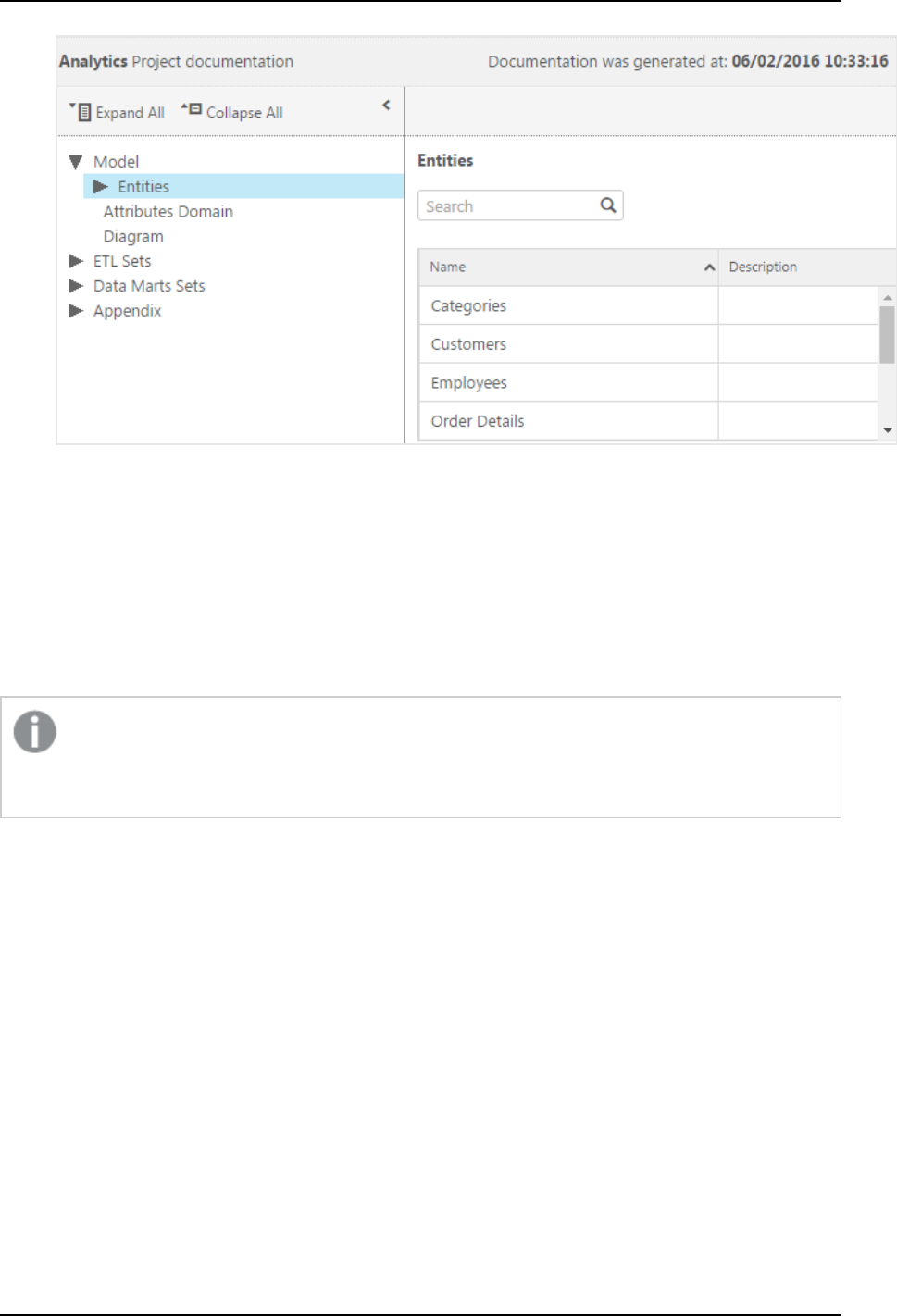

Exporting project documentation 99

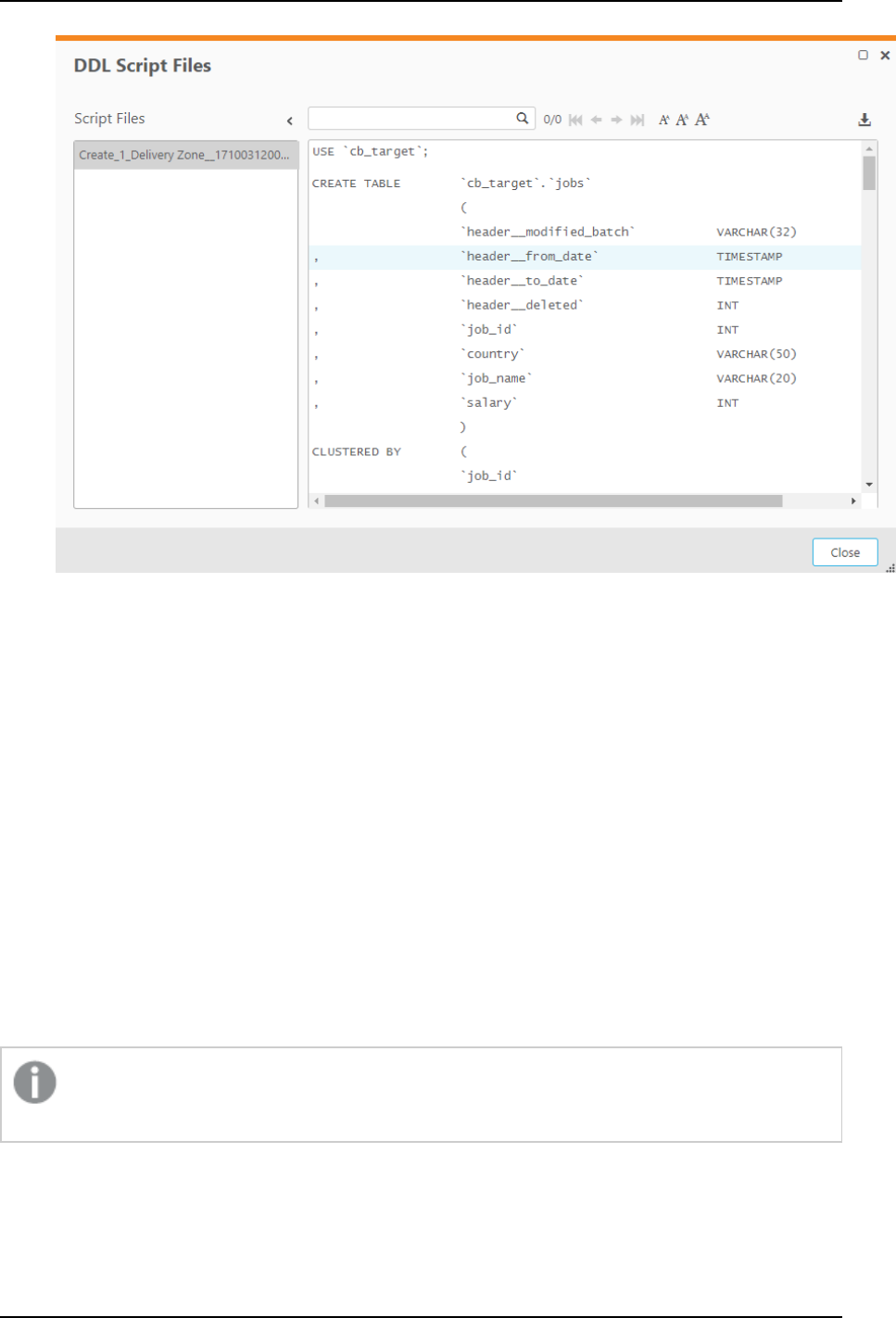

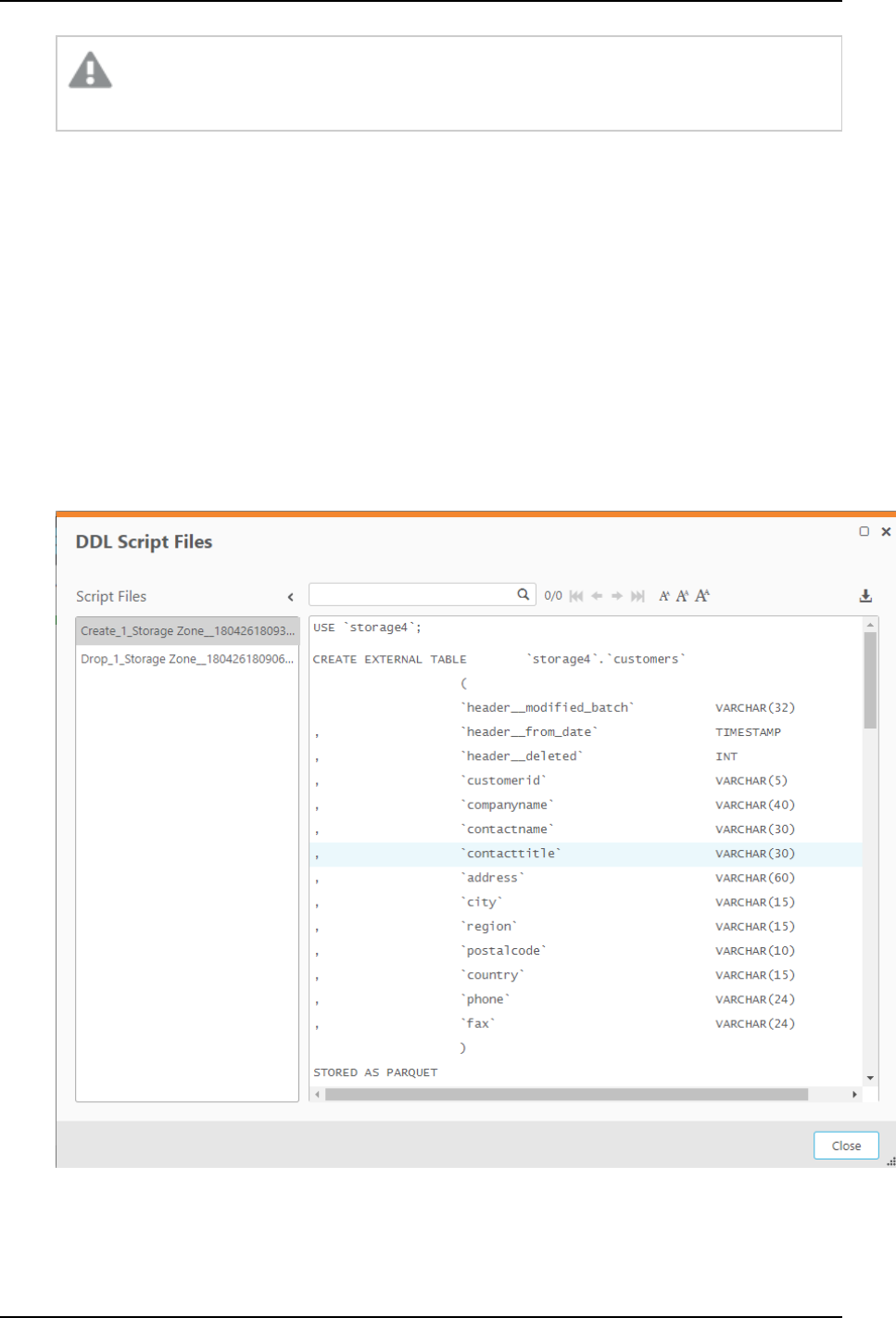

Viewing and downloading DDL scripts 100

Project versioning 101

Creating a diagnostics package 103

5.3 Getting started with Data Warehouse projects 104

High-level flow 104

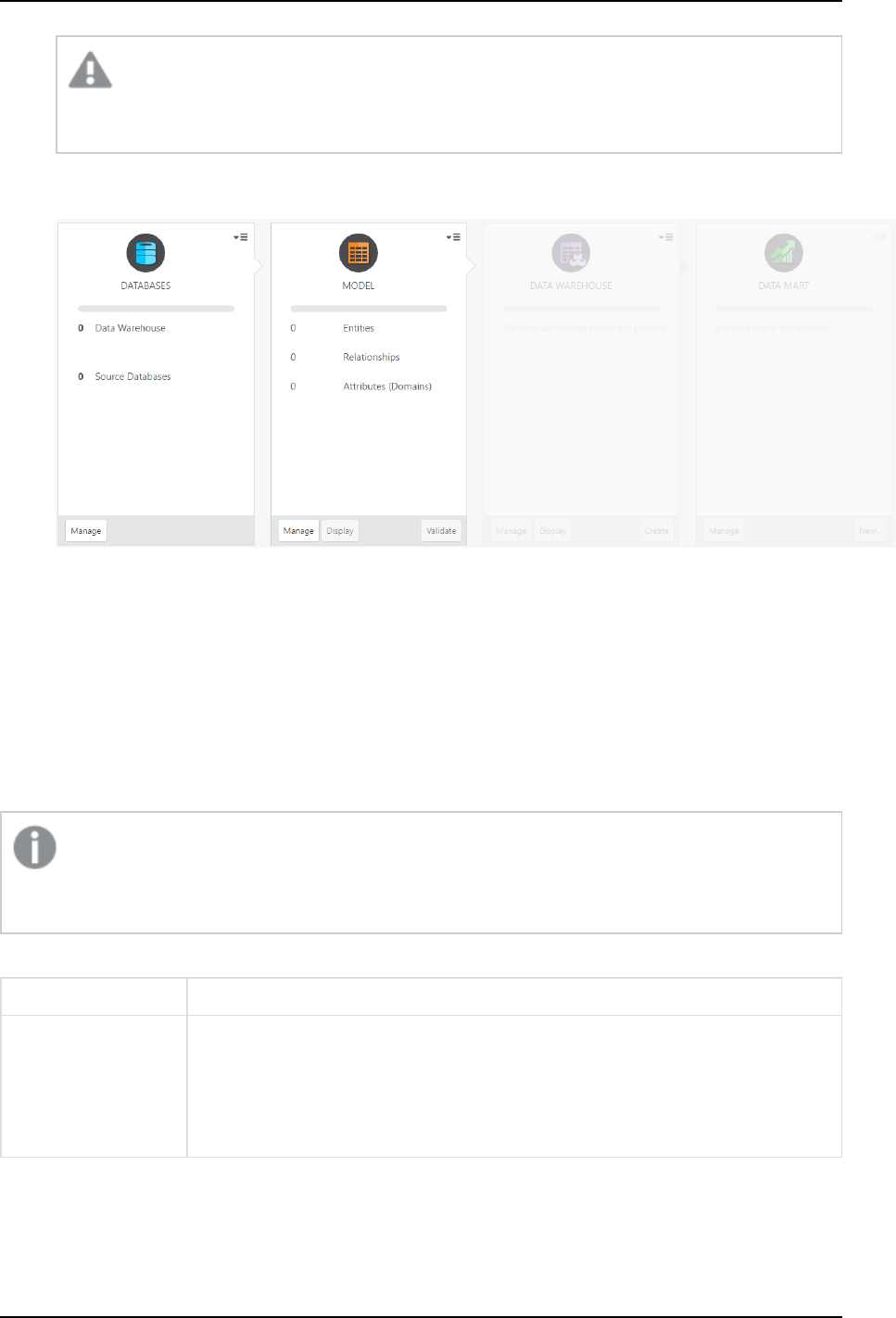

Console elements 104

Data warehouse project tutorial 107

5.4 Setting up a data warehouse connection 111

Using Microsoft SQL Server as a data warehouse 112

Using Oracle as a data warehouse 115

Using Snowflake as a data warehouse 118

Using Amazon Redshift as a data warehouse 121

Using Microsoft Azure Synapse Analytics as a data warehouse 124

Using Google Cloud BigQuery as a Data Warehouse 128

Managing databases 131

5.5 Setting up Landing Zone and Data Source connections 131

Reserved column names and suffixes 131

Permissions 132

Data type mappings 133

Defining landing zones 140

Defining Replicate data source connections 147

Managing databases 154

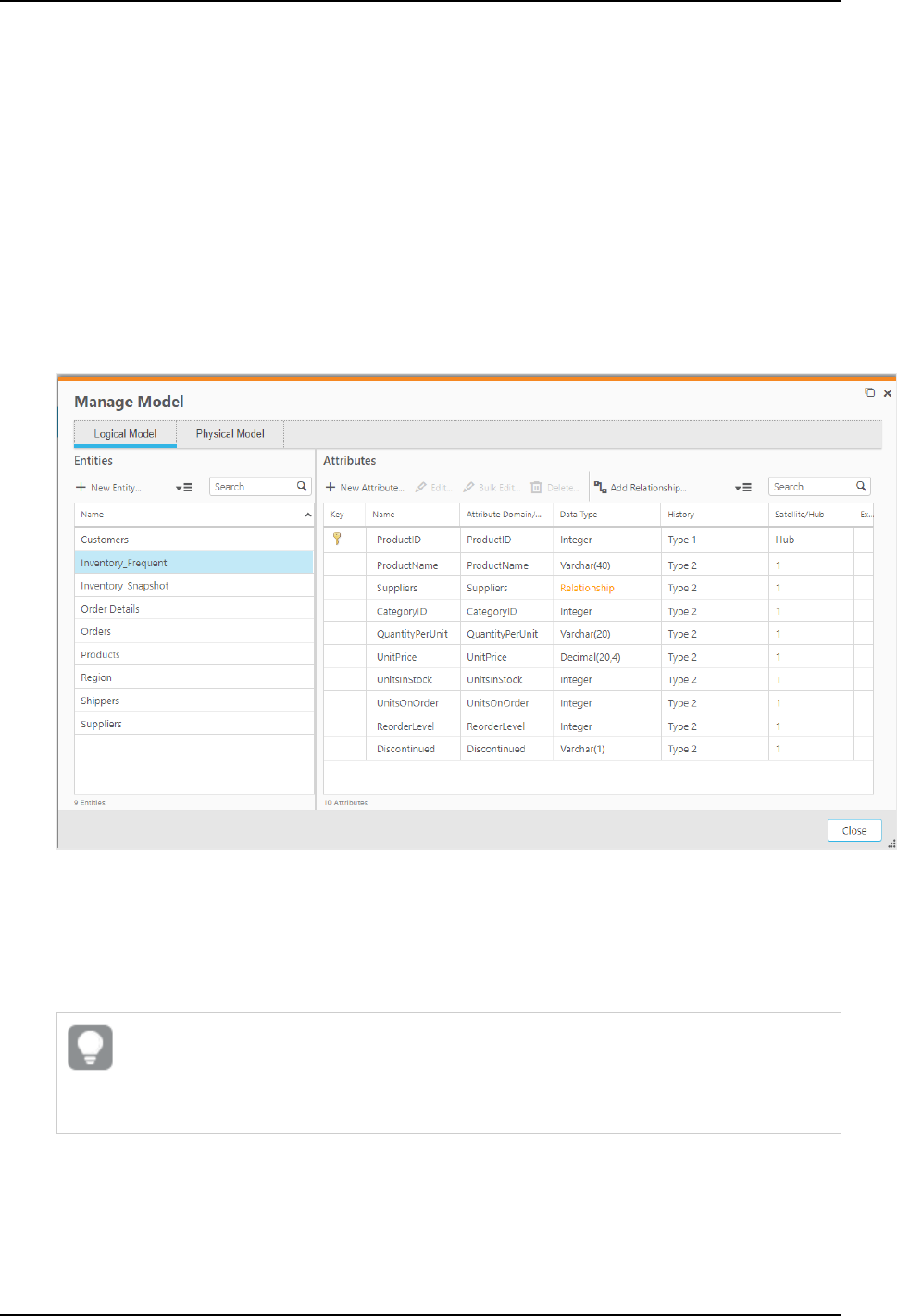

5.6 Creating and managing the model 154

Reserved column names 155

Generating the model 155

Model limitations 162

Validating the model 163

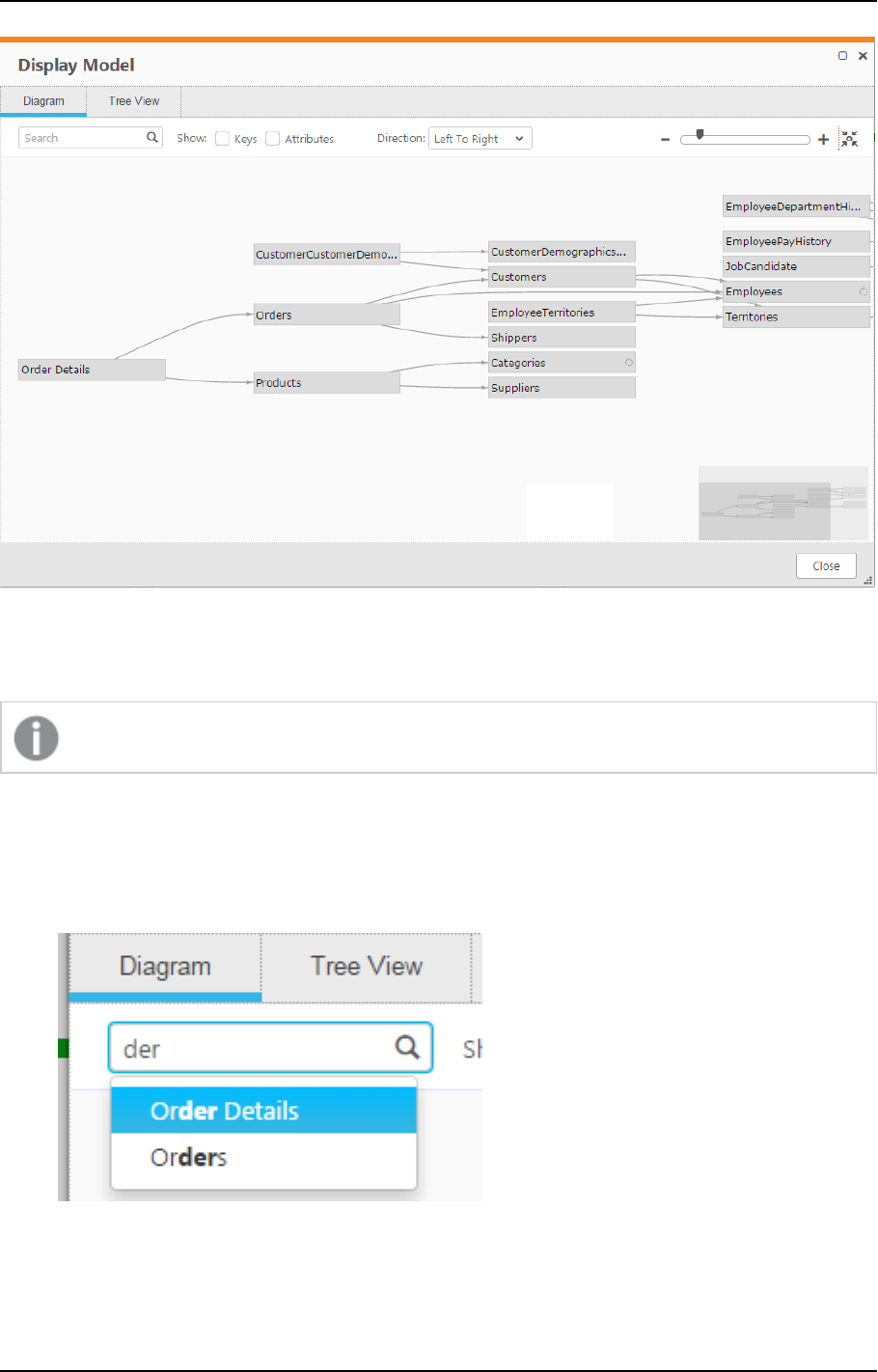

Displaying the model 163

Managing the model 166

Creating expressions 181

Opening the expression builder 182

Defining reusable transformations 188

5.7 Creating and managing the data warehouse 190

Data warehouse tasks 191

Managing tasks 201

Viewing and exporting task statements 216

Modifying task settings 217

Validating the data warehouse 222

Clearing the data warehouse metadata cache 224

5.8 Creating and managing data marts 225

Adding data marts and star schemas 225

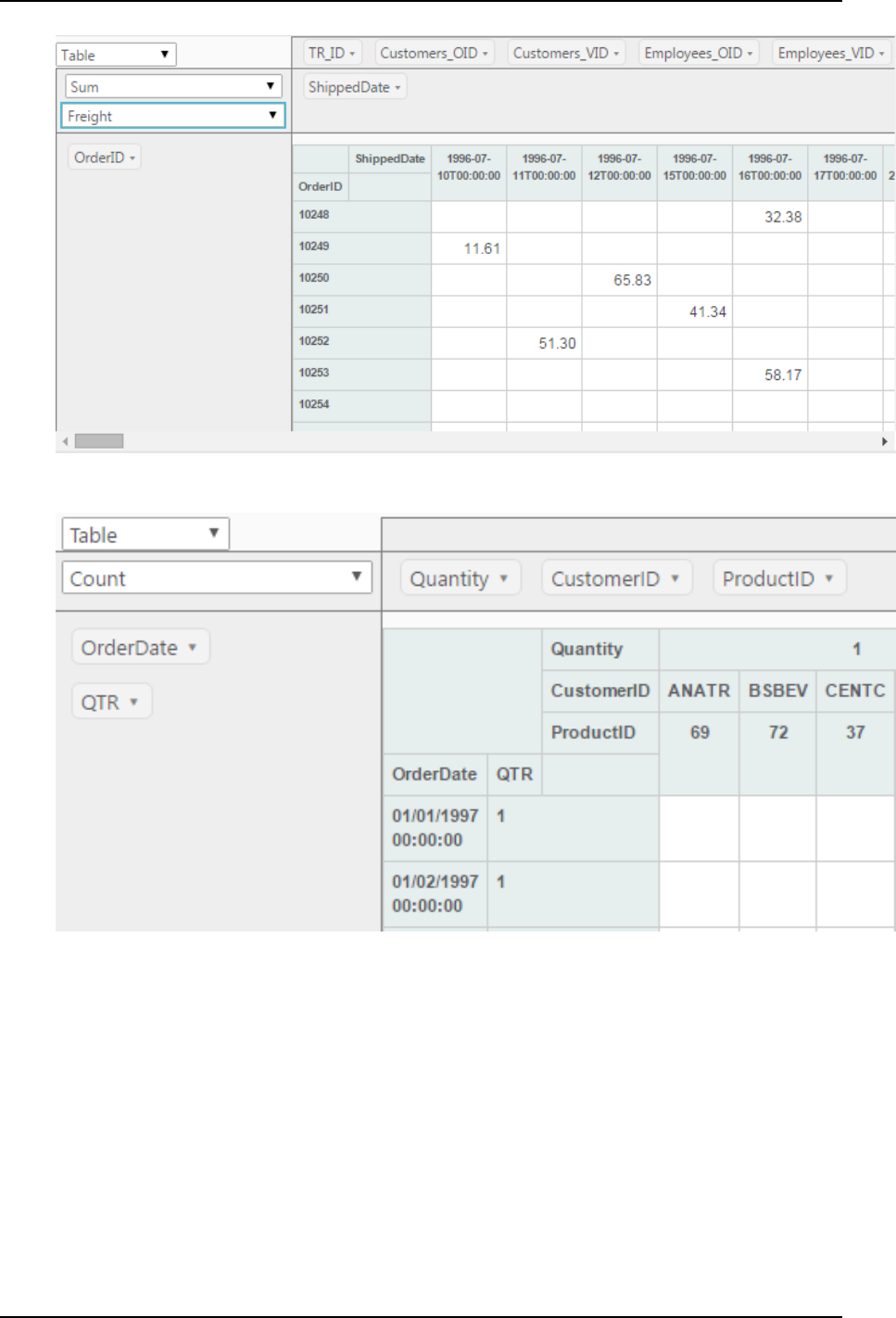

Displaying data in a pivot table 231

Managing data marts 234

Example of a Valid Table Creation Modifier 241

Example of a Valid Table Creation Modifier 246

Creating and managing custom ETLs 250

Viewing and exporting task statements 252

Validating and adjusting the data mart 252

Reloading the data mart 254

Modifying data mart settings 255

The "Obsolete" indicator 257

5.9 Creating and managing command tasks 258

Defining command tasks 258

Managing command tasks 259

Controlling and monitoring command tasks 259

5.10 Controlling and monitoring tasks and workflows 260

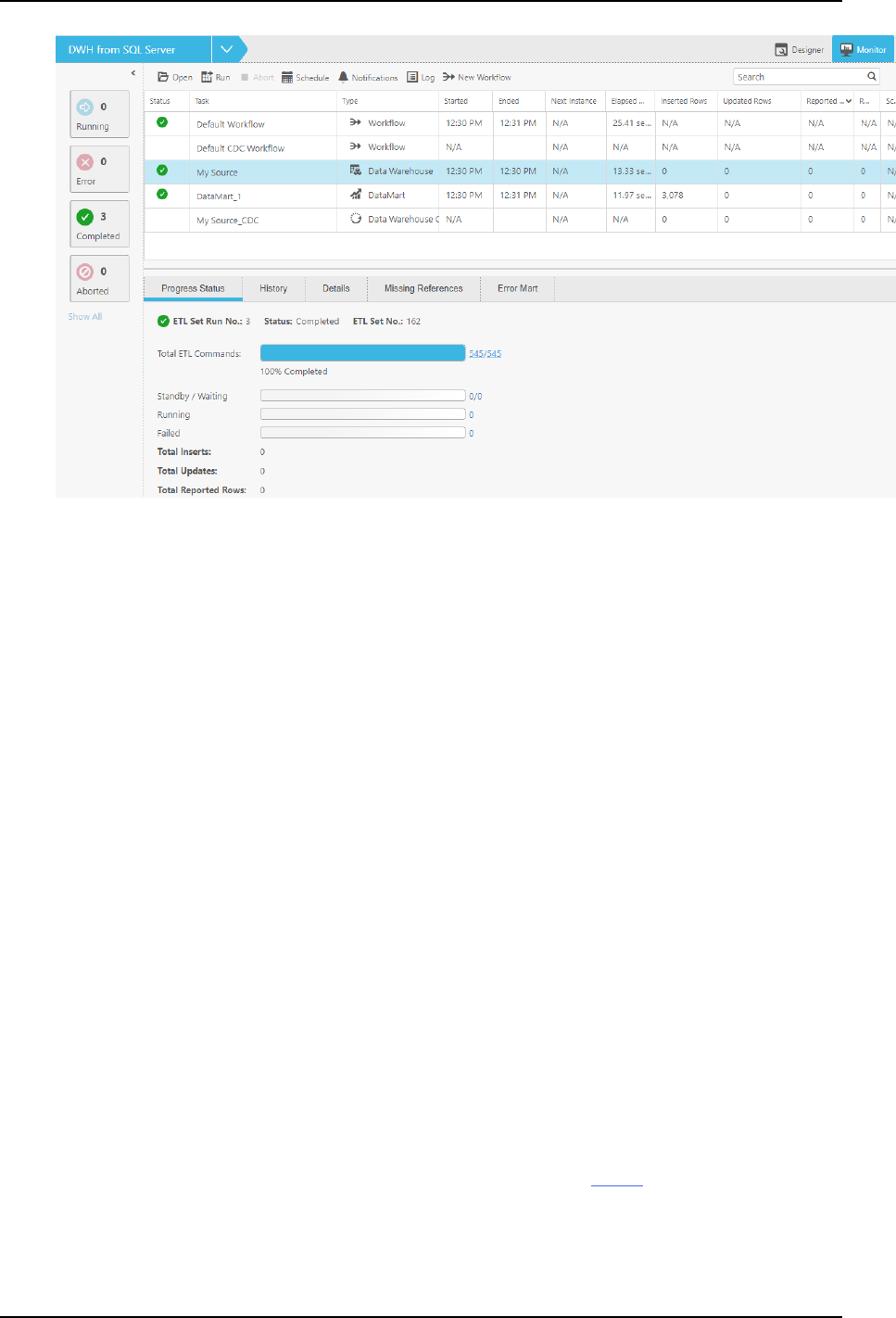

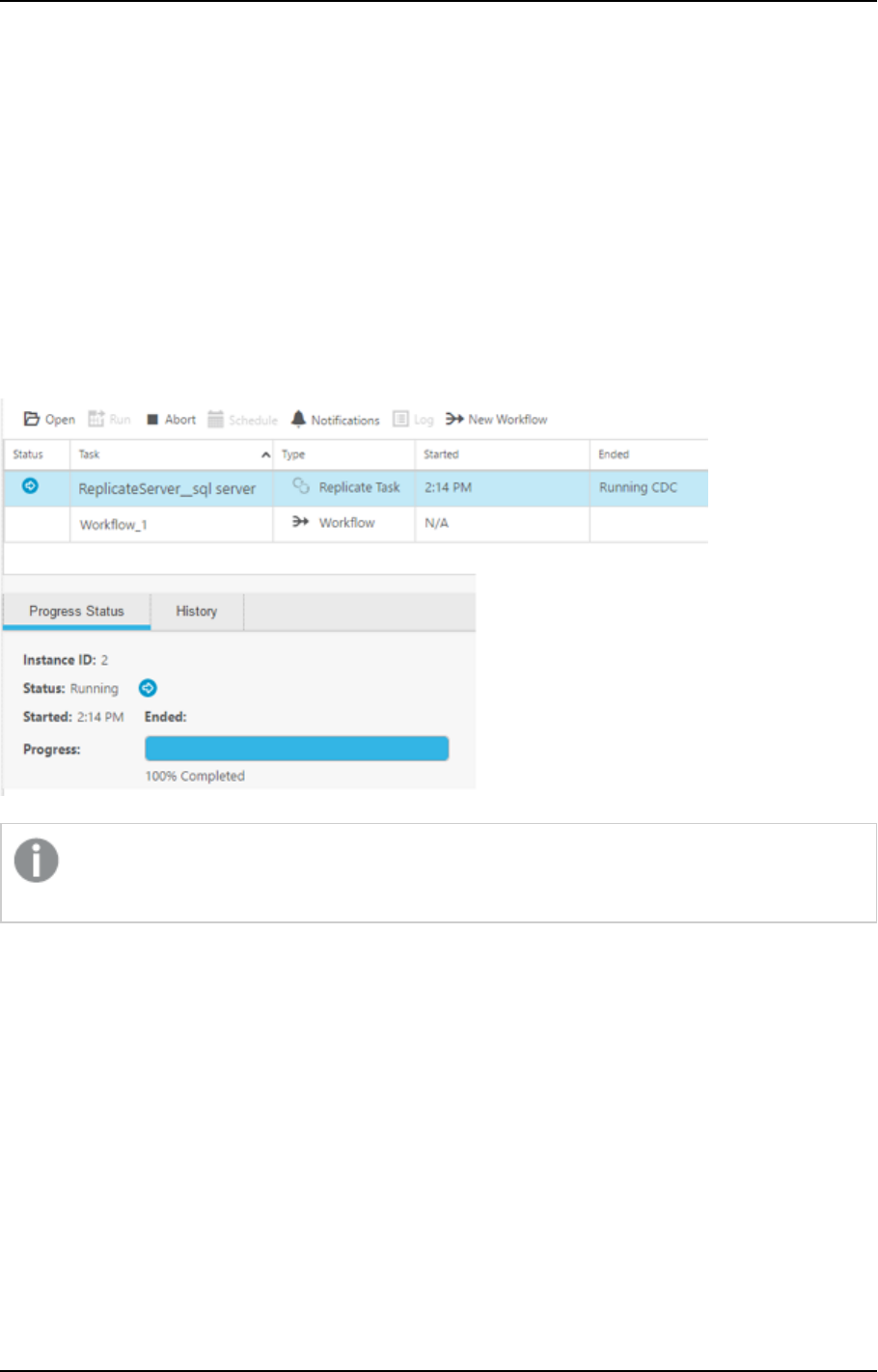

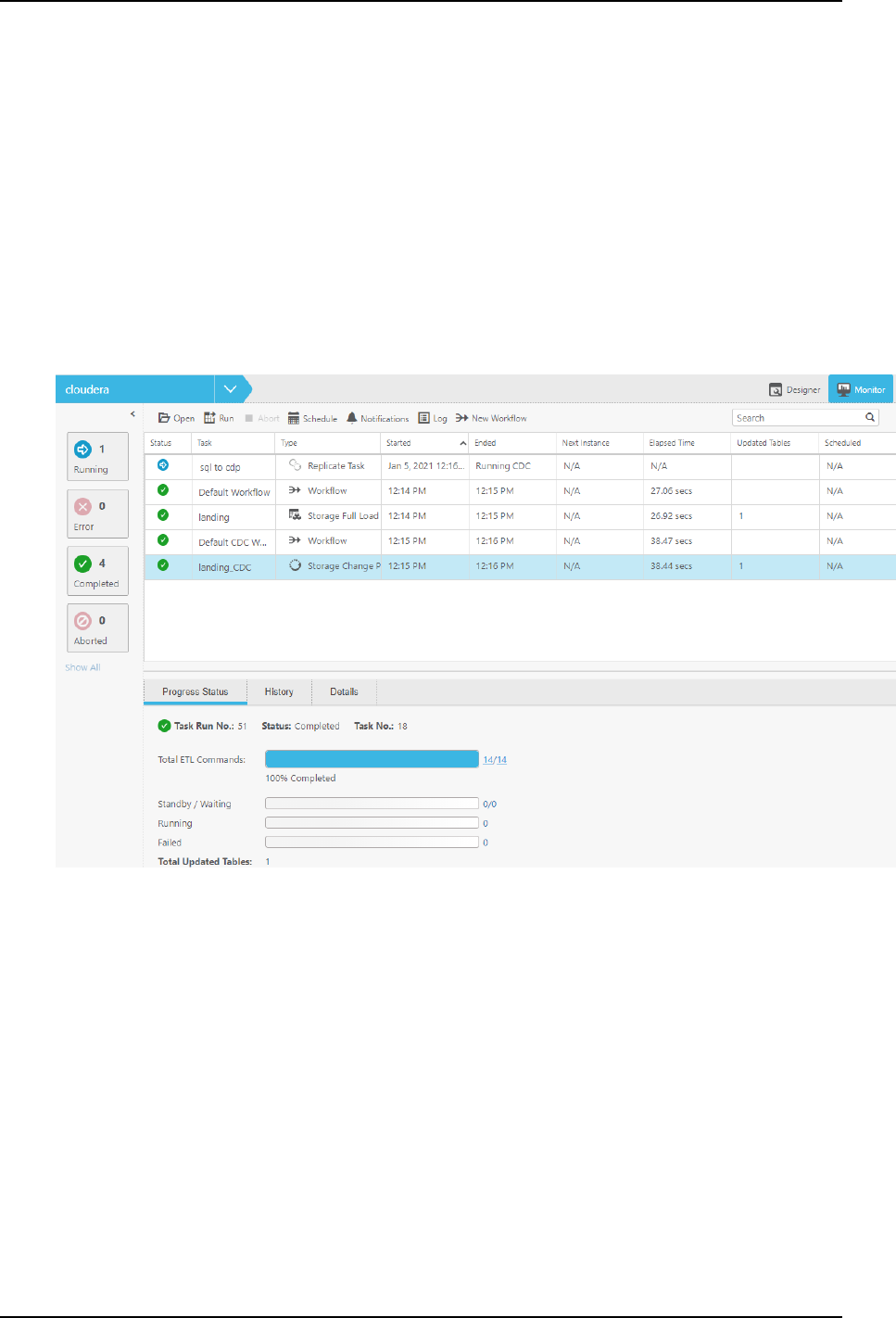

Viewing information in the monitor 260

Viewing missing references 262

Controlling tasks 264

Notifications 266

Workflows 268

Monitoring and controlling Qlik Replicate tasks 272

6 Data Lake projects 275

6.1 Defining a Qlik Replicate task 275

Prerequisites 275

Limitations and Considerations 276

Setting up the task 276

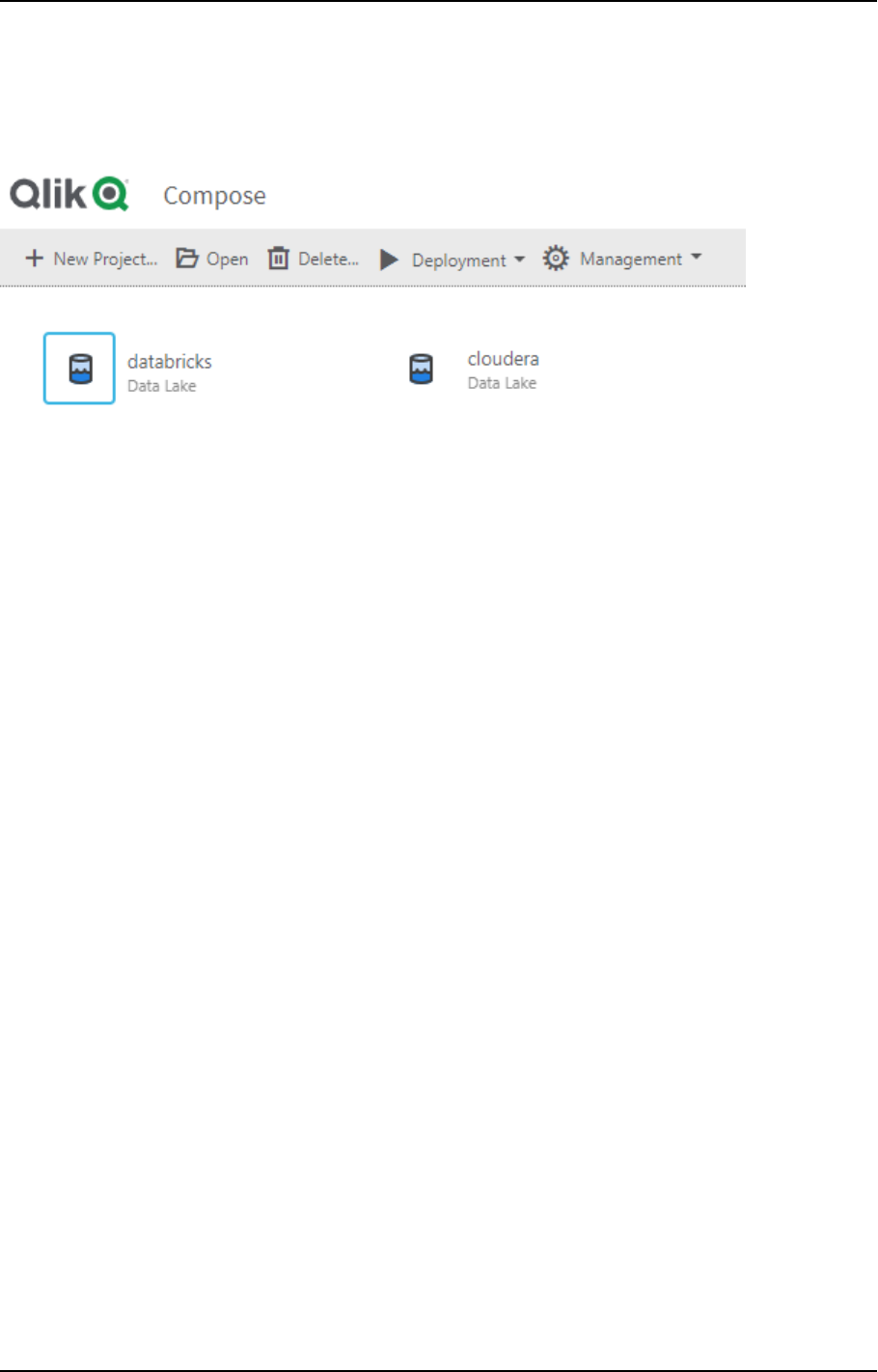

6.2 Adding and managing Data Lake projects 277

Prerequisites 277

Data Lake project guidelines 279

Adding data lake projects 280

Managing and monitoring projects 282

Project settings 283

Resetting projects 288

Project deployment 289

Exporting and importing projects using the CLI 290

Generating projects using the CLI 298

Viewing and downloading DDL scripts 299

Project versioning 300

Creating a diagnostics package 301

6.3 Getting started with Data Lake projects 302

High-level flow 302

Console elements 302

6.4 Setting up landing and storage connections 305

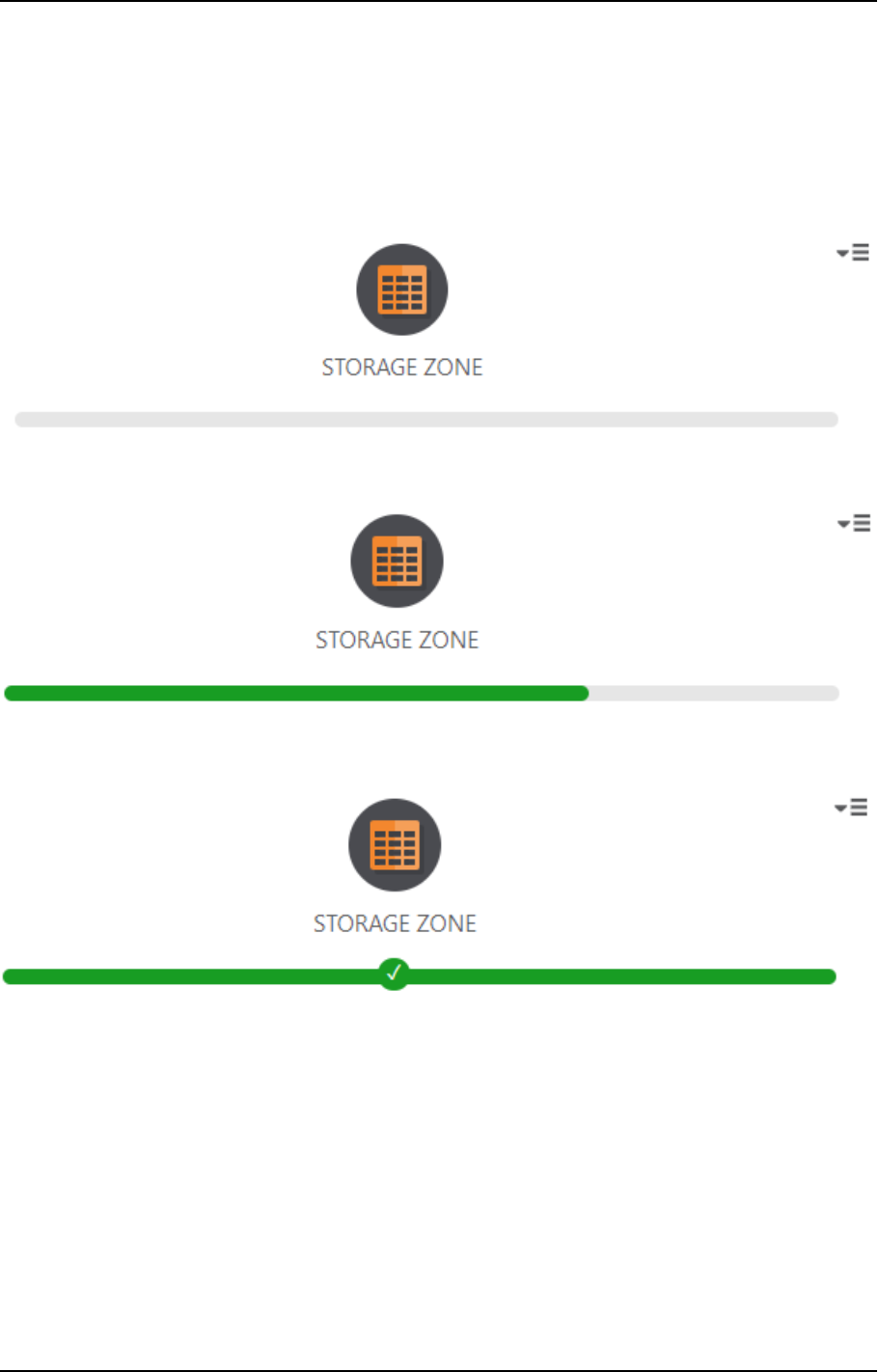

Defining a Storage Zone 305

Defining Landing Zones 314

Managing Landing and Storage connections 316

6.5 Selecting source tables and managing metadata 316

Reserved column names 316

Selecting and adding the source tables 317

Validating the metadata and storage 320

Managing the metadata 322

Schema evolution 327

Creating transformations 329

Reusable transformations 335

6.6 Creating and Managing Storage Zone Tasks 337

Defining and running data storage tasks 338

Managing task definitions 341

Clearing the metadata cache 350

Viewing and exporting task statements 351

Modifying task settings 352

6.7 Creating and managing command tasks 352

Defining Command tasks 353

Managing Command tasks 354

Controlling and monitoring Command tasks 354

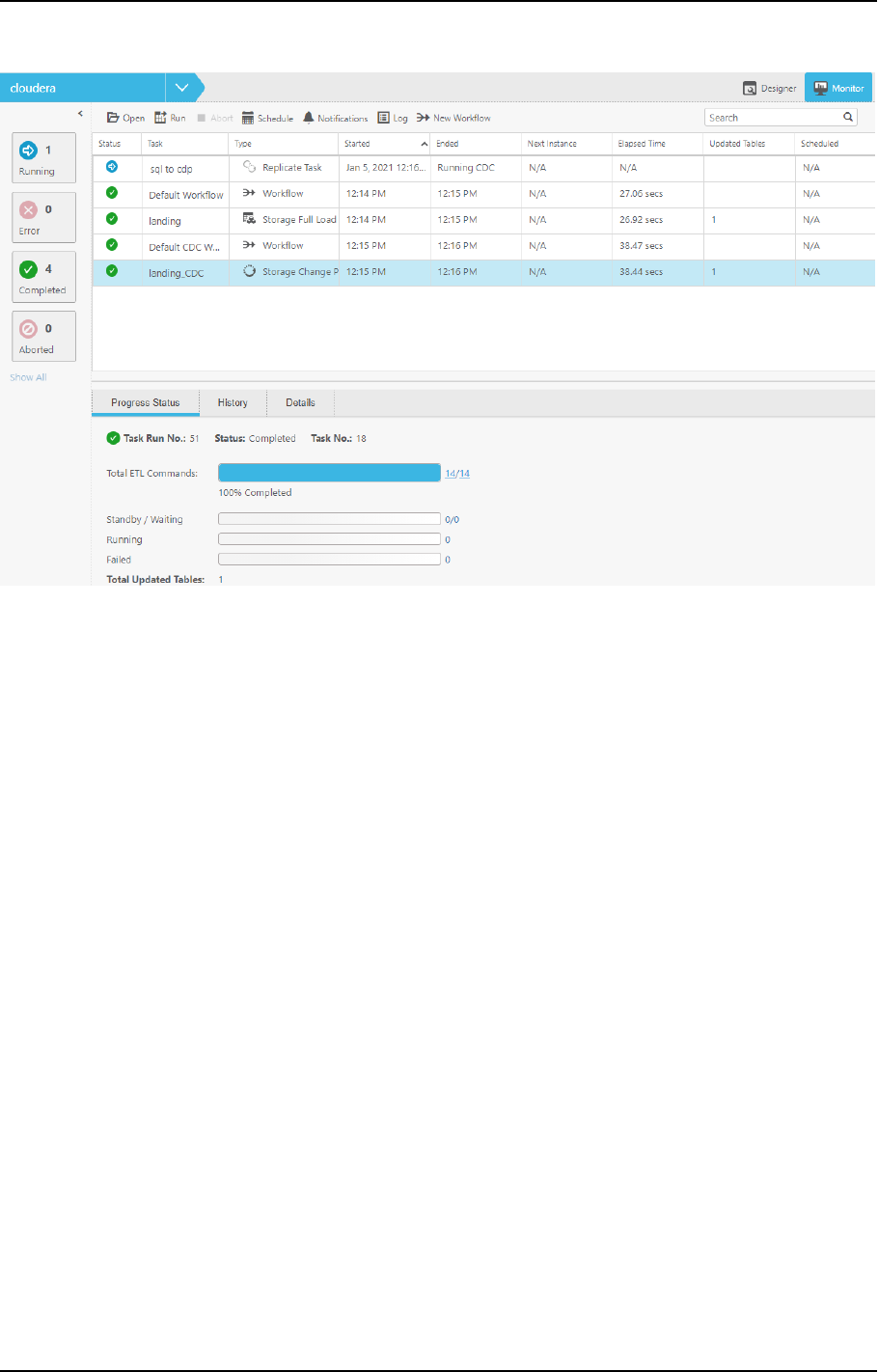

6.8 Controlling and monitoring tasks and workflows 354

Viewing information in the monitor 355

Running and controlling tasks 356

Notifications 358

Workflows 360

Monitoring and controlling Replicate tasks 364

7 Managing Compose 366

7.1 License settings 366

License enforcement 366

Registering a license 366

7.2 Viewing a license 367

7.3 Logging settings 367

Setting the logging level 367

Setting automatic roll over and cleanup 368

Viewing and downloading Compose log files 369

7.4 Mail server settings 369

7.5 Running tasks on a remote Compose server 370

7.6 Replicate Server settings 370

7.7 User permissions 371

Default user permissions according to role 372

Granular access control 373

Managing user and group roles using the Compose CLI 375

Managing user permissions 377

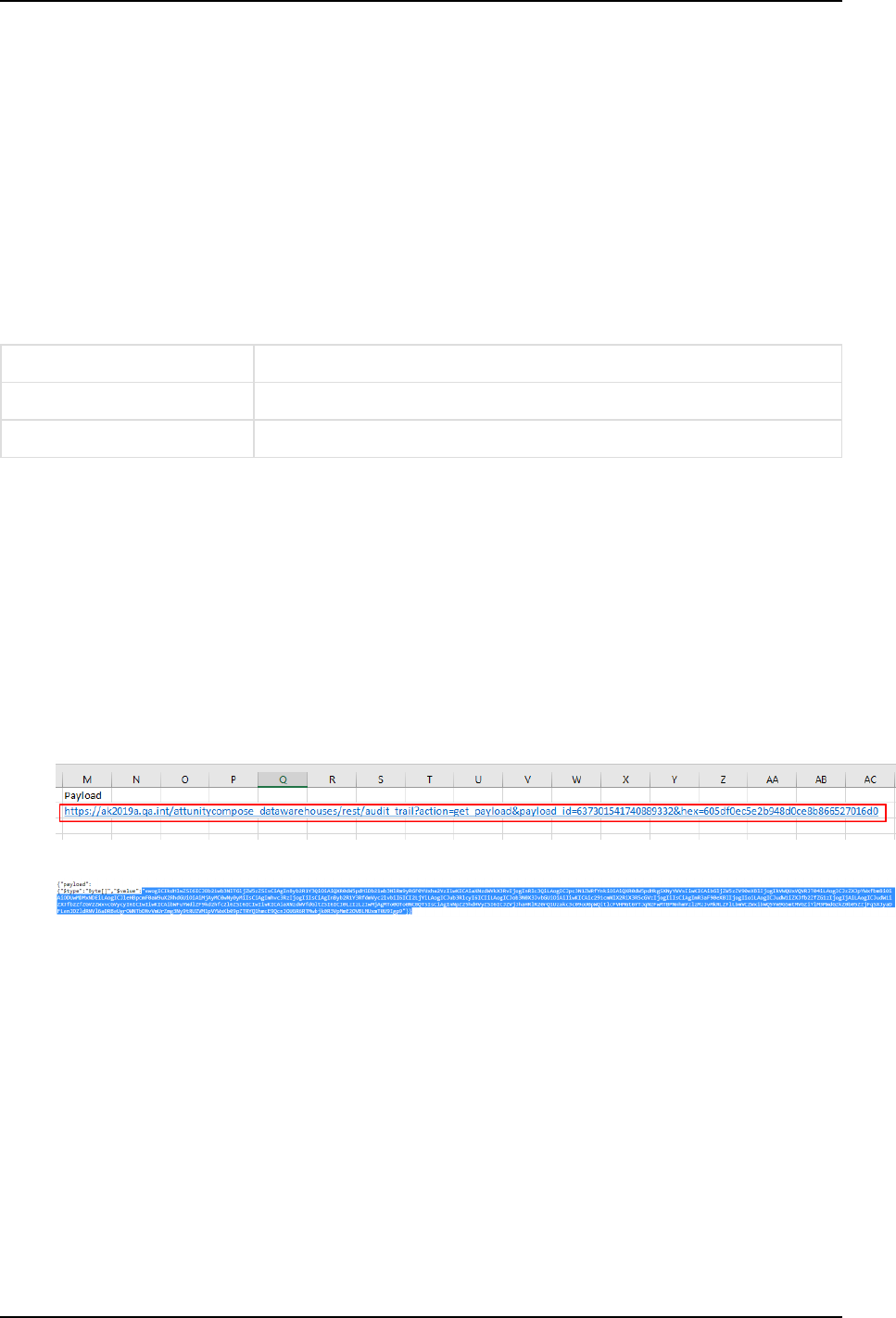

7.8 Audit trails 380

Audit trail information 380

Exporting Audit Trail files 381

Configuring Audit Trail size and retention 382

Decoding an encoded payload 382

8 Setting up Compose on a Windows HA cluster 383

8.1 Step 1: Installing Compose in the cluster 383

Preparation 383

Primary node setup 383

Secondary node setup 384

8.2 Step 2: Adding the Compose service 385

8.3 Step 3: Defining the service dependencies 385

8.4 Step 4: Defining the URL for the cluster 386

8.5 Upgrading Compose on the cluster 386

A Impact of DST change on Qlik Compose 388

B Support matrix 389

B.1 Supported Windows platforms 389

B.2 Supported browsers 389

B.3 Supported Qlik Replicate and Enterprise Manager versions 389

B.4 Supported Databases for Data Warehouse Projects 390

Supported data sources 390

Supported data warehouses 390

B.5 Supported hive distributions for Data Lake projects 392

C Cron format and examples 393

C.1 Cron format 393

C.2 Special characters 393

1 What's new?

The following section describes the enhancements and new features introduced in Qlik Compose May 2022.

The "What's new?" section is cumulative, meaning that it also describes features that were already released

as part of Compose August 2021 service/patch releases. This is because customers upgrading from

initial release versions might not be aware of features that were released in interim service releases.

1.1 What's new in Data Warehouse projects?

The following section describes the enhancements and new features introduced in Qlik Compose Data

Warehouse projects.

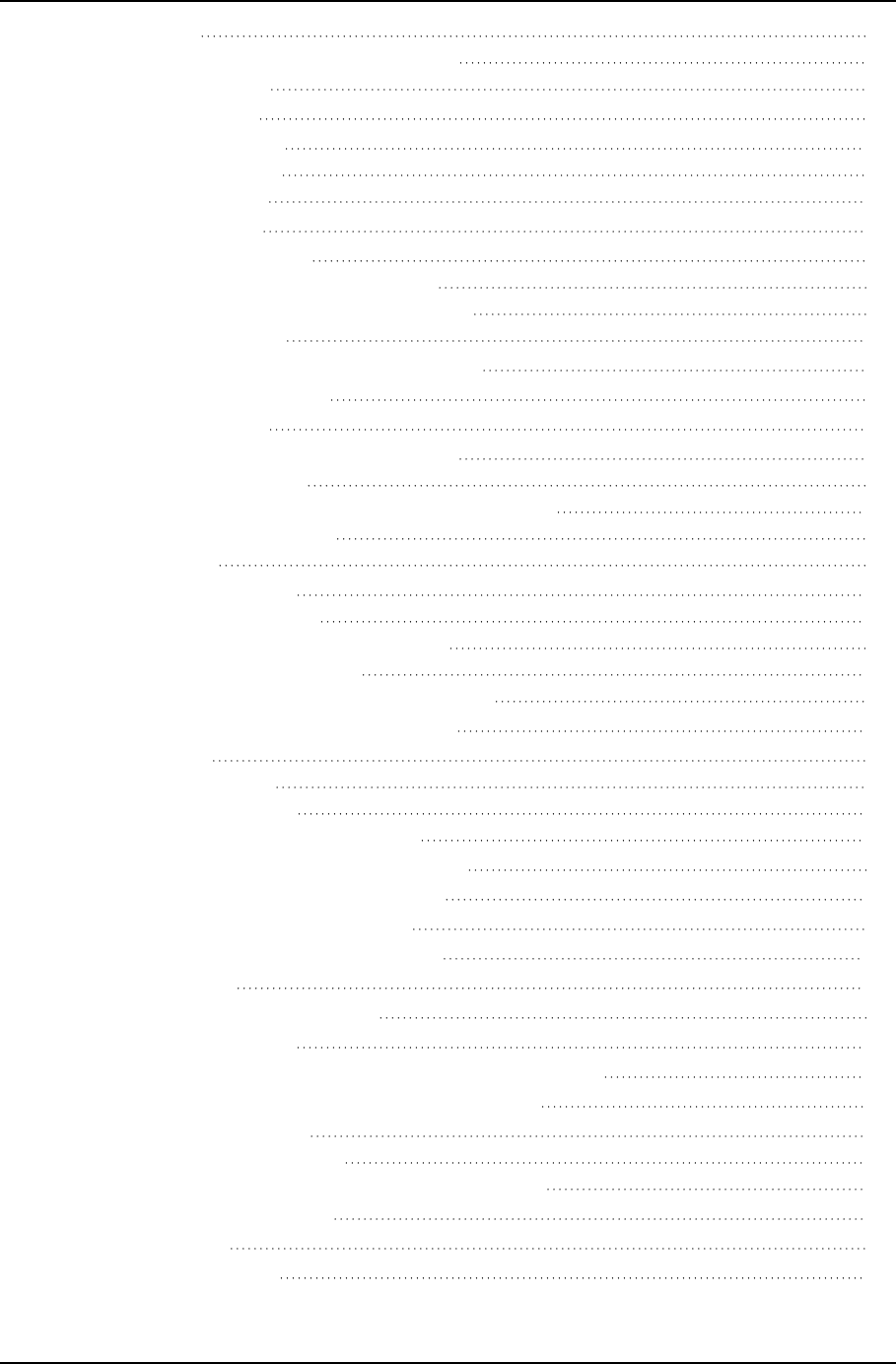

Keeping changes in the Change Tables

This version introduces a new Keep in Change Tables option in the landing zone connection settings:

When you select the Keep in Change Tables option, the changes are kept in the Change Tables after they are

applied (instead of being deleted or archived). This is useful as it allows you to:

• Use the changes in multiple Compose projects that share the same landing
• Leverage Change Table data across multiple mappings and/or tasks in the same project
• Preserve the Replicate data for auditing purposes or reprocessing in case of error
• Reduce cloud data warehouse costs by eliminating the need to delete changes after every ETL execution

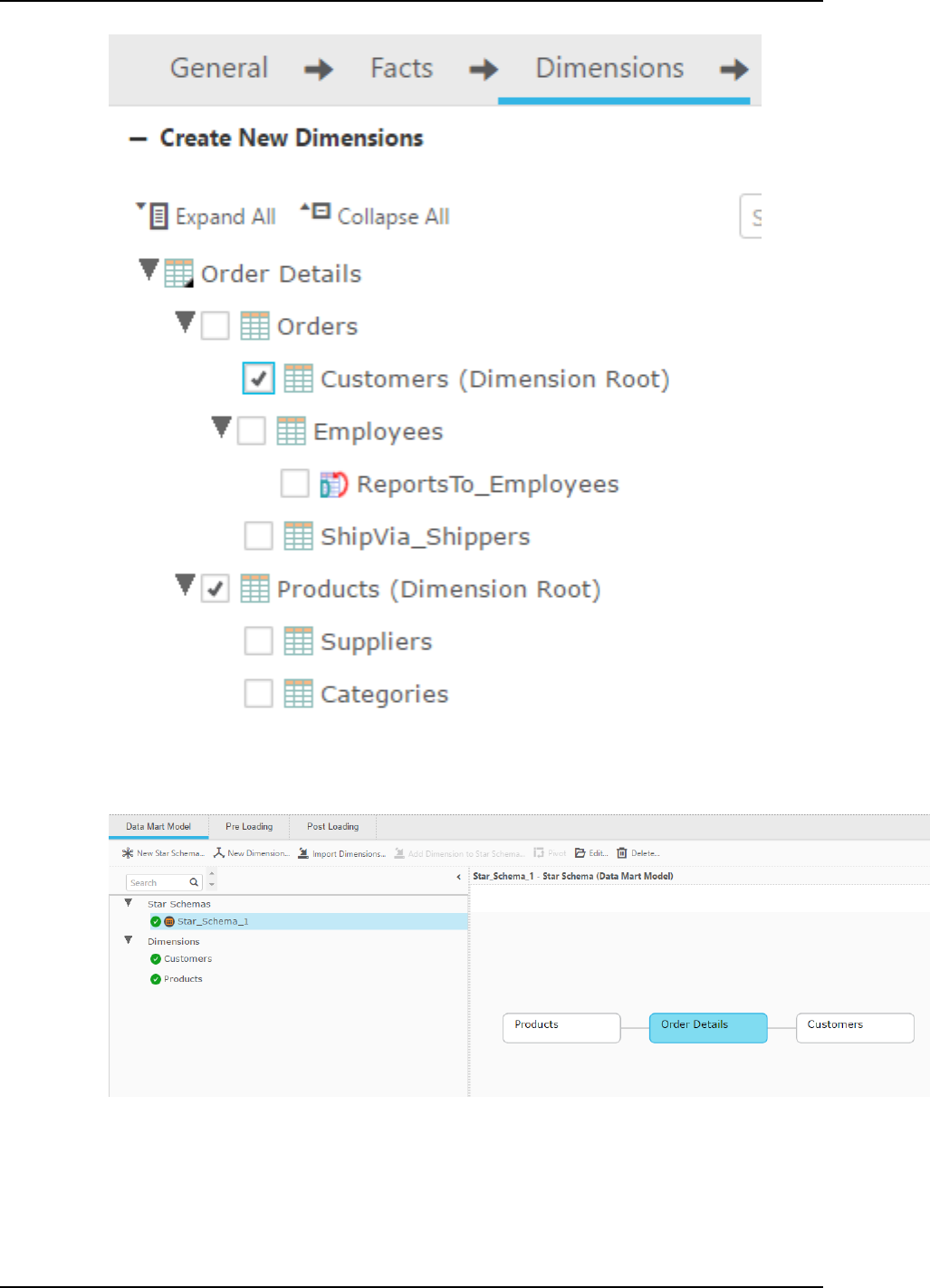

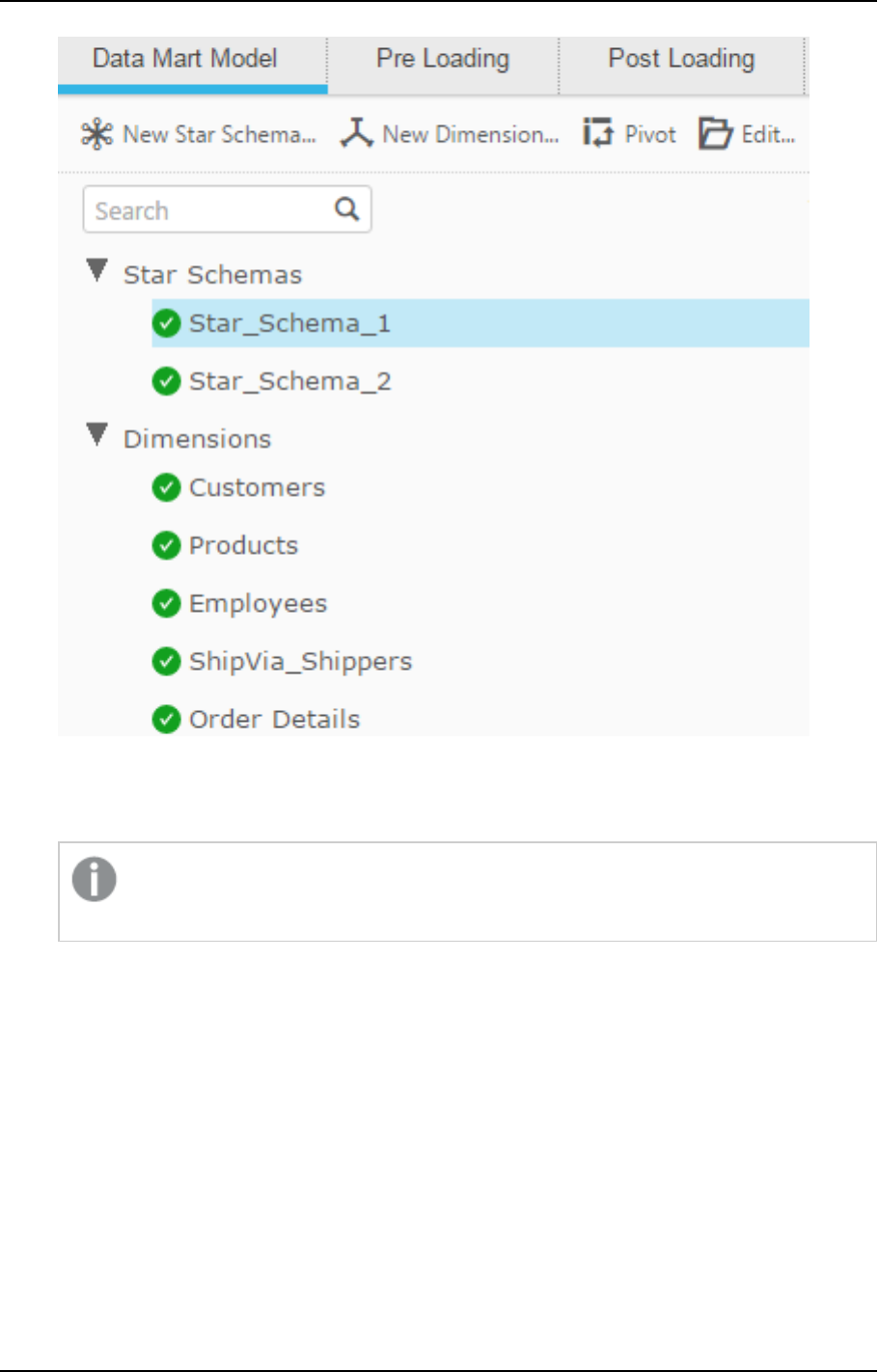

Referenced dimensions

This version introduces support for referencing dimensions. To facilitate this new functionality, a new

Reference selected dimensions option has been added to the Import Dimensions dialog which, together

with the toolbar button, has been renamed to Import and Reference Dimensions.

The ability to reference dimensions improves data mart design efficiency and execution flexibility by

facilitating the reuse of data sets. Reuse of dimension tables across data marts allows you to break up fact

tables into smaller units of work for both design and data loading, while ensuring consistency of data for

analytics.

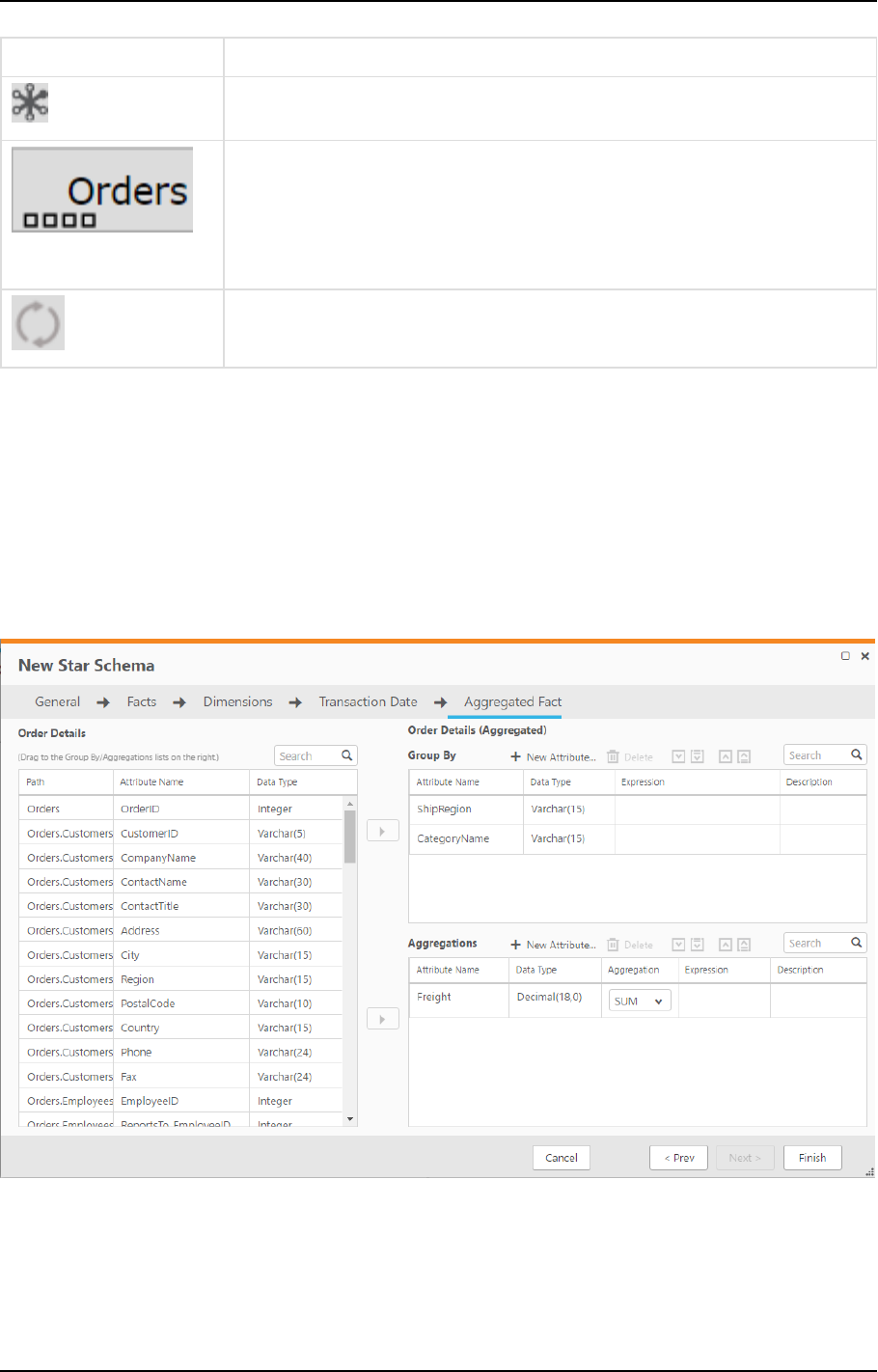

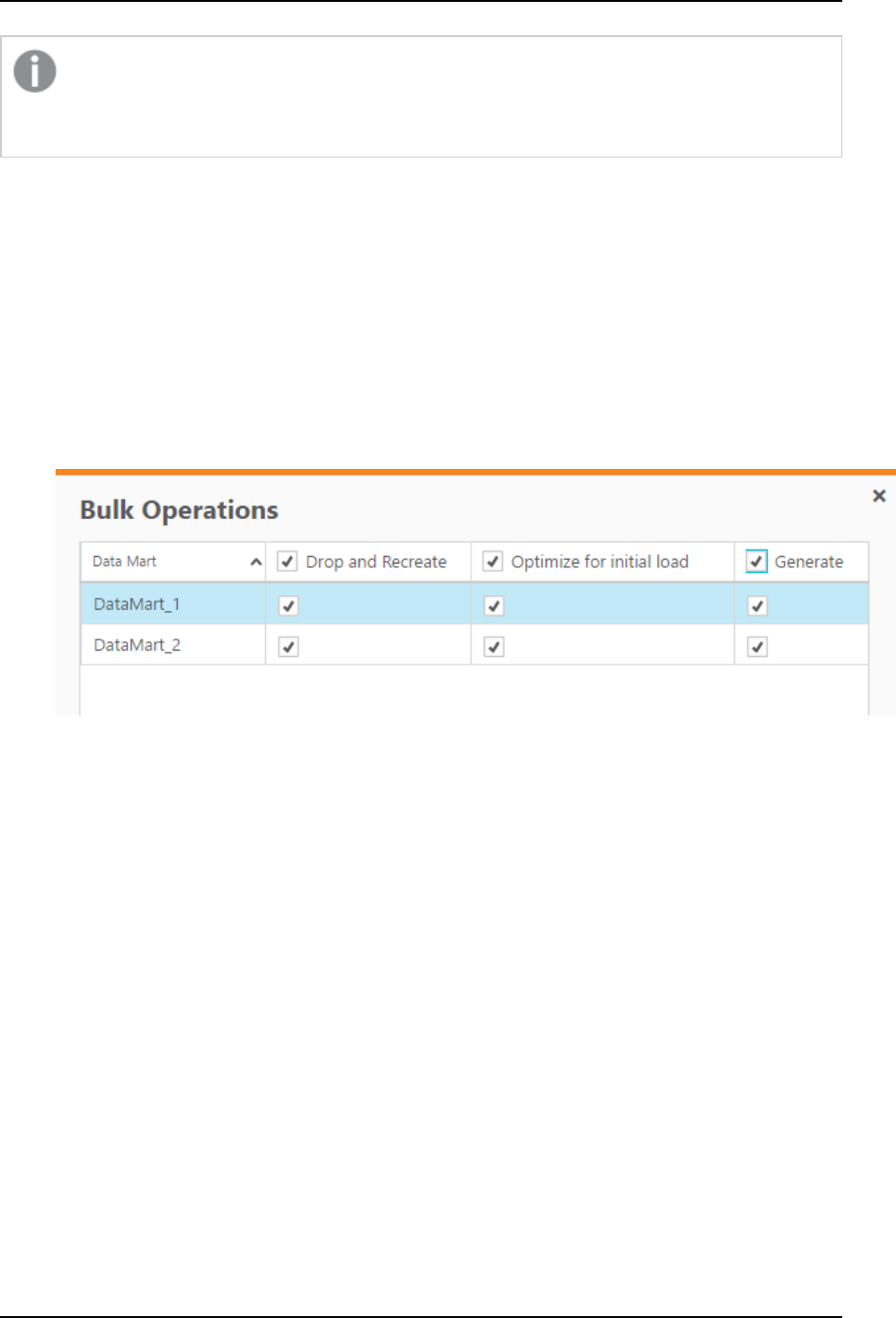

Data mart enhancements

Data mart adjust

This version introduces the following enhancements:

• The automatic data mart adjust feature has been extended to include DROP COLUMN and ADD COLUMN support.
• In previous versions, adding a dimension that did not relate to any fact required the data mart to be dropped and recreated. From this version, such dimensions (including Date and Time dimensions) can be added using auto-adjust.
• The generate_project CLI now supports automatic data mart adjust for specific objects. In previous versions, Compose would adjust the data marts by dropping and recreating the tables, regardless of the required change, which could take a long time to complete. From this version, only the changes are adjusted. For example, if a new column was added to a dimension, only that specific column is added to the data mart tables. To support this new functionality, the --stopIfDatamartsNeedRecreation parameter must be included in the command. If this parameter is omitted and the data mart needs to be adjusted, Compose will drop and recreate the data mart tables as it did in previous versions.
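The difference between recreating tables and adjusting only what changed can be illustrated with a minimal sketch. It compares the old and new column sets of a table and emits only the ADD COLUMN and DROP COLUMN statements that are needed. This is not how Compose is implemented; the function name, the table and column names, and the generic SQL syntax are all assumptions made for illustration.

```python
def plan_adjustment(table, old_columns, new_columns):
    """Return ALTER TABLE statements covering only the differences.

    old_columns / new_columns map column names to SQL type strings.
    Hypothetical helper for illustration; not part of Compose.
    """
    statements = []
    # Columns present in the new definition but not in the old one are added.
    for name, sql_type in new_columns.items():
        if name not in old_columns:
            statements.append(f"ALTER TABLE {table} ADD COLUMN {name} {sql_type}")
    # Columns that disappeared from the definition are dropped.
    for name in old_columns:
        if name not in new_columns:
            statements.append(f"ALTER TABLE {table} DROP COLUMN {name}")
    return statements


# Hypothetical dimension table before and after a model change.
old = {"customer_id": "INT", "name": "VARCHAR(100)", "fax": "VARCHAR(20)"}
new = {"customer_id": "INT", "name": "VARCHAR(100)", "email": "VARCHAR(255)"}

for stmt in plan_adjustment("dim_customer", old, new):
    print(stmt)
```

Unchanged columns produce no statements, which is why an adjust of this kind can be much cheaper than dropping and recreating the table.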

Data mart reloading

This version introduces the ability to reload the data mart or parts of the data mart without dropping and

recreating it, thereby eliminating costly and lengthy reloading of the data mart while maximizing data

availability. Such operations should usually be performed after a column with history has been added by the

automatic adjust operation.

To facilitate this, a new mark_reload_datamart_on_next_run CLI has been developed. The new CLI

allows users to mark dimensions and facts to be reloaded on the next data mart run. These can either be

specific dimensions and facts or multiple dimensions and facts (either from the same data mart or different

data marts) using a CSV file.

Microsoft Azure Synapse Analytics Enhancements

A number of changes related to statistics have been implemented. In addition, several statements are now

tagged with an identifier label for troubleshooting 'problem queries' and identifying possible ways to optimize

database settings. Moreover, the addition of labels to ELT queries enables fine-grained workload management

and workload isolation via Synapse WORKLOAD GROUPS and CLASSIFIERS.

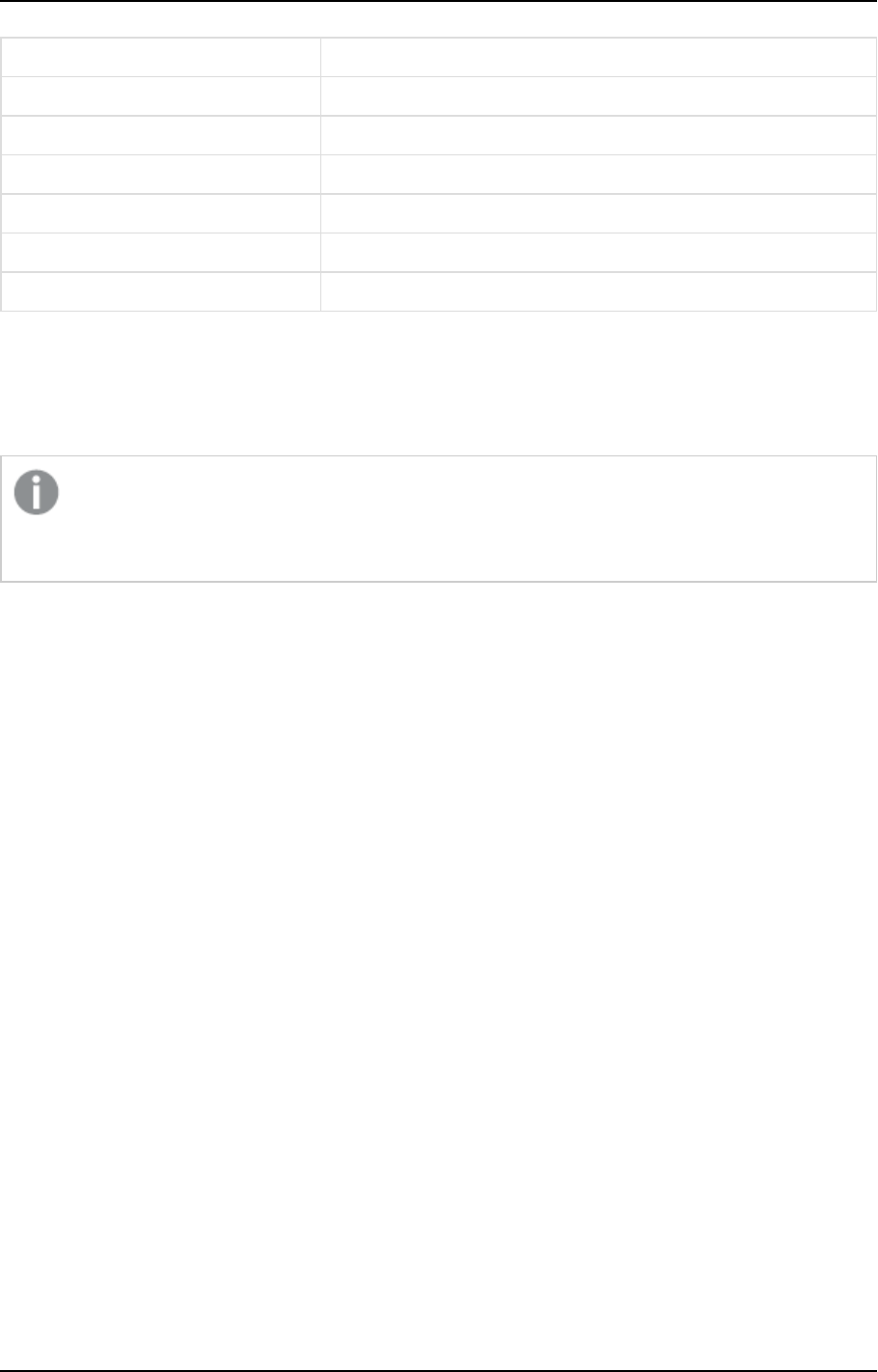

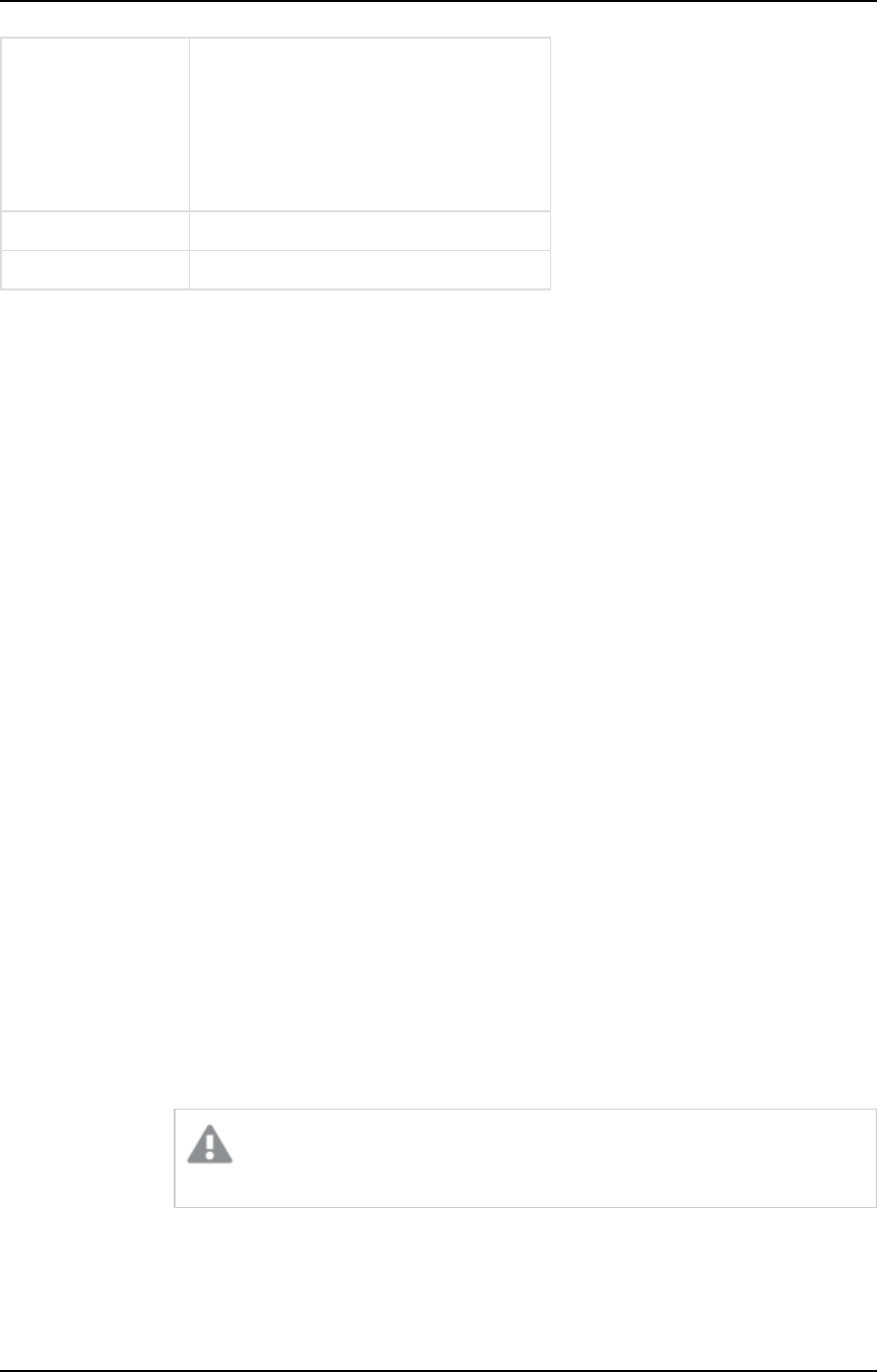

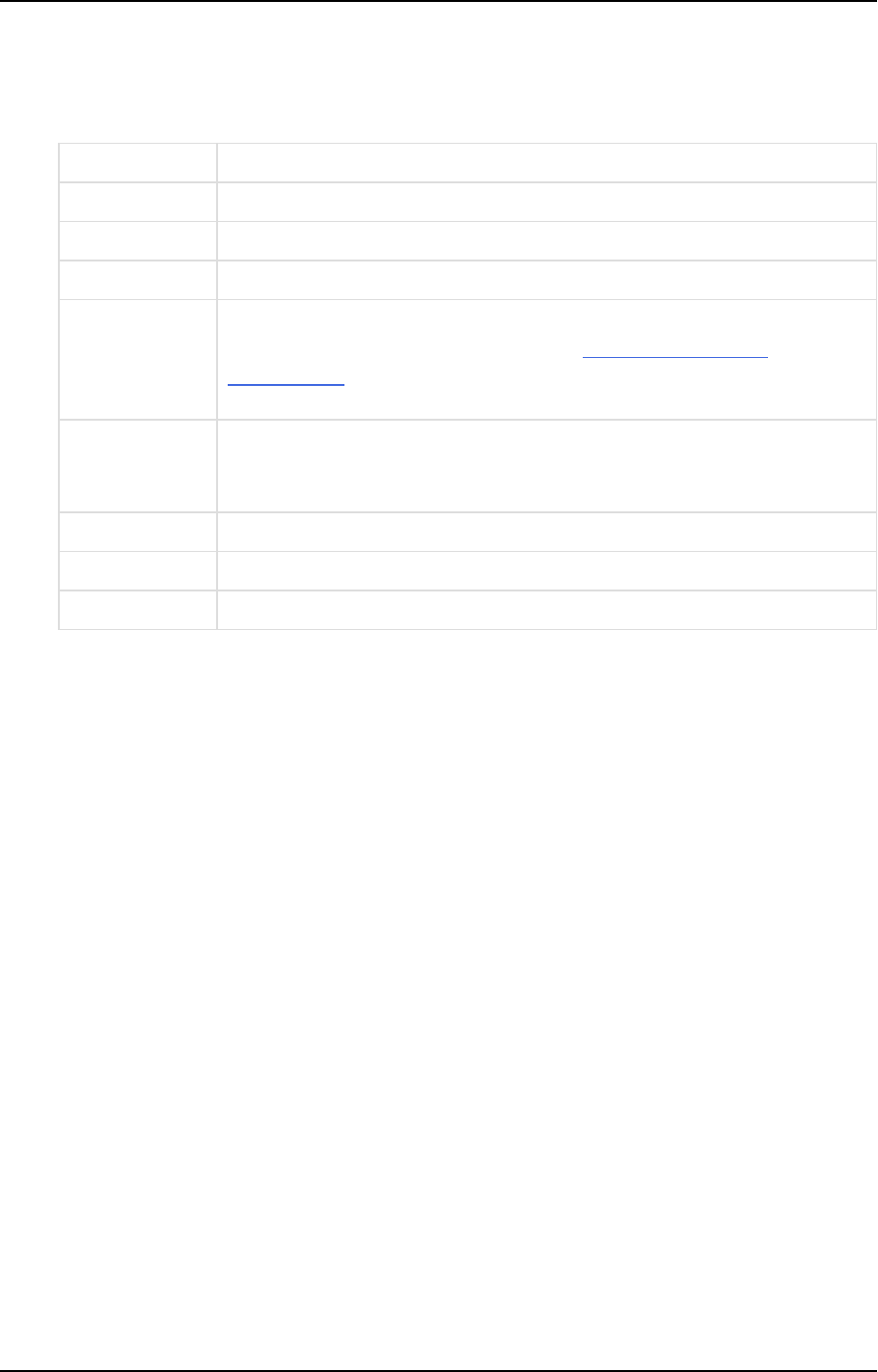

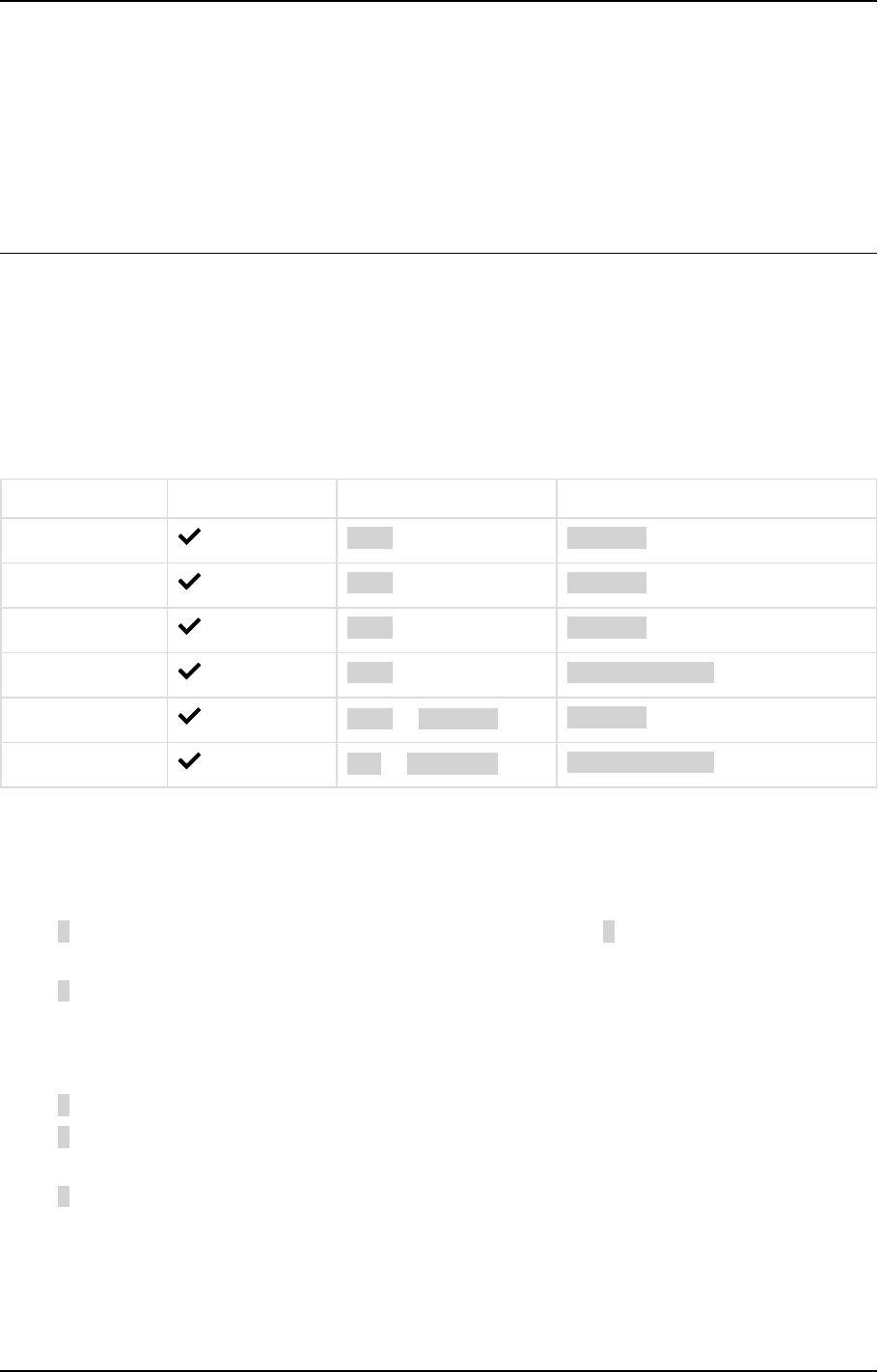

The identifier labels are as follows:

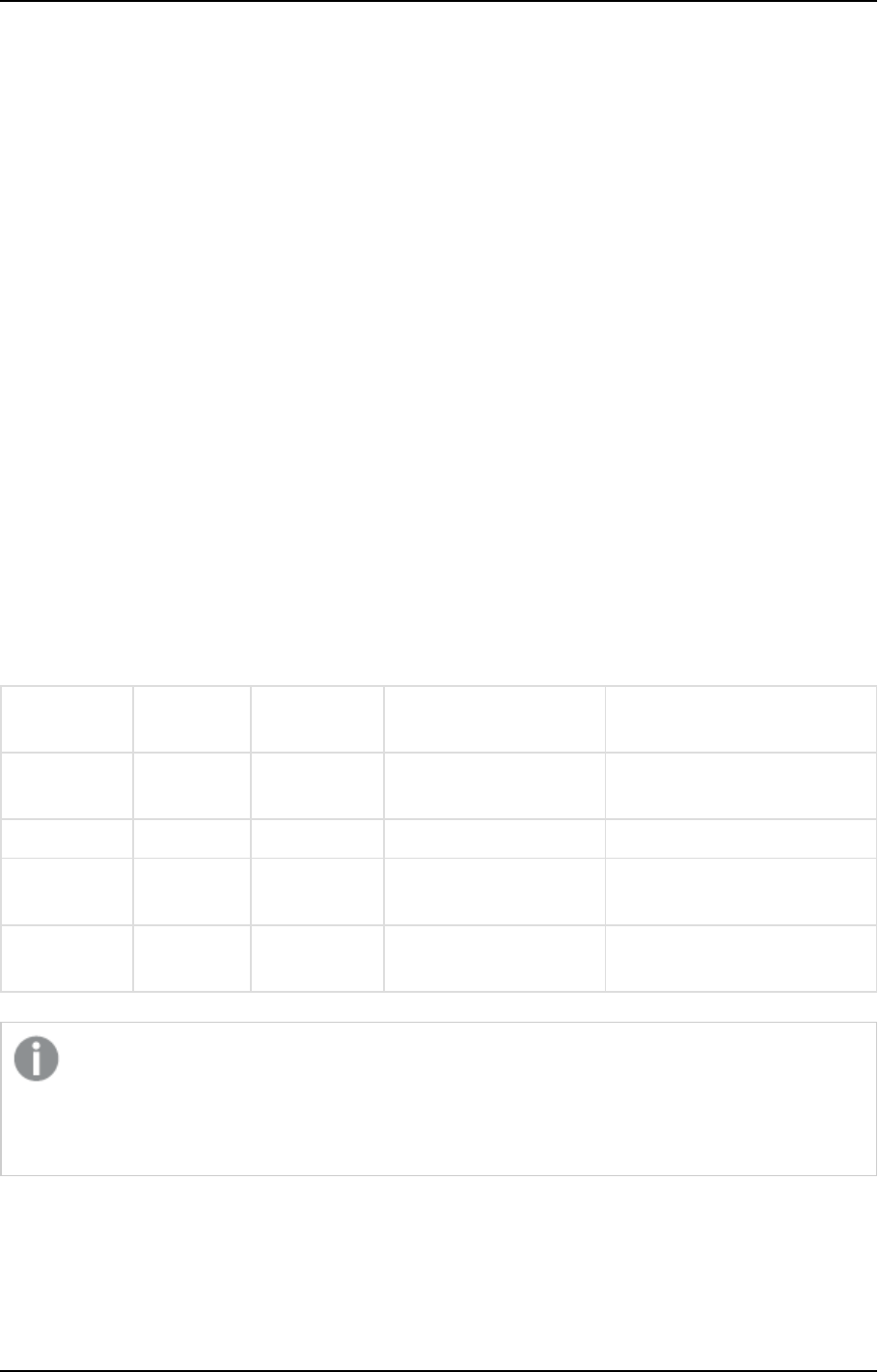

| Table type | Tag |
| --- | --- |
| Hubs | `CMPS_HubIns` |
| Satellites | `CMPS_SatIns` |
| Type1 dimensions | `CMPS_<data mart name>_DimT1_Init` / `CMPS_<data mart name>_DimT1_Incr` |
| Type2 dimensions | `CMPS_<data mart name>_DimT2_Init` / `CMPS_<data mart name>_DimT2_Incr` |
| Transactional facts | `CMPS_<data mart name>_FctTra_Init` / `CMPS_<data mart name>_FctTra_Incr` |
| State-oriented facts | `CMPS_<data mart name>_FctStO_Init` |
| Aggregated facts | `CMPS_<data mart name>_FctAgg_Init` |
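When troubleshooting, it can help to derive the expected label for a given table type and then look for it in the Synapse request history. The following sketch builds labels from the patterns listed above and produces a query against the `sys.dm_pdw_exec_requests` view, which exposes a `label` column in dedicated SQL pools. The function names and table-type keys are hypothetical, and the sketch assumes that the data mart name appears in the label exactly as defined in Compose.

```python
# Table-type codes taken from the label patterns listed above.
MART_CODES = {
    "type1_dimension": "DimT1",
    "type2_dimension": "DimT2",
    "transactional_fact": "FctTra",
    "state_oriented_fact": "FctStO",
    "aggregated_fact": "FctAgg",
}
# Only these table types are listed with an incremental (_Incr) variant.
HAS_INCREMENTAL = {"type1_dimension", "type2_dimension", "transactional_fact"}


def expected_label(table_type, data_mart=None, incremental=False):
    """Build the label expected for a table type (hypothetical helper)."""
    if table_type == "hub":
        return "CMPS_HubIns"
    if table_type == "satellite":
        return "CMPS_SatIns"
    code = MART_CODES[table_type]
    if incremental and table_type not in HAS_INCREMENTAL:
        raise ValueError(f"No incremental label is listed for {table_type}")
    phase = "Incr" if incremental else "Init"
    return f"CMPS_{data_mart}_{code}_{phase}"


def requests_query(label):
    """Return a T-SQL query that finds requests tagged with the label."""
    safe = label.replace("'", "''")  # escape single quotes in the literal
    return (
        "SELECT request_id, status, total_elapsed_time, command "
        "FROM sys.dm_pdw_exec_requests "
        f"WHERE [label] = '{safe}'"
    )


label = expected_label("type2_dimension", data_mart="Sales", incremental=True)
print(label)
print(requests_query(label))
```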

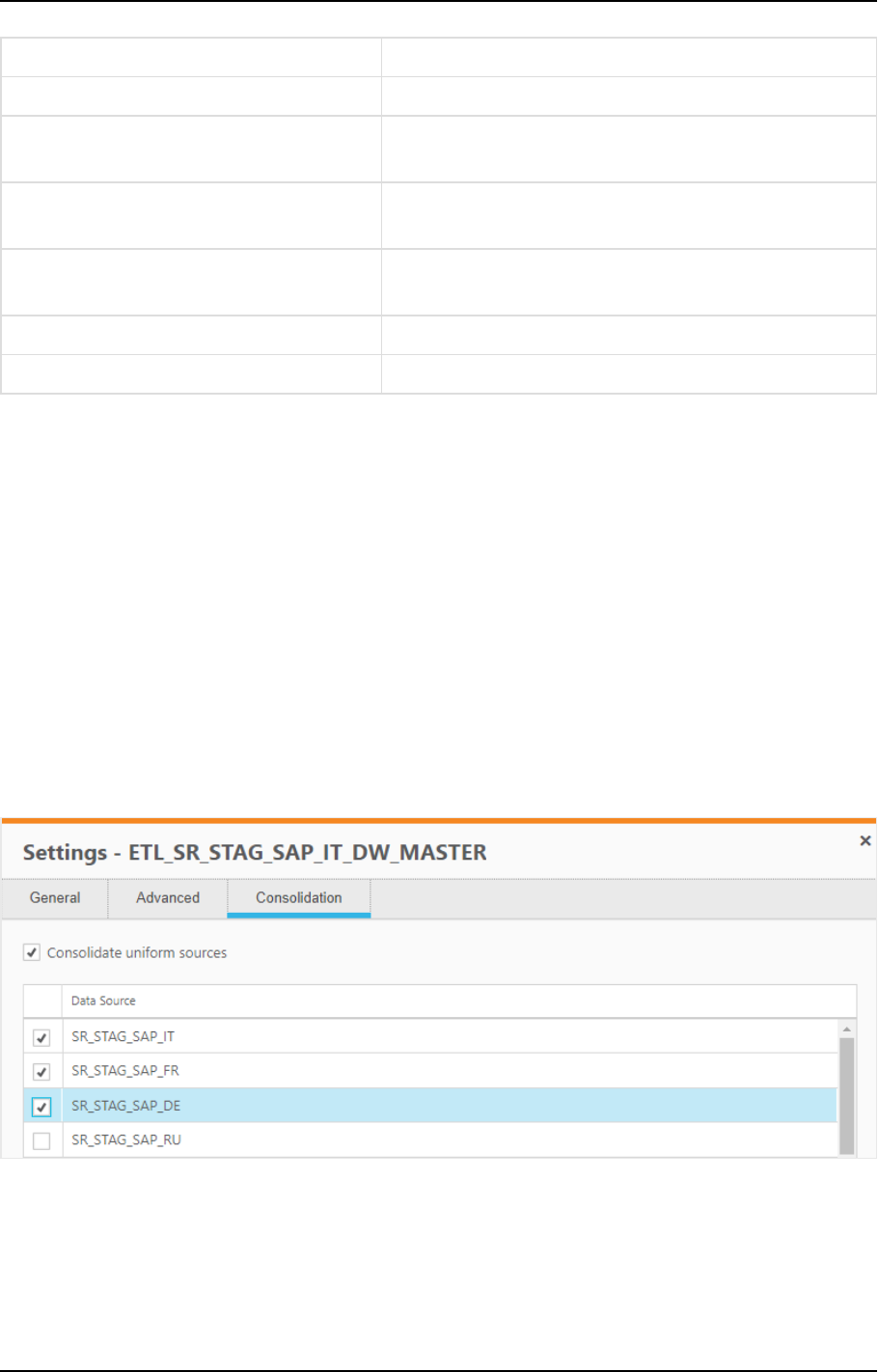

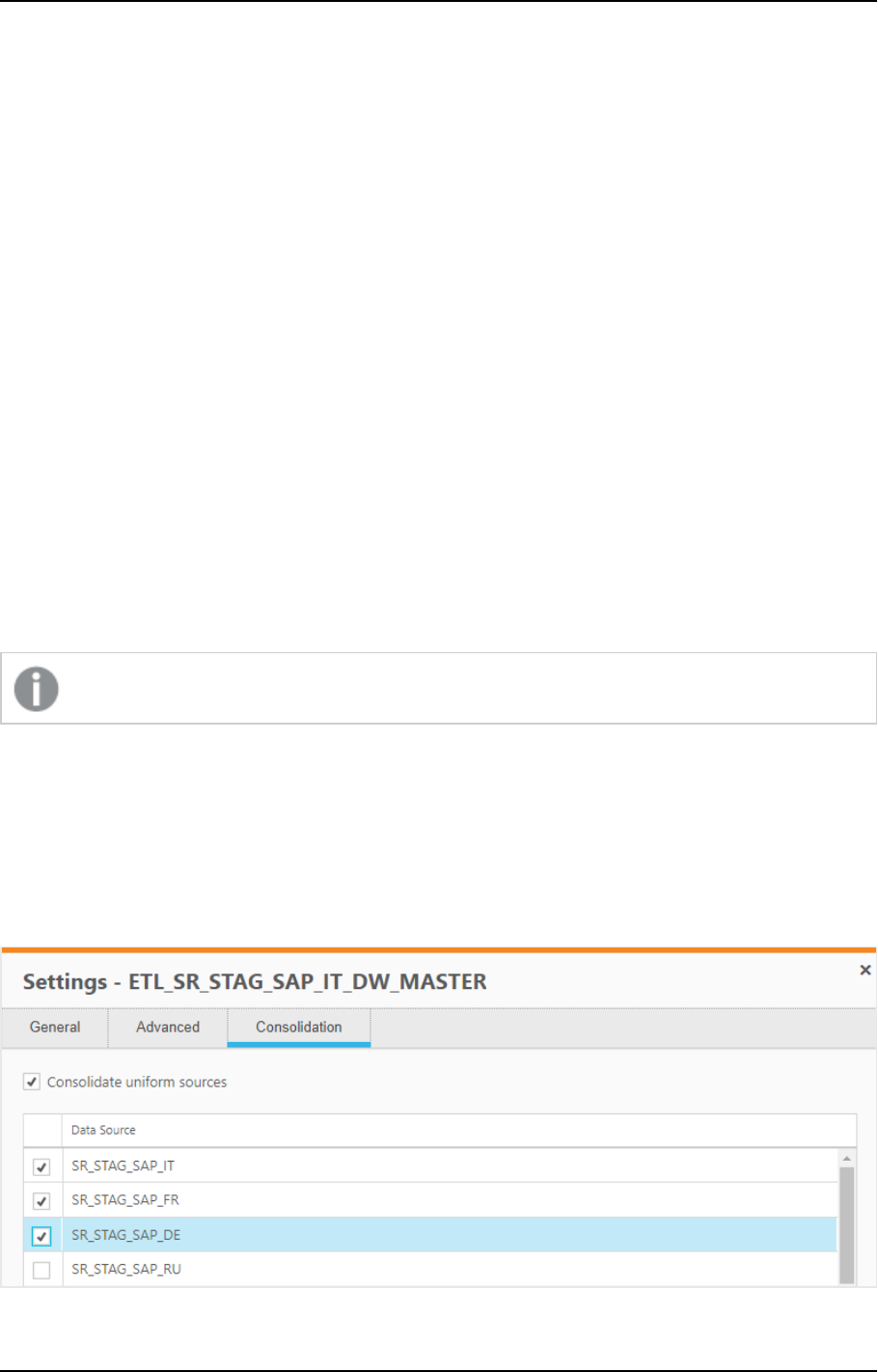

Uniform source consolidation

Uniform source consolidation, as its name suggests, allows you to ingest data from multiple sources into a single, consolidated entity.

To enable uniform source consolidation configuration, a new Consolidation tab has been added to the data

warehouse task settings.

When the Consolidate uniform sources option is enabled, Compose will read from the selected data sources

and write the data to one consolidated entity. This is especially useful if your source data is managed across

several databases with the same structure, as instead of having to define multiple data warehouse tasks (one

for each source), you only need to define a single task that consolidates the data from the selected data

sources.

Consolidation tab showing selected data sources
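Conceptually, consolidating uniform sources means reading rows that share one structure from several databases and writing them to one entity. The sketch below shows that idea only; it is not Compose's implementation. The function name, the sample data, and the added `source_name` column (used here to keep track of where each row came from) are assumptions.

```python
def consolidate(sources):
    """Merge rows from identically structured sources into one list.

    sources maps a source name to a list of row dictionaries.
    Hypothetical helper for illustration; not part of Compose.
    """
    consolidated = []
    expected_columns = None
    for source_name, rows in sources.items():
        for row in rows:
            columns = set(row)
            if expected_columns is None:
                expected_columns = columns
            elif columns != expected_columns:
                # Uniform consolidation only makes sense for a shared structure.
                raise ValueError(f"{source_name} does not match the shared structure")
            # Tag each row with its origin (an assumption of this sketch).
            consolidated.append({**row, "source_name": source_name})
    return consolidated


# Two hypothetical regional databases with the same Orders structure.
sources = {
    "orders_emea": [{"order_id": 1, "amount": 120.0}],
    "orders_apac": [{"order_id": 1, "amount": 75.5}, {"order_id": 2, "amount": 10.0}],
}
for row in consolidate(sources):
    print(row)
```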

Environment variables

Environment variables allow developers to build more portable expressions, custom ETLs, and Compose

configurations, which is especially useful when working with several environments such as DTAP

(Development, Testing, Acceptance and Production). Different environments (for example, development and

production) often have environment-specific settings such as database names, schema names, and Replicate

task names. Variables allow you to easily move projects between different environments without needing to

manually configure the settings for each environment. This is especially useful if many settings are different

between environments. For each project, you can use the predefined environment variables or create your

own environment variables.
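The portability benefit can be sketched as follows: one expression is written once with placeholders, and each environment supplies its own values. The example uses Python's `string.Template` placeholder syntax, not Compose's own variable syntax (which is not shown in this section), and the variable names and values are hypothetical.

```python
from string import Template

# Hypothetical environment-specific settings.
ENVIRONMENTS = {
    "development": {"database": "dwh_dev", "schema": "staging"},
    "production": {"database": "dwh_prod", "schema": "core"},
}

# One expression, written once, with placeholders instead of fixed names.
EXPRESSION = Template("SELECT * FROM ${database}.${schema}.orders")


def render(environment):
    """Resolve the placeholders using the values of the given environment."""
    return EXPRESSION.substitute(ENVIRONMENTS[environment])


for name in ENVIRONMENTS:
    print(name, "->", render(name))
```

Moving the project to another environment then only requires a different set of values; the expression itself does not change.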

Excluding environment variables from export operations

An option has been added to replace environment-specific settings with the defaults when exporting projects

(CLI) or creating deployment packages.

To facilitate this functionality, the --without_environment_specifics parameter was added to the

export_project_repository CLI and an Exclude environment variable values option was added to the

Create Deployment Package dialog.

Support for data profiling and data quality rules when using Google

Cloud BigQuery

You can now configure data profiling and data quality rules when using Google Cloud BigQuery as a data

warehouse.

Attributes case sensitivity support

In previous versions, attempting to create several attributes with the same name but a different case would result in a duplication error. Such attributes are now created with an integer suffix that increases incrementally for each attribute added with the same name. For example: Sales, SALES_01, and Sales_02.
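The naming behavior in the example above can be reproduced with a short sketch. It treats names that differ only by case as the same name and appends a two-digit suffix to every occurrence after the first. The function name is hypothetical, the two-digit format is inferred from the example, and the sketch does not handle a suffixed name that collides with an existing attribute.

```python
def assign_names(requested):
    """Return the names to create, adding suffixes to case-insensitive duplicates."""
    counts = {}
    assigned = []
    for name in requested:
        key = name.lower()  # names are compared without regard to case
        seen = counts.get(key, 0)
        # The first occurrence keeps its name; later ones get _01, _02, ...
        assigned.append(name if seen == 0 else f"{name}_{seen:02d}")
        counts[key] = seen + 1
    return assigned


print(assign_names(["Sales", "SALES", "Sales"]))
```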

Associating a Replicate task that writes to a Hadoop target

You can now associate a Replicate task that writes to a Hadoop target with the Compose landing.

Performance improvements

This version provides the following performance improvements:

• Validating a model with self-referencing entities is now significantly faster than in previous versions. For instance, it now takes less than a minute (instead of up to two hours) to validate a model with 5500 entities.
• The time it takes to "Adjust" the data warehouse has been significantly reduced. For instance, it now takes less than three minutes (instead of up to two hours) to adjust a data warehouse with 5500 entities.
• Optimized queries, resulting in significantly improved data warehouse loading and CDC performance.
• Significantly improved the loading speed of data mart Type 2 dimensions with more than two entities. To benefit from this improvement, customers upgrading with existing data marts need to regenerate their data mart ETLs.
• Improved performance of data warehouse loading by reducing the number of statements executed when there is no data to process. This change impacts cloud data warehouses such as Snowflake, Amazon Redshift, Google BigQuery, and so on.
  Relevant from Compose May 2022 SR1 only.

Support for Redshift Spectrum external tables

Supported from Compose May 2022 SR1 only.

Customers who want to leverage this support need to create Redshift Spectrum external tables and discover

them. Additionally, when running a CDC task, the new Keep in Change Tables option described above needs

to be turned on.

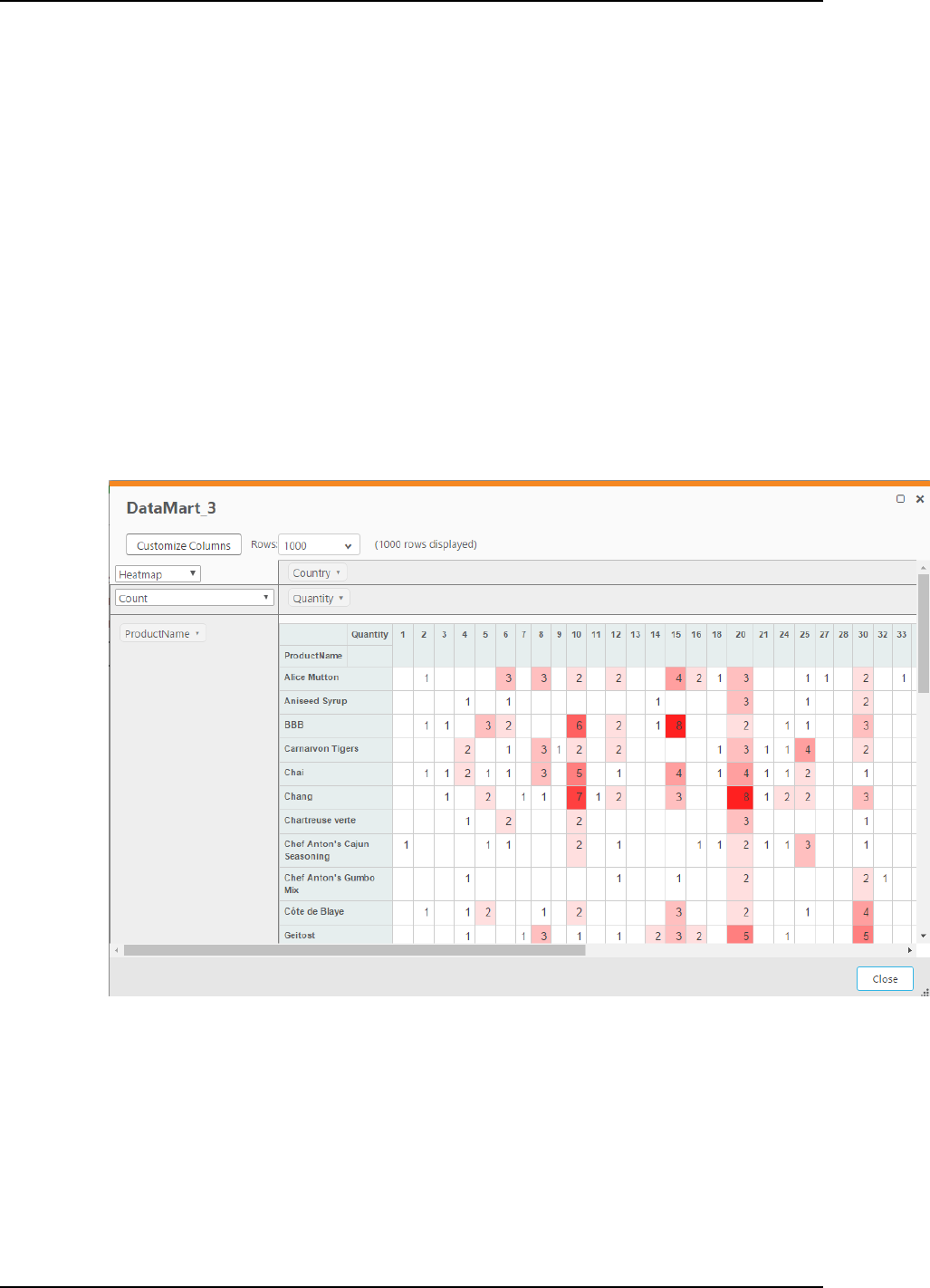

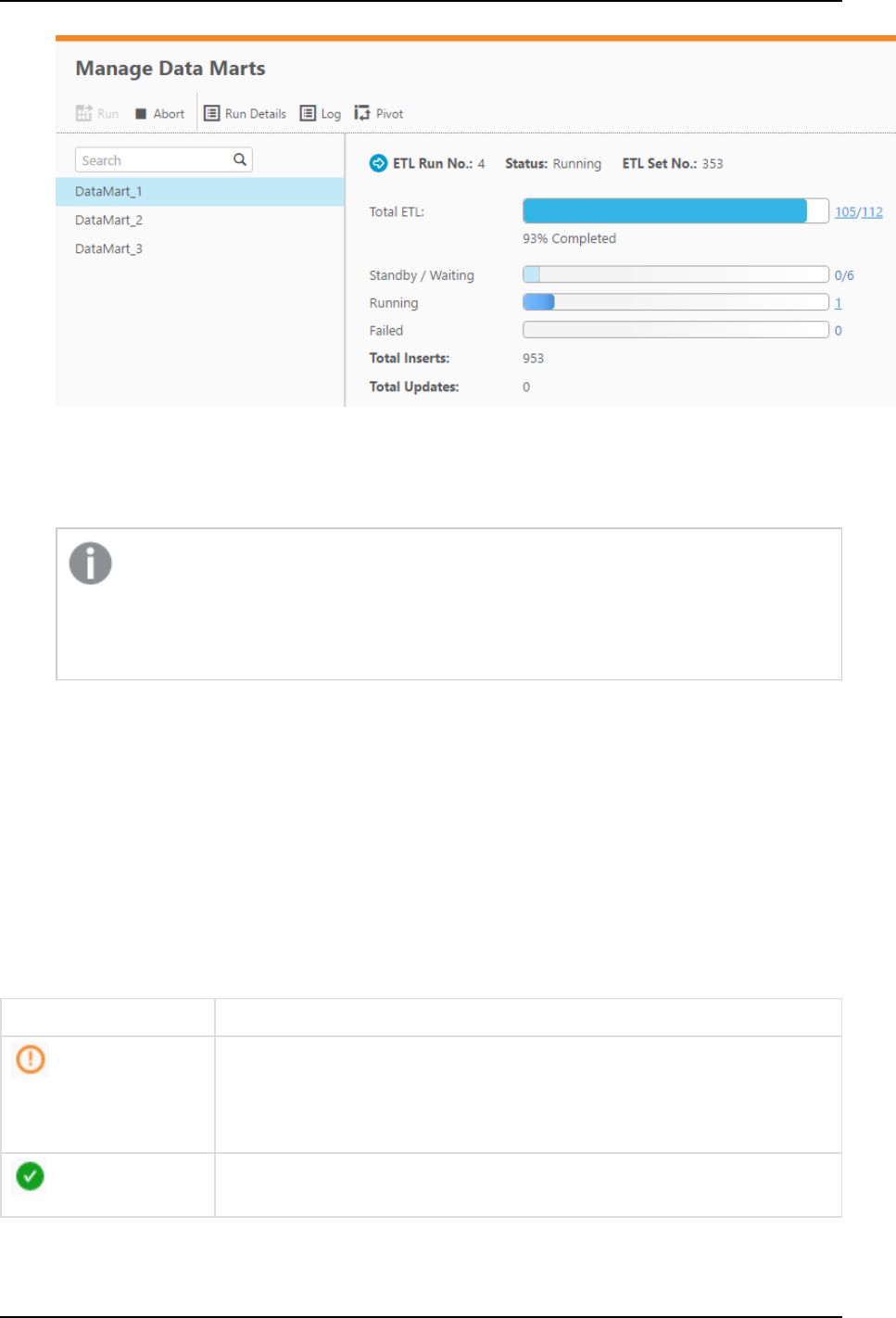

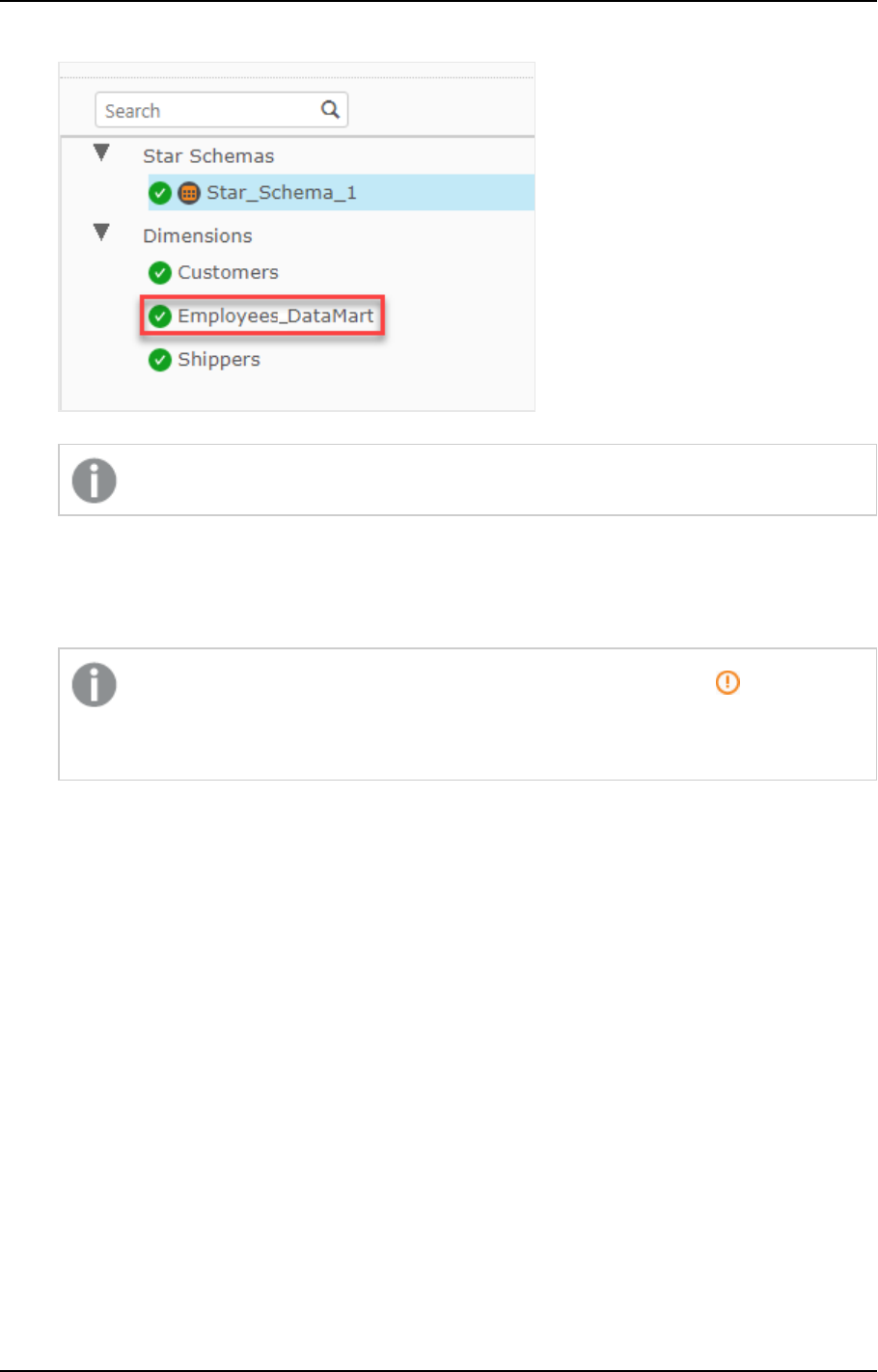

Data mart UX improvement

The Data Mart Dimensions tree and the Star Schema Fact tab were redesigned to provide a better user

experience.

Support for updating custom ETLs using the CLI

This version introduces support for updating custom ETLs using the Compose CLI. This functionality can be

incorporated into a script to easily update Custom ETLs.

Supported from Compose May 2022 SR2 only.

Support for defining a custom data mart schema in Microsoft Azure

Synapse Analytics

Customers working with Microsoft Azure Synapse Analytics can now utilize the Create tables in schema

option (in the data mart settings) to define a custom schema for the data mart tables.

Supported from Compose May 2022 SR2 only.

1.2 What's new in Data Lake projects?

The following section describes the enhancements and new features introduced in Qlik Compose Data Lake

projects.

Support for excluding deleted records from ODS views

A Deleted records in ODS views section has been added to the General tab of the project settings, with the

following options:

• Exclude the corresponding record from the ODS views - This is the default option as records marked as deleted should not usually be included in ODS views.
• Include the corresponding record in the ODS views - Although not common, in some cases, you might want to include records marked as deleted in the ODS views in order to analyze the number of deleted records and investigate the reason for their deletion. Also, regulatory compliance might require you to be able to retrieve the past record status (which requires change history as well).
  As this was the default behavior in previous versions, you might need to select this option to maintain backward compatibility.

Improved Historical Data Store resolution

Supported from Compose May 2022 SR1 only.

In previous versions, HDS resolution was one second. This was problematic at times as multiple changes to a

Primary Key within a second resulted in only the last change appearing in the HDS. To view all the history,

customers were forced to review the landing.

From this version, all changes (history) will be shown in the HDS, facilitating better support for auditing.
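The effect of timestamp resolution on history can be shown with a small simulation. When change timestamps are truncated to whole seconds, several changes to the same key within one second collapse into a single version; with finer resolution, each change is kept. This only illustrates the principle: the sample data, the function name, and the use of microsecond timestamps are assumptions, and the resolution that Compose actually uses is not stated here.

```python
from datetime import datetime

# Three changes to the same primary key within one second (hypothetical data).
changes = [
    ("order-1", datetime(2023, 1, 1, 12, 0, 0, 100000), "created"),
    ("order-1", datetime(2023, 1, 1, 12, 0, 0, 400000), "paid"),
    ("order-1", datetime(2023, 1, 1, 12, 0, 0, 900000), "shipped"),
]


def history(changes, truncate_to_second):
    """Return the stored versions, keyed by primary key and timestamp."""
    versions = {}
    for key, timestamp, value in changes:
        stamp = timestamp.replace(microsecond=0) if truncate_to_second else timestamp
        # A later change with the same (key, stamp) overwrites the earlier one.
        versions[(key, stamp)] = value
    return [versions[k] for k in sorted(versions)]


print(history(changes, truncate_to_second=True))
print(history(changes, truncate_to_second=False))
```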

Associating a Replicate task that writes to a Hortonworks Data Platform

target

You can now associate a Replicate task that writes to a Hortonworks Data Platform target with the Compose

landing connection (in a Cloudera Data Platform (CDP) Compose project).

Databricks projects

New Databricks versions

• Databricks 9.1 LTS is now supported on all cloud providers (AWS, Azure, and Google Cloud Platform).
• Databricks 10.4 LTS is now supported on all cloud providers (AWS, Azure, and Google Cloud Platform).
  Databricks 10.4 LTS is supported from Compose May 2022 SR1 only.

SQL Warehouse compute and Parquet support

Supported from Compose May 2022 SR1 only.

Compose May 2022 SR1 introduces support for SQL Warehouse compute. To benefit from this support,

customers need to use the new Replicate Databricks (Cloud Storage) target endpoint, which is available from

Replicate November 2022. SQL Warehouse compute offers a lower cost alternative to clusters while also

allowing Parquet file format to be used in the Landing Zone.

Support for Unity Catalog

This version introduces support for Databricks Unity Catalog. Customers working with Unity Catalog can now

specify a catalog name both in the Landing connection settings and in the Storage connection settings.

1.3 New features common to both Data Warehouse projects

and Data Lake projects

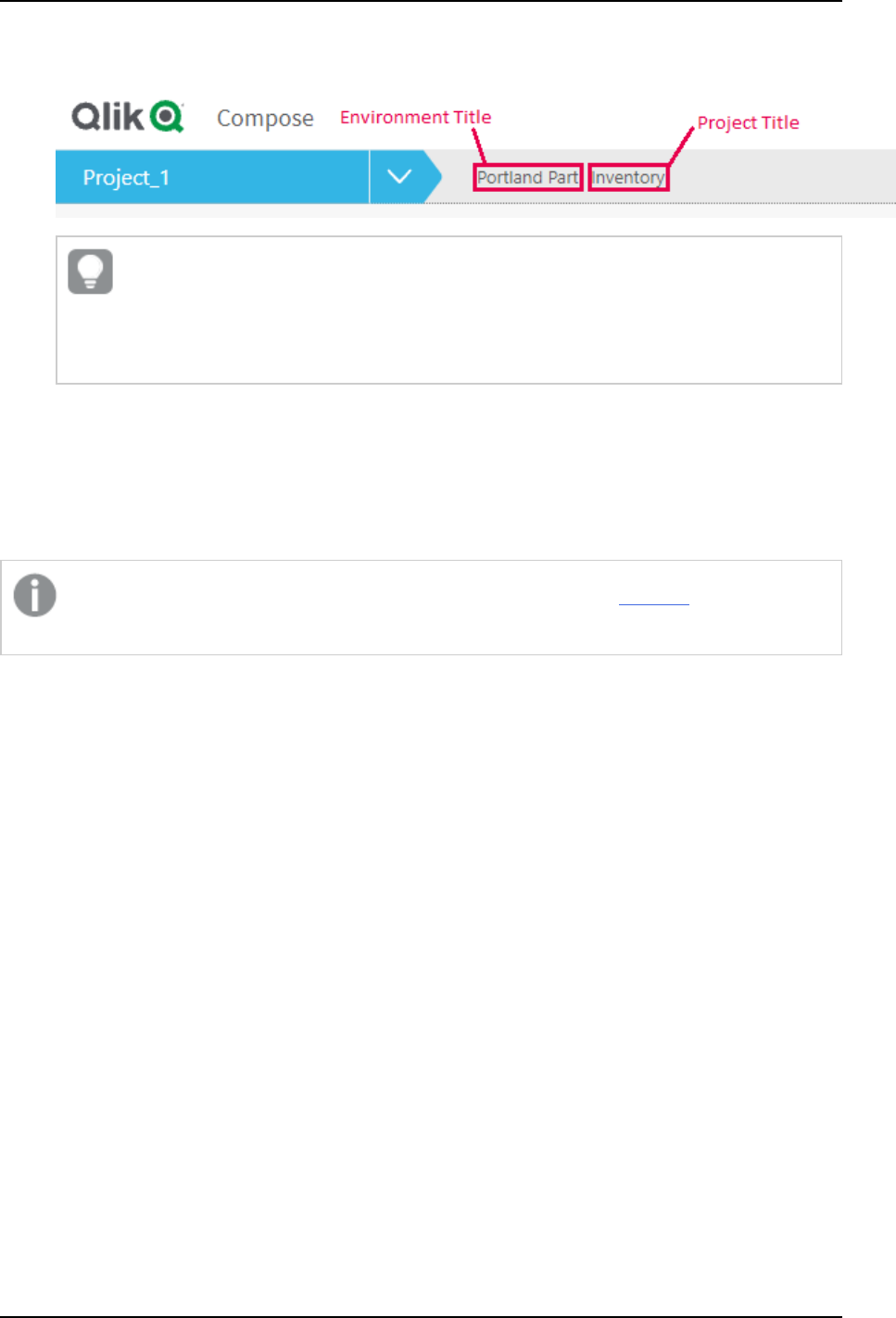

New Project title setting

A new Project title setting has been added to the Environment tab of the project settings. The project title

will be shown in the console banner. If both an Environment Title and a Project Title are defined, the project

title will be displayed to the right of the environment title. Unlike the Environment title and Environment

type, which are unique for each environment, the project title is environment independent. This means that

the project title will always be retained, even when deploying to a different environment.

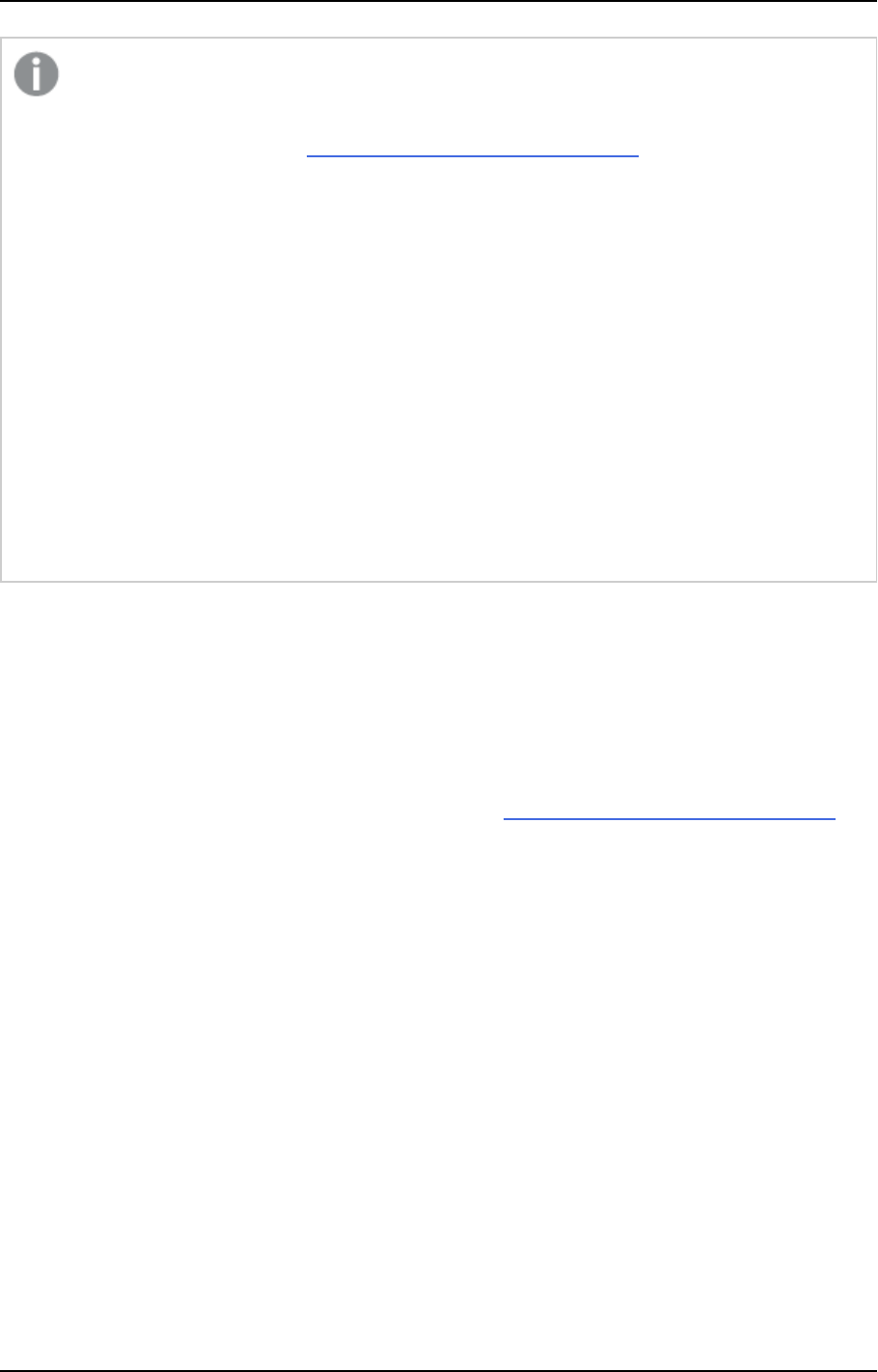

The following image shows the banner with both an Environment title and a Project title:

The banner text is shown without the Environment title and Project title console labels. This

provides greater flexibility as it allows you to add any banner text you like, regardless of the actual

label name. For example, specifying Project owner: Mike Smith in the Project title field,

will display that text in the banner.

Support for Microsoft Edge Browser

This version introduces support for accessing the Compose console using Microsoft Edge.

Windows Server 2022 (64-bit) support

Windows Server 2022 support is available from Compose May 2022 SR1.

Security Hardening

For security reasons, command tasks are now blocked by default. To be able to run command tasks, a

Compose administrator needs to turn on this capability using the Compose CLI. For more information, see the

Compose online help.

This functionality only applies to command tasks created after a clean installation. If you upgrade to

this version, command tasks will continue to work as previously.

Managing user and group roles using the Compose CLI

This feature is available from Compose May 2022 SR1 only.

You can set and update user and group roles using the Compose CLI. You can also remove users and groups

from a role in one of the available scopes (for example, Admin in All Projects). This is especially useful if you

need to automate project deployment.

2 Introduction

Qlik Compose provides an all-in-one, purpose-built automation solution for creating an agile data warehouse and/or ingesting data from multiple sources into your data lake for further downstream processing. To this end, Qlik Compose offers two project types: Data Warehouse and Data Lake. This introduction takes a closer look at how these projects can help your organization overcome the hurdles typically encountered when setting up and maintaining an agile data warehouse, or when ingesting data from multiple sources into a single analytics-ready storage system.

2.1 Data warehouse projects

Traditional methods of designing, developing, and implementing data warehouses require large time and

resource investments. The ETL stand-up development effort alone – multi-month and error-prone with prep

times of up to 80 percent and expertise from specialized developers – often means your data model is out of

date before your BI project even starts. Plus, the result of a traditional data warehouse design, development,

and implementation process is often a system that can’t adapt to continually changing business

requirements. Yet modifying your data warehouse diverts skilled resources from your more innovation-related

projects. Consequently, your business ends up with your data warehouse becoming a bottleneck as much as

an enabler of analytics.

Qlik Compose data warehouse projects allow you to automate these traditionally manual, repetitive data

warehouse tasks: design, development, testing, deployment, operations, impact analysis, and change

management. Qlik Compose automatically generates the task statements, data warehouse structures, and

documentation your team needs to efficiently execute projects while tracking data lineage and ensuring

integrity. Using Qlik Compose, your IT teams can respond fast – in days – to new business requests, providing

accurate time, cost, and resource estimates. Then once projects are approved, your IT staff can finally deliver

completed data warehouses, data marts, and BI environments in far less time.

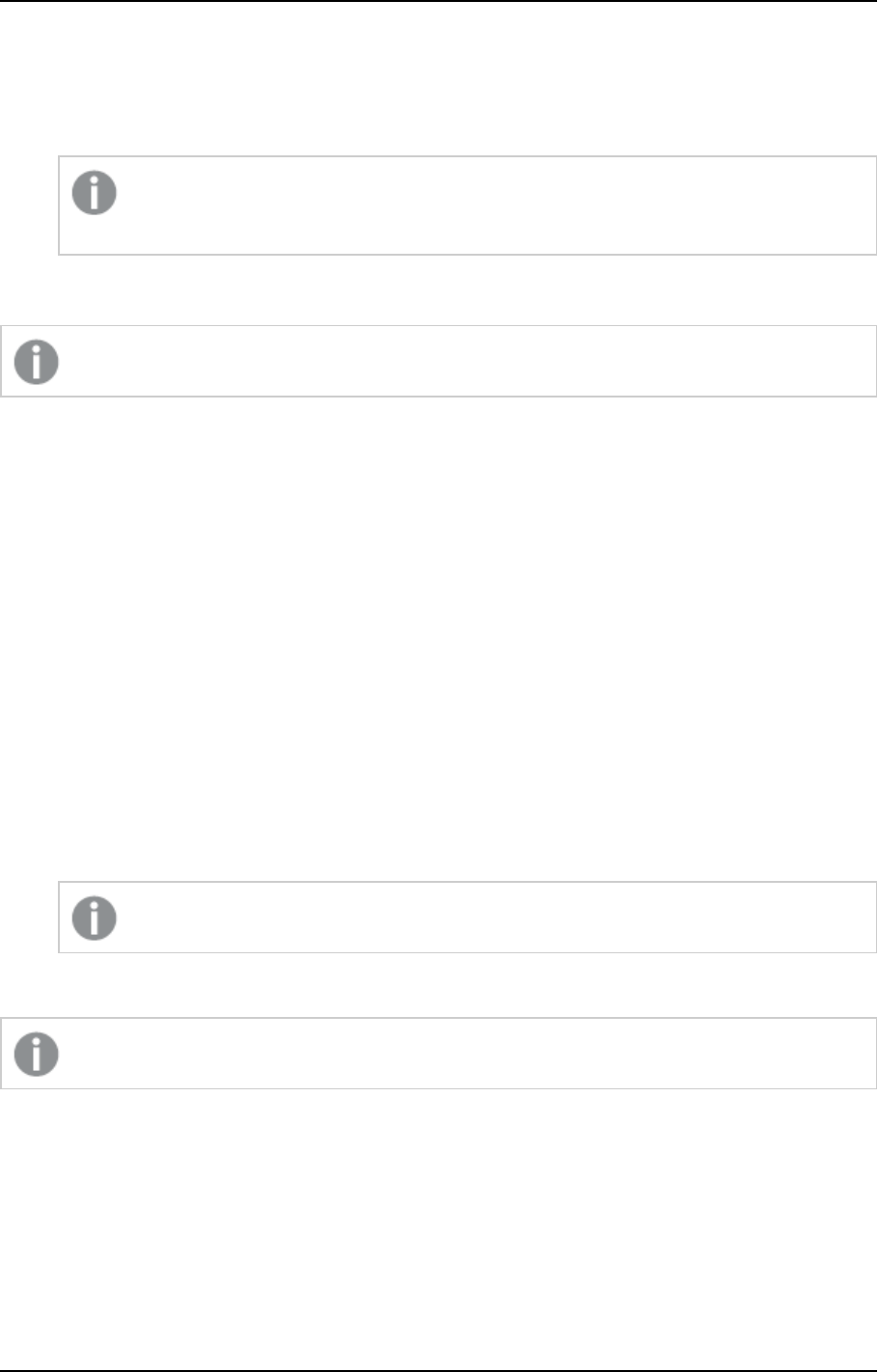

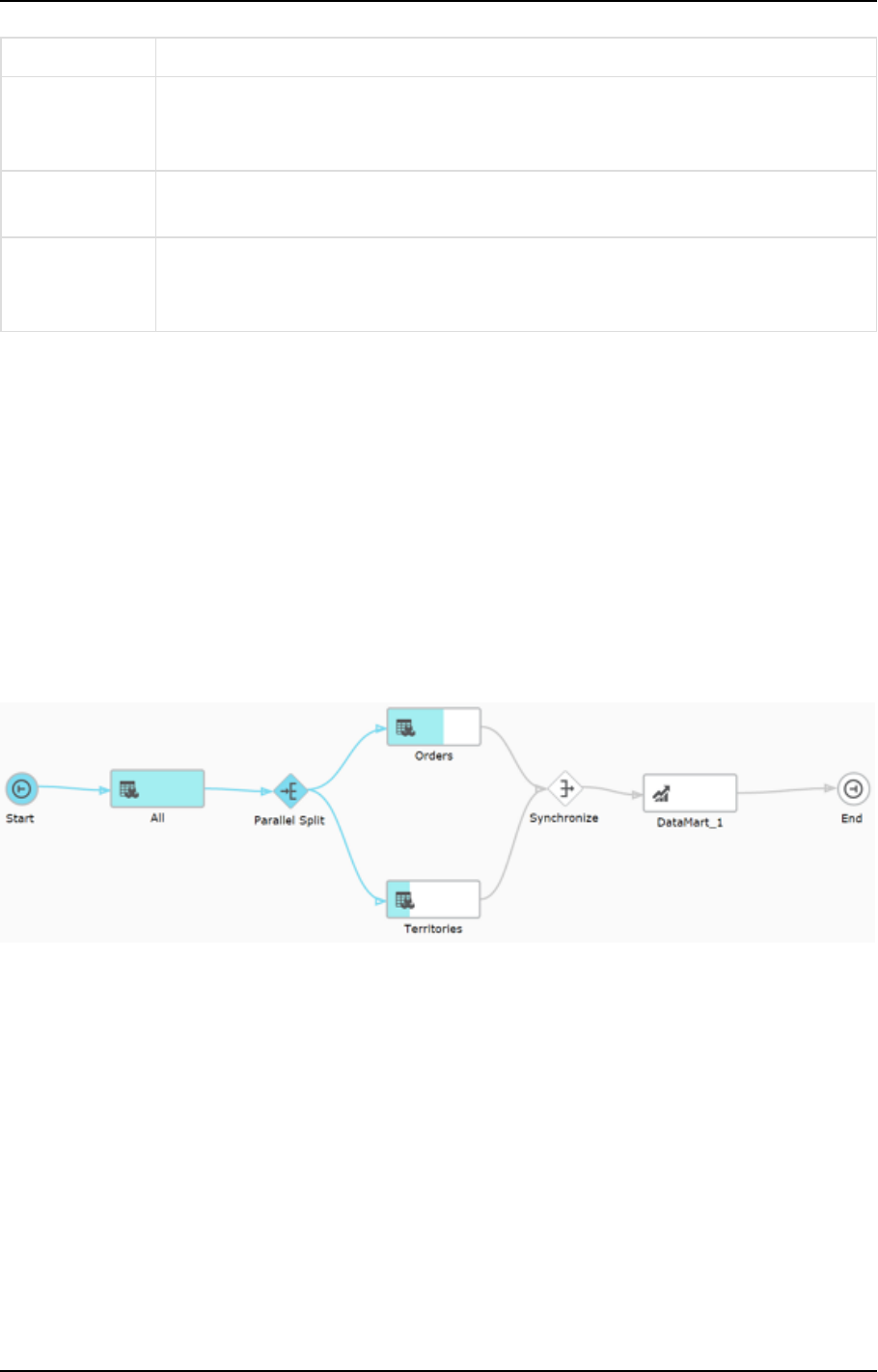

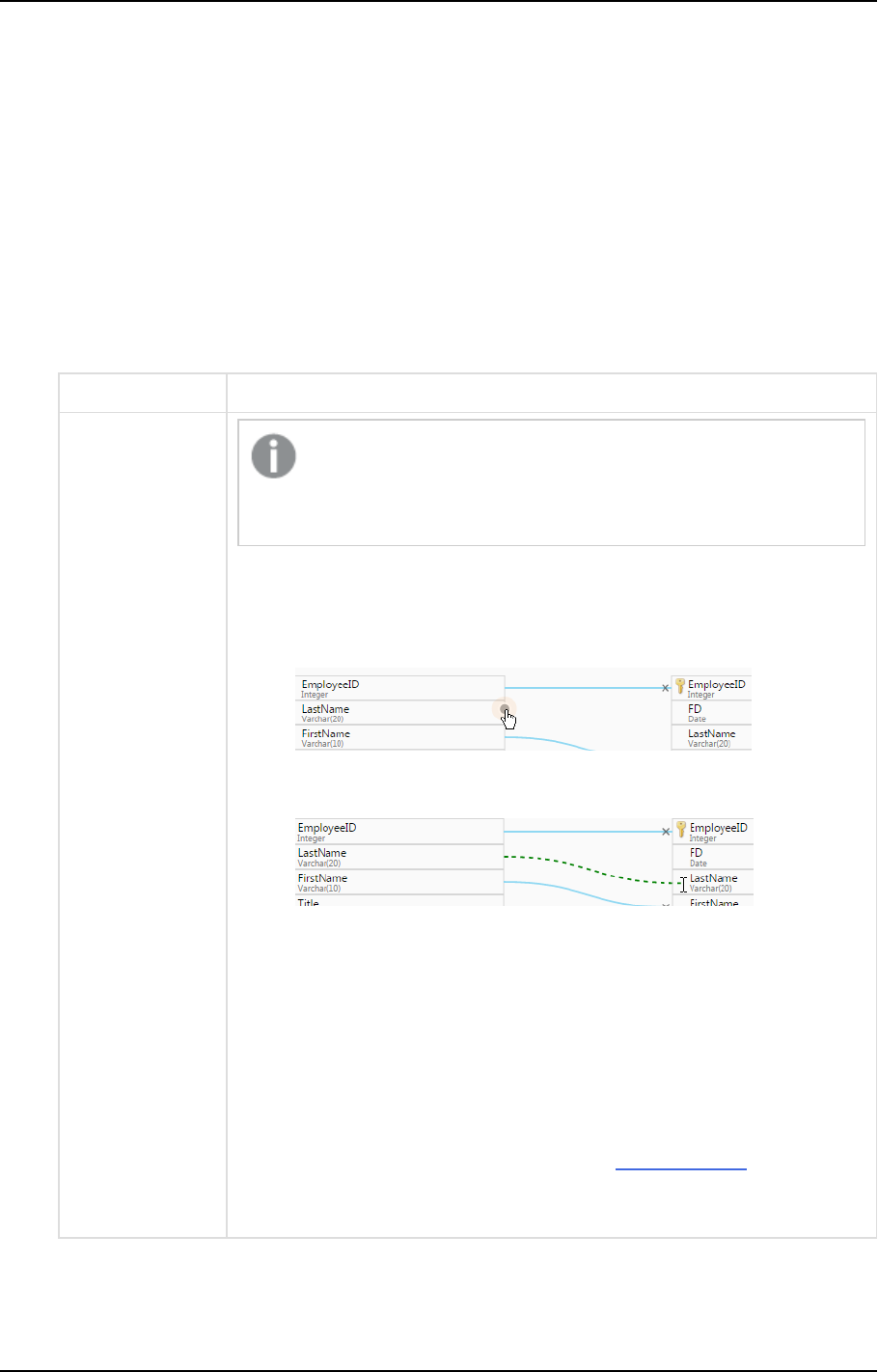

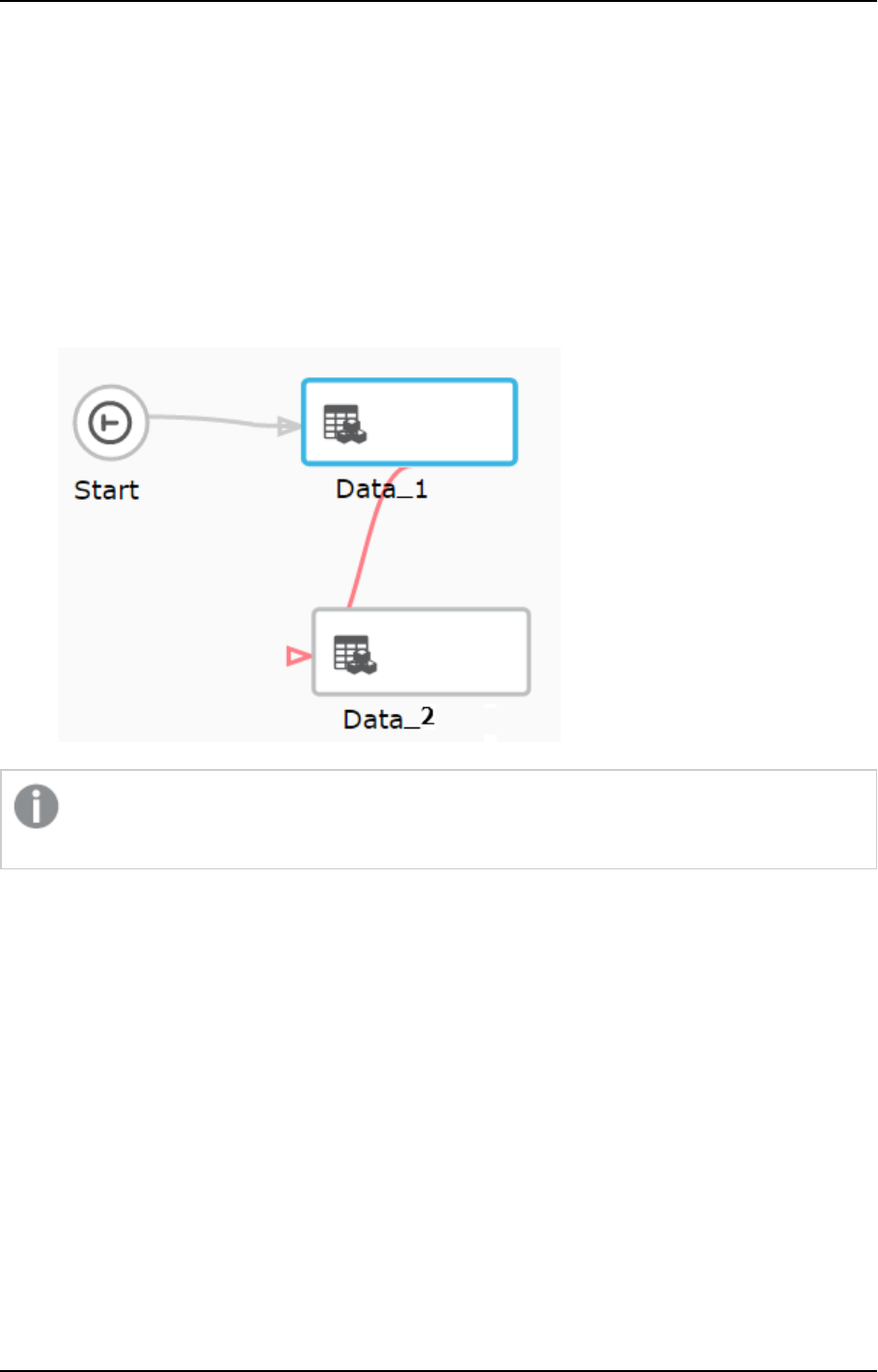

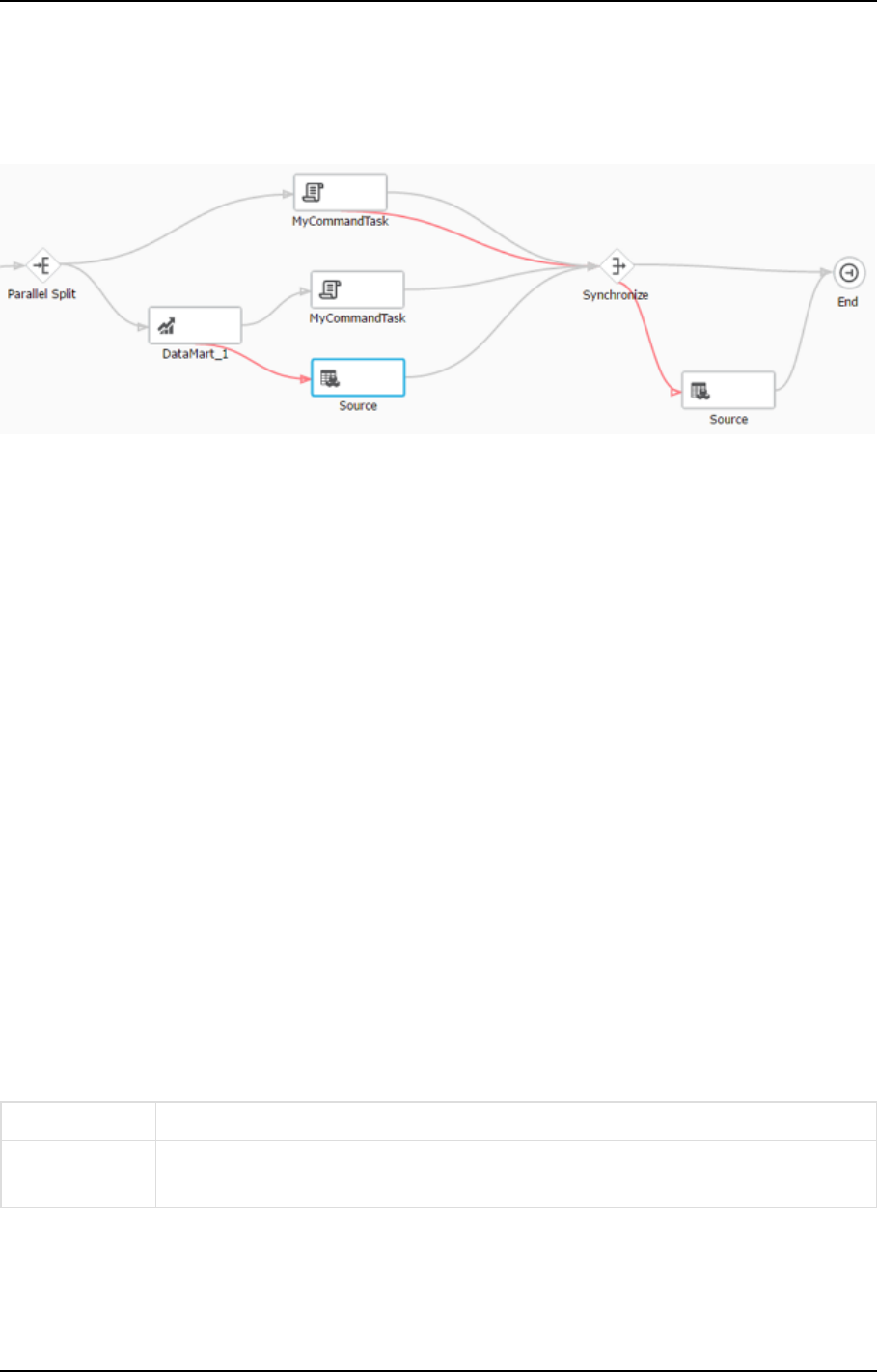

Data warehouse projects architecture

The process is illustrated in the following diagram and described below:

Key features

The comprehensive set of automation features in Qlik Compose simplifies data warehousing projects by eliminating the cumbersome and error-prone manual coding required by the many repetitive steps of legacy data warehouse design and implementation. In addition, Qlik Compose includes the operational features your business needs for ongoing data warehouse and data mart maintenance.

Automation Features

• Optimized for either model-driven or data-driven data warehousing approaches
• Real-time source data integration
• Automated ETL generation
• Physical data warehouse management
• Data mart generation

Operational Features

• Monitoring
• Workflow designer and scheduler
• Notifications
• Data profiling and quality enforcement
• Lineage and impact analysis
• Project documentation generation
• Migration between environments

2.2 Data lake projects

Leverage Qlik Compose data lake projects to automate your data pipelines and create analytics-ready data

sets. By automating data ingestion, schema creation, and continual updates, organizations realize faster time-

to-value from their existing data lake investments.

Easy data structuring and transformation

An intuitive and guided user interface helps you build, model and execute data lake pipelines. Automatically

generate schemas and Hive Catalog structures for operational data stores (ODS) and historical data stores

(HDS) without manual coding.

Continuous updates

Be confident that your ODS and HDS accurately represent your source systems.

• Use change data capture (CDC) to enable real-time analytics with less administrative and processing overhead.
• Efficiently process initial loading with parallel threading.
• Leverage time-based partitioning with transactional consistency to ensure that only transactions completed within a specified time are processed.

Historical data store

Derive analytics-specific data sets from a full historical data store (HDS).

• New rows are automatically appended to HDS as data updates arrive from source systems.
• New HDS records are automatically time-stamped, enabling the creation of trend analysis and other time-oriented analytic data marts.
• Supports data models that include Type-2, slowly changing dimensions.

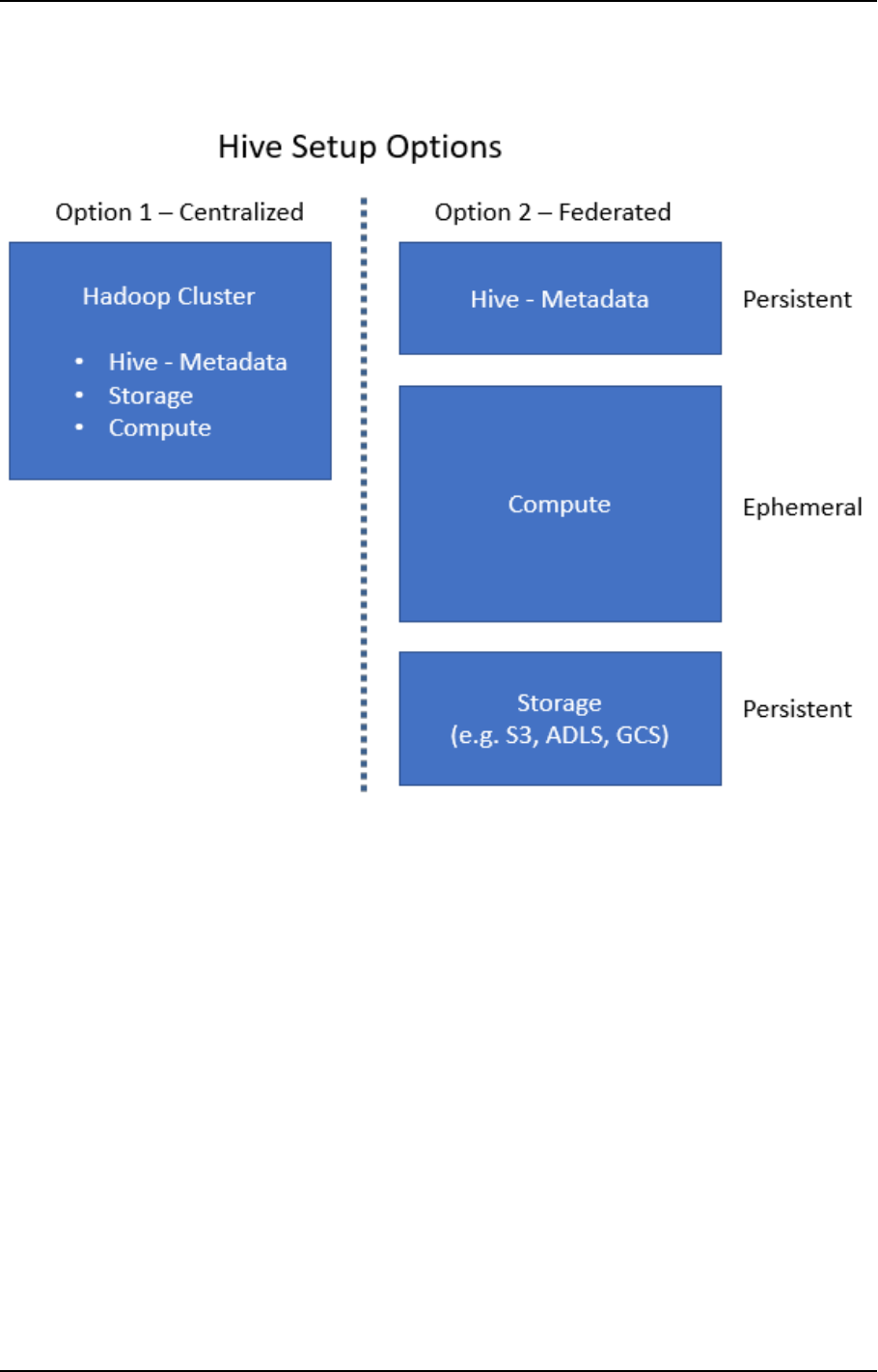

Data lake project architecture

The flow is as follows:

1. Land: The source tables are loaded into the Landing Zone using Qlik Replicate or other third-party

replication tools.

When using Qlik Replicate to move the source table to the Landing Zone, you can define either a Full

Load replication task or a Full Load and Store Changes task to constantly propagate the source table

changes to the Landing Zone in write-optimized format.

2. Store: After the source tables are present in the Landing Zone, Compose auto-generates metadata

based on the data source(s). Once the metadata and the mappings between the tables in the Landing

Zone and the Storage Zone have been finalized, Compose creates and populates the Storage Zone

tables in read-optimized format, ready for consumption by downstream applications.

It should be noted that even though setting up the initial project involves both manual and automatic

operations, once the project is set up, you can automate the tasks by designing a Workflow in Compose

and/or utilizing the Compose scheduler.

3 Qlik Compose installation and setup

This section describes how to install and set up Qlik Compose.

Note that as Qlik Replicate serves as a data (and metadata) provider for Qlik Compose, you also need to install

Replicate in your organization. For a description of the Replicate installation procedure, refer to the Qlik

Replicate Setup and User Guide.

In this section:

• Preparing your system for Compose (page 20)
• Installing or upgrading Compose (page 21)
• Installing and upgrading Compose silently (page 22)
• Determining the required number of database connections (page 24)
• Accessing Qlik Compose (page 25)

3.1 Preparing your system for Compose

Compose should be installed on a Windows Server machine that is able to access the data warehouse and

optionally the source database(s) defined in your Compose project. Note that Compose only needs to access

the source database if you plan to discover the source database when generating your model. For more

information on discovery, see Discovering the Source Database or Landing Zone (page 156).

Before installing Compose, make sure that the following prerequisites have been met:

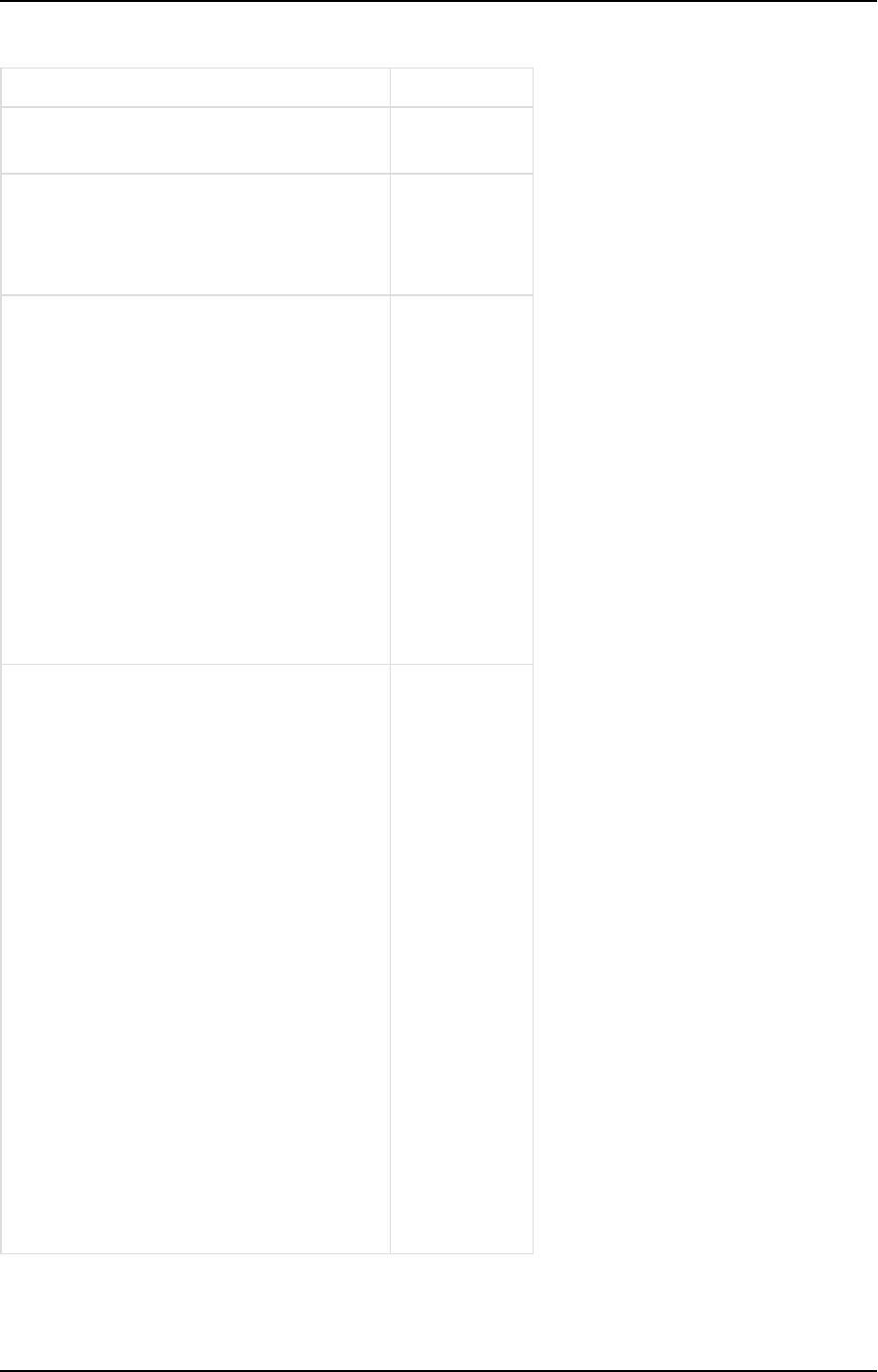

Hardware prerequisites

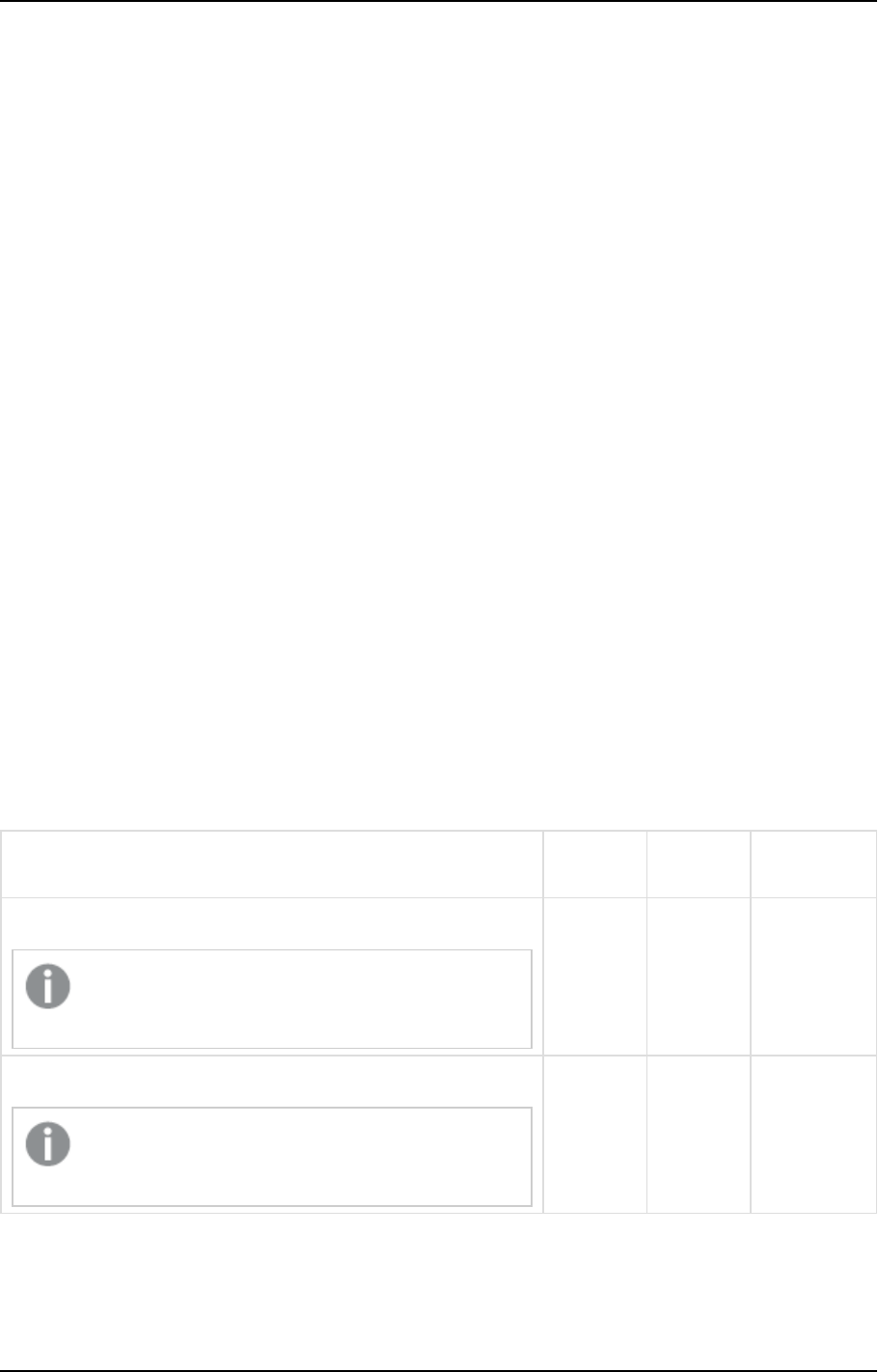

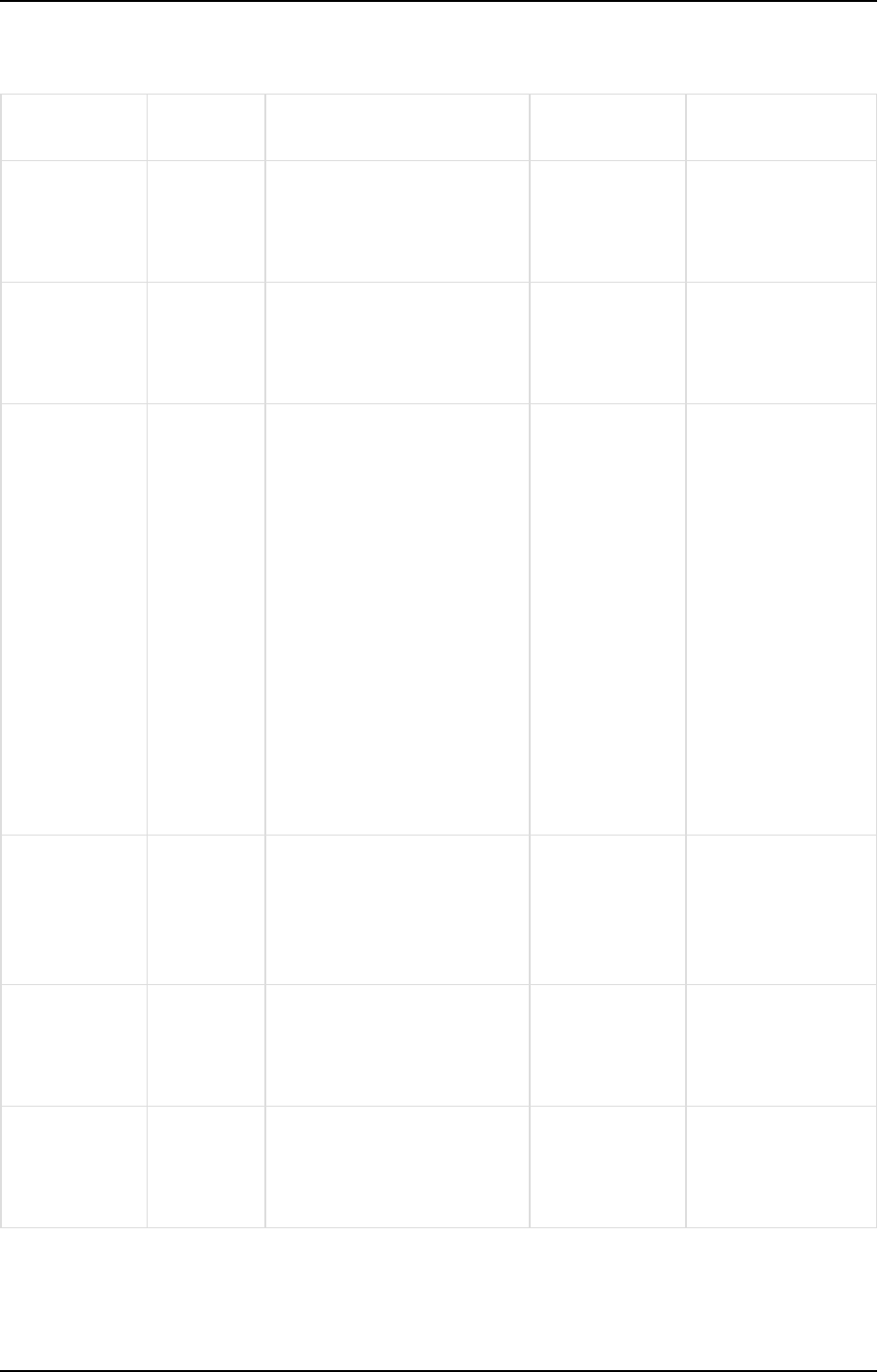

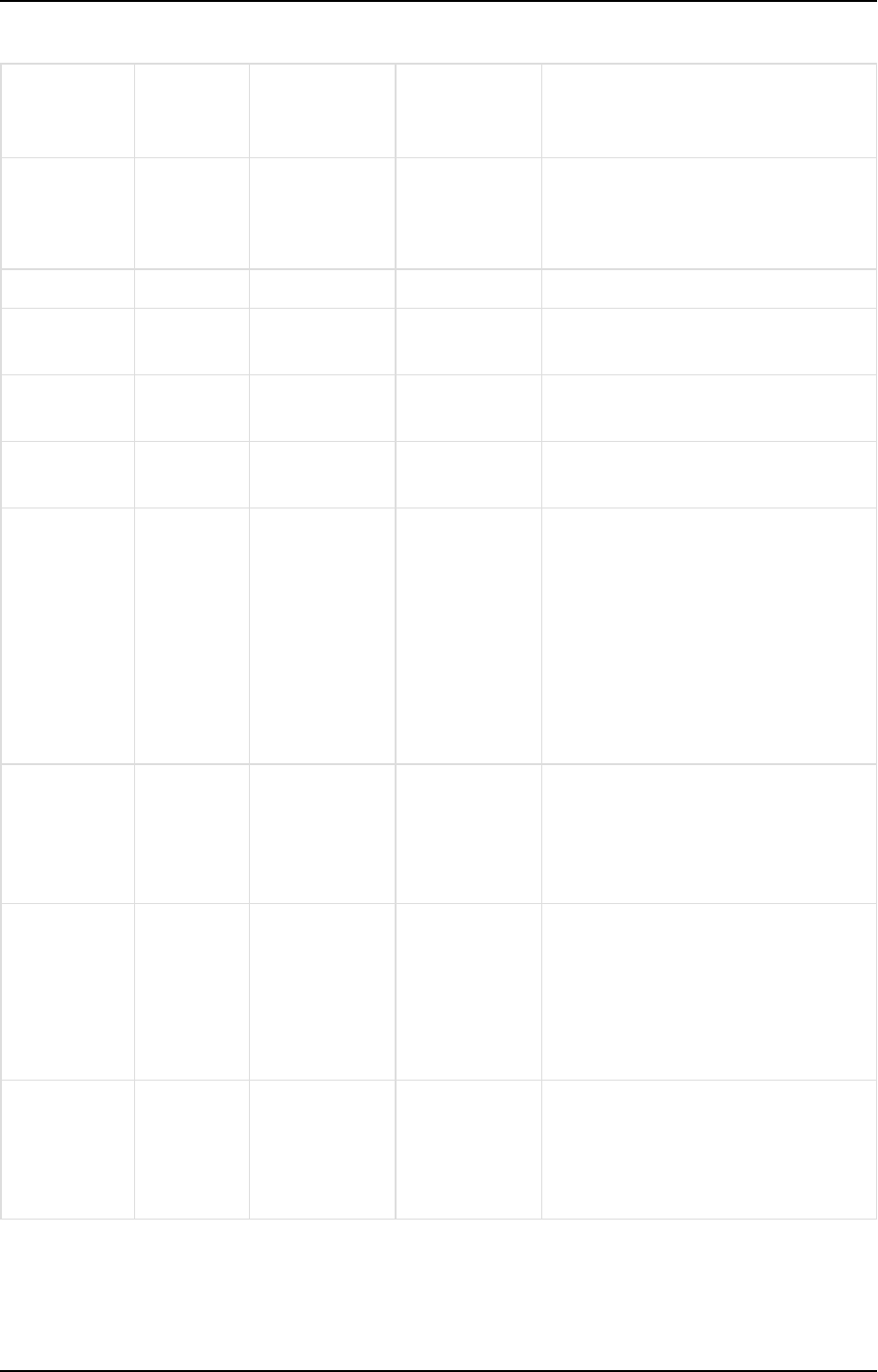

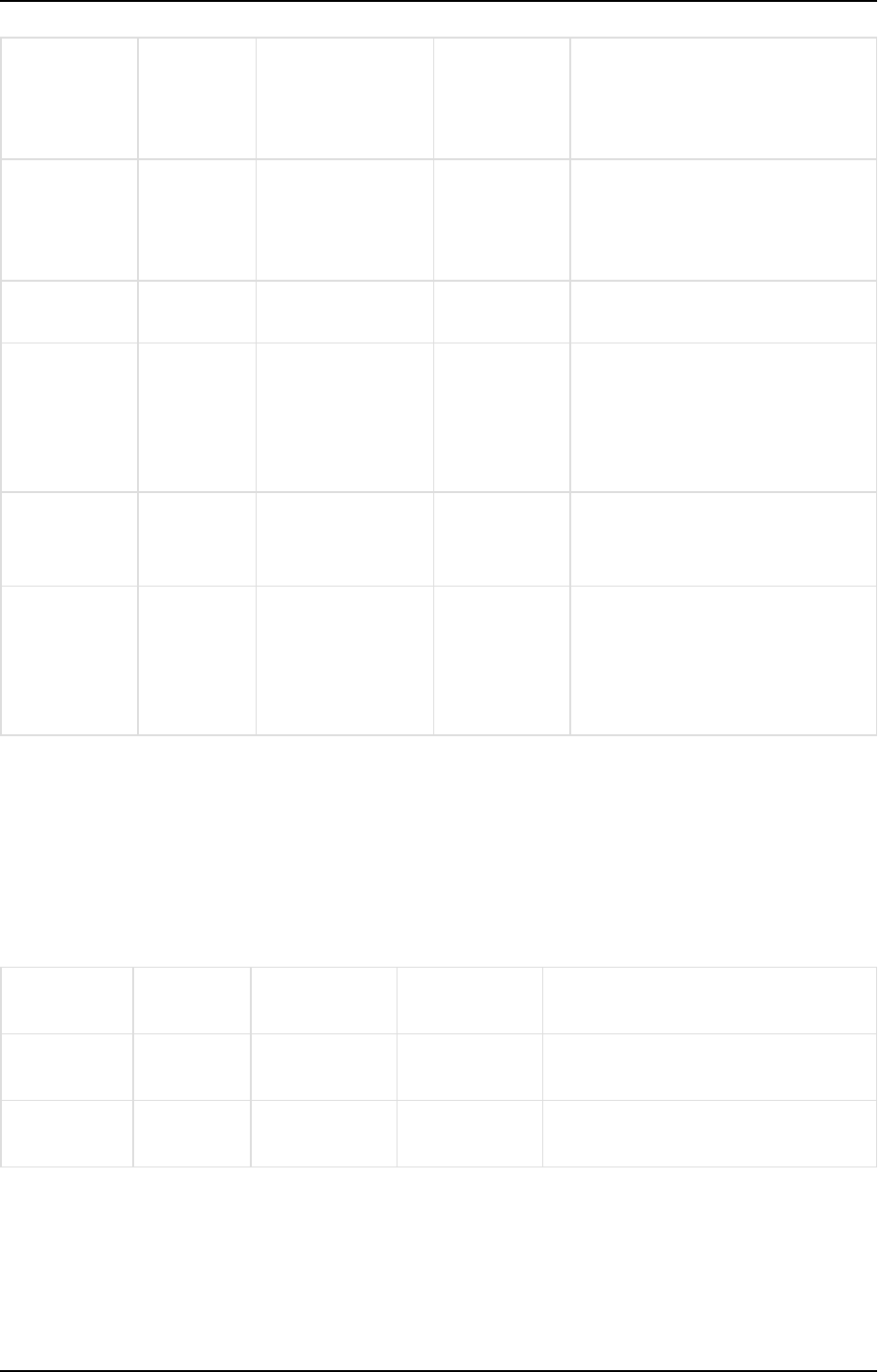

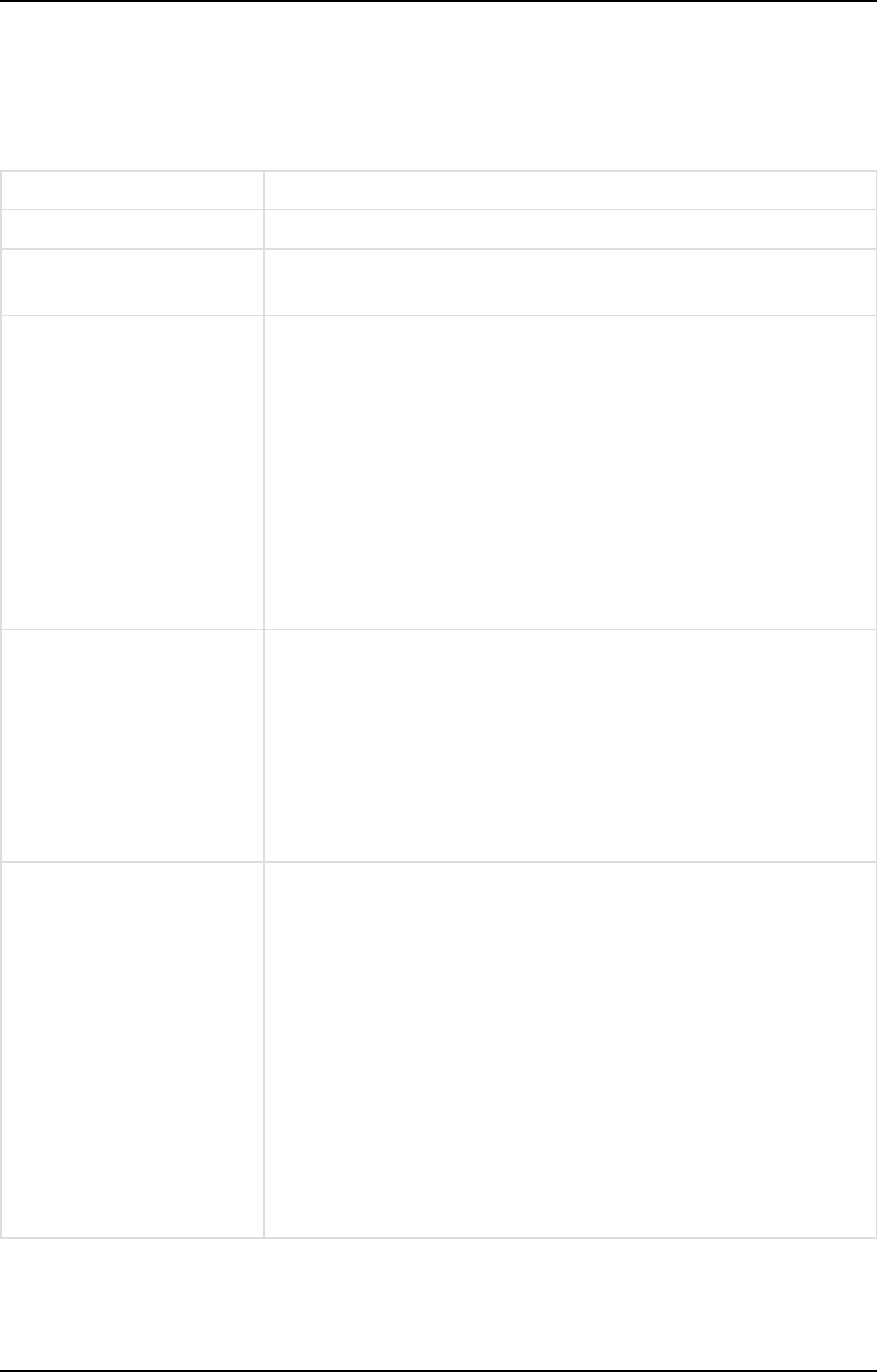

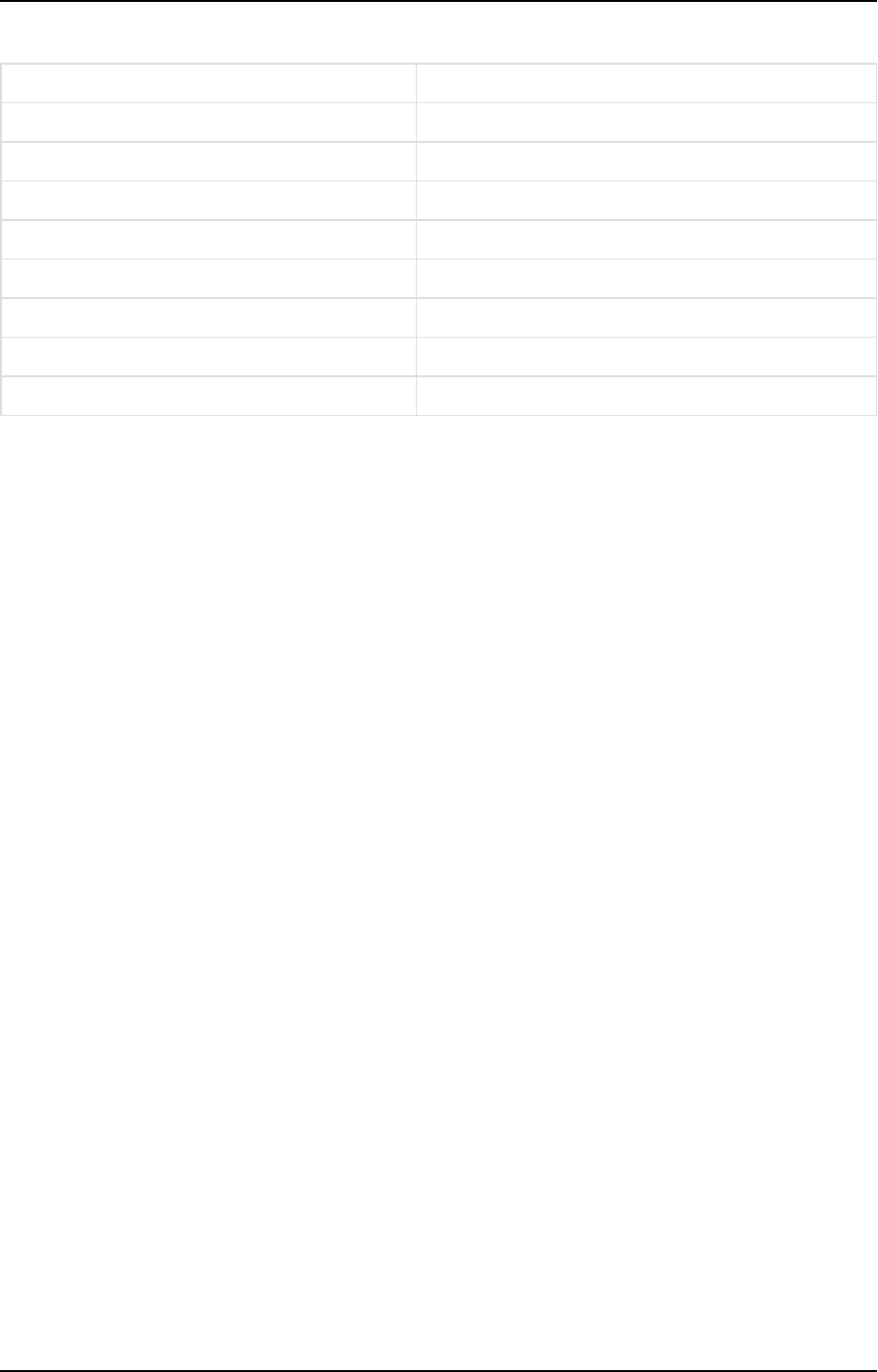

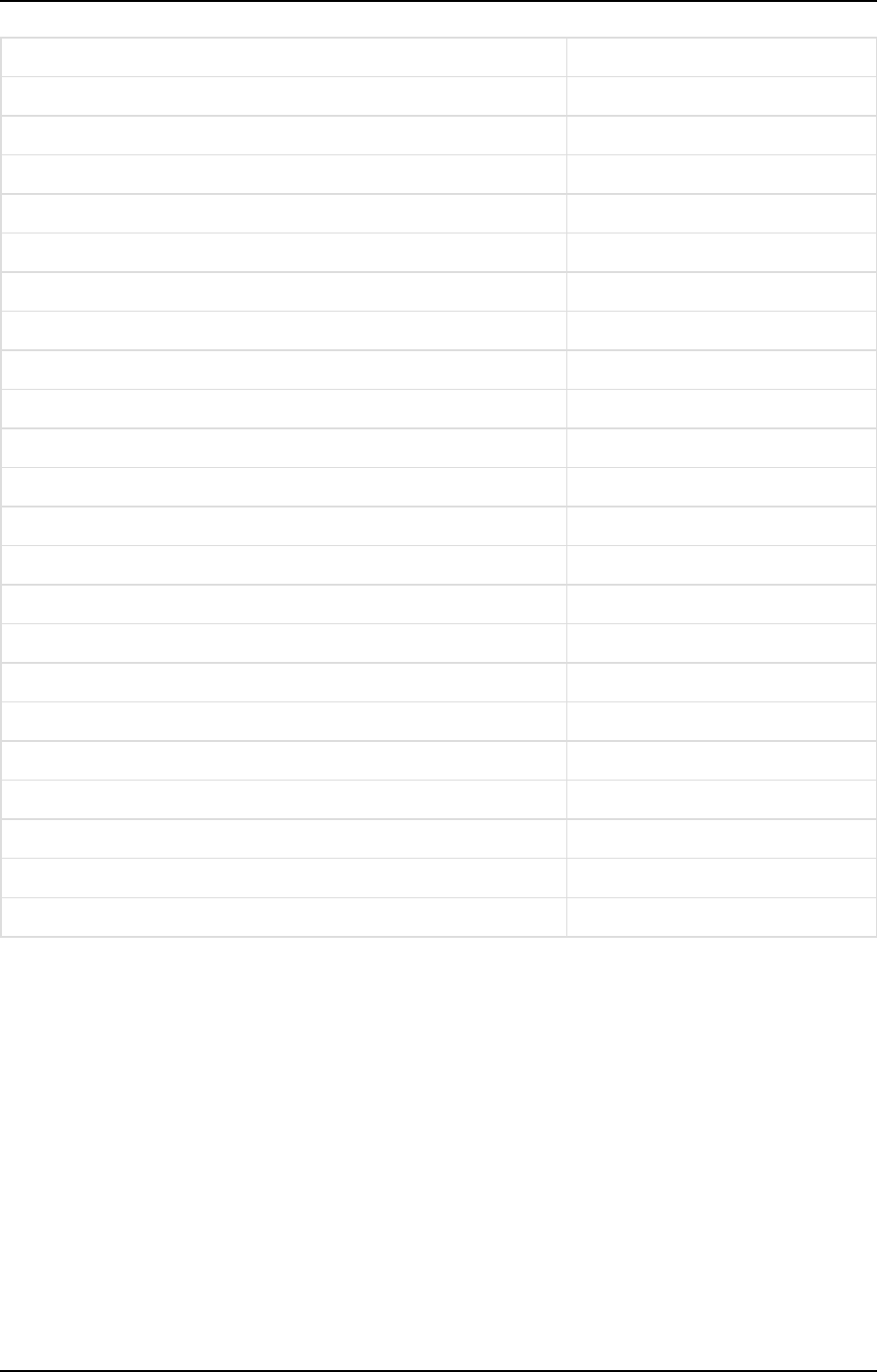

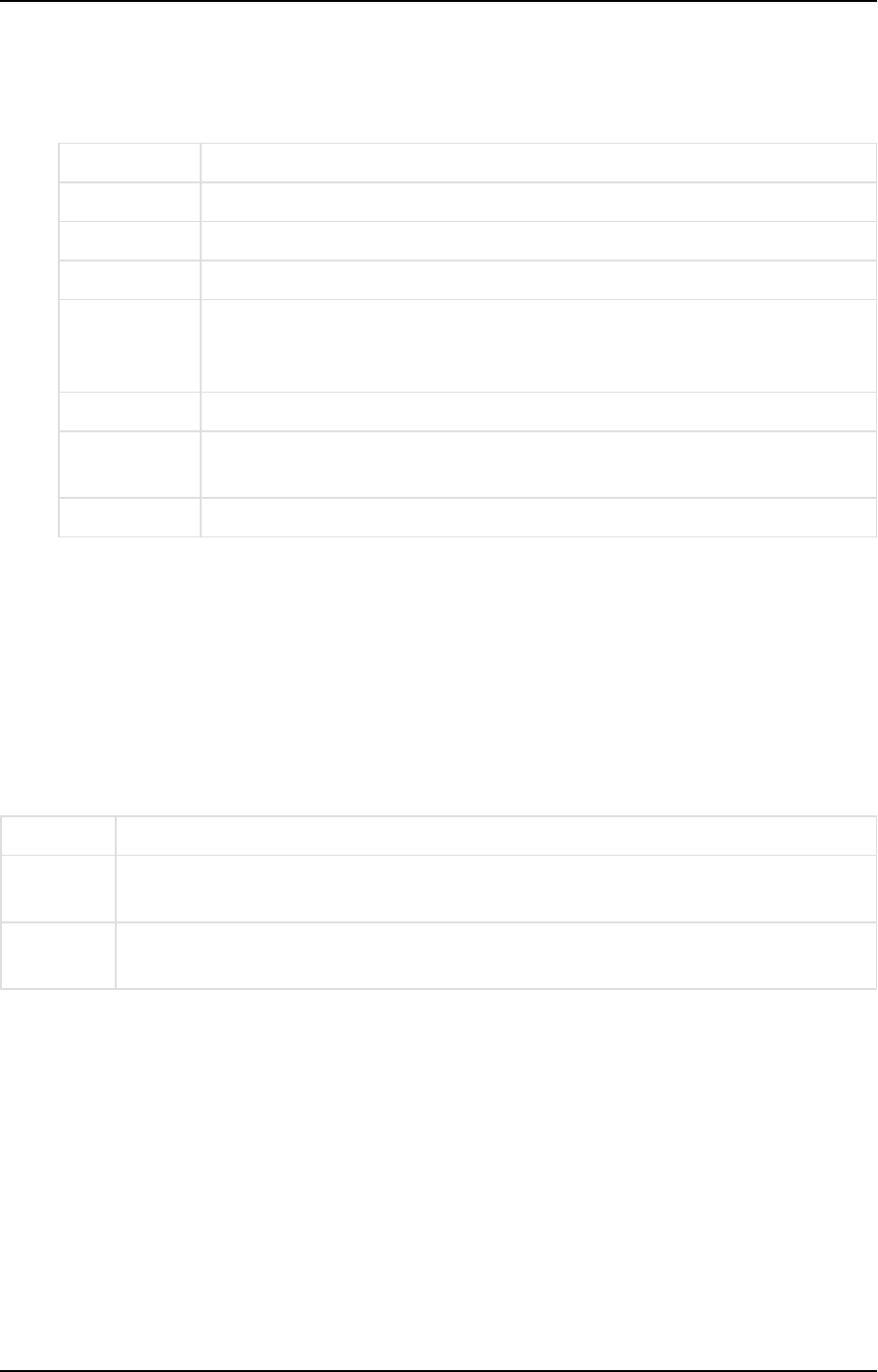

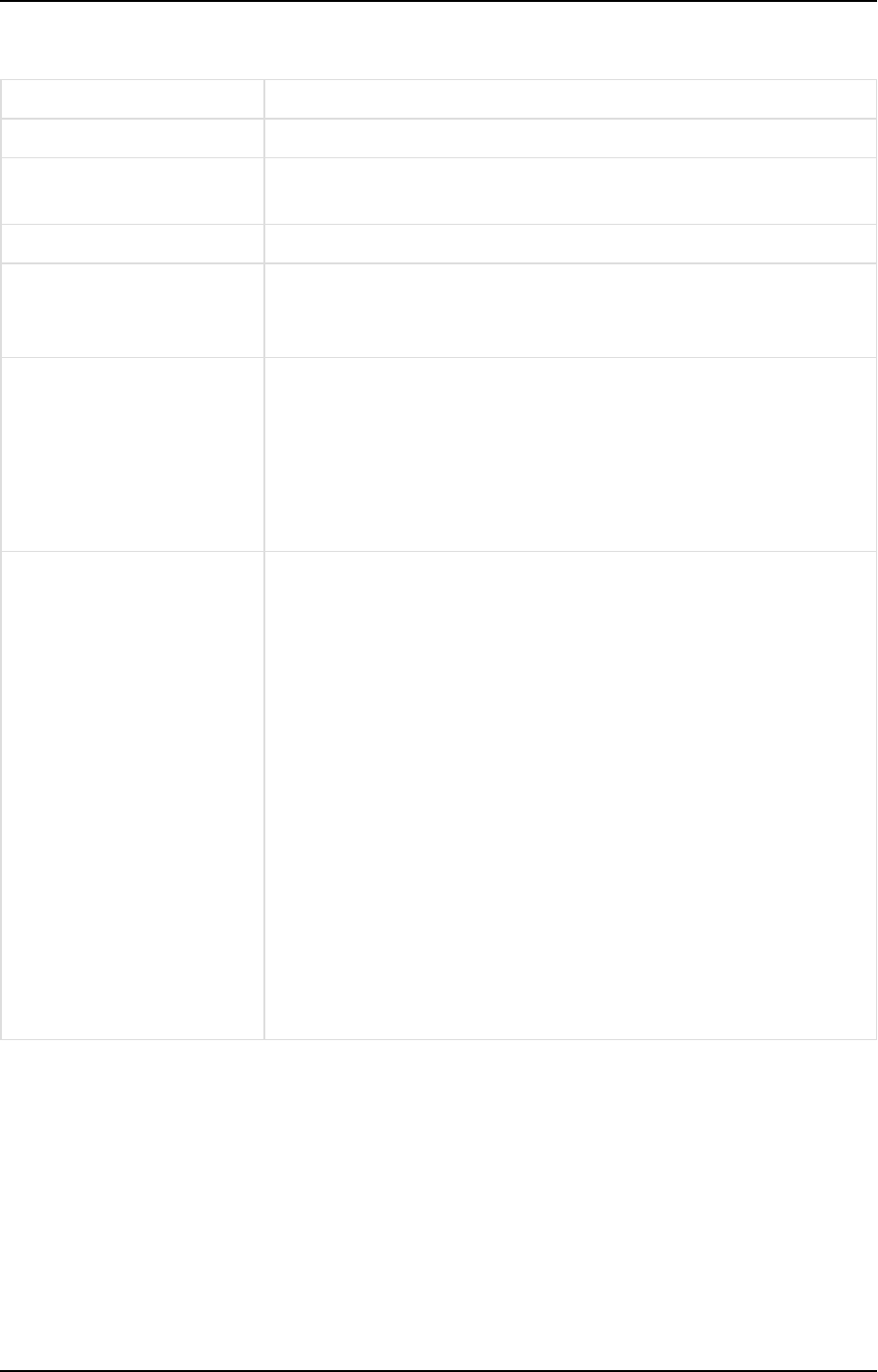

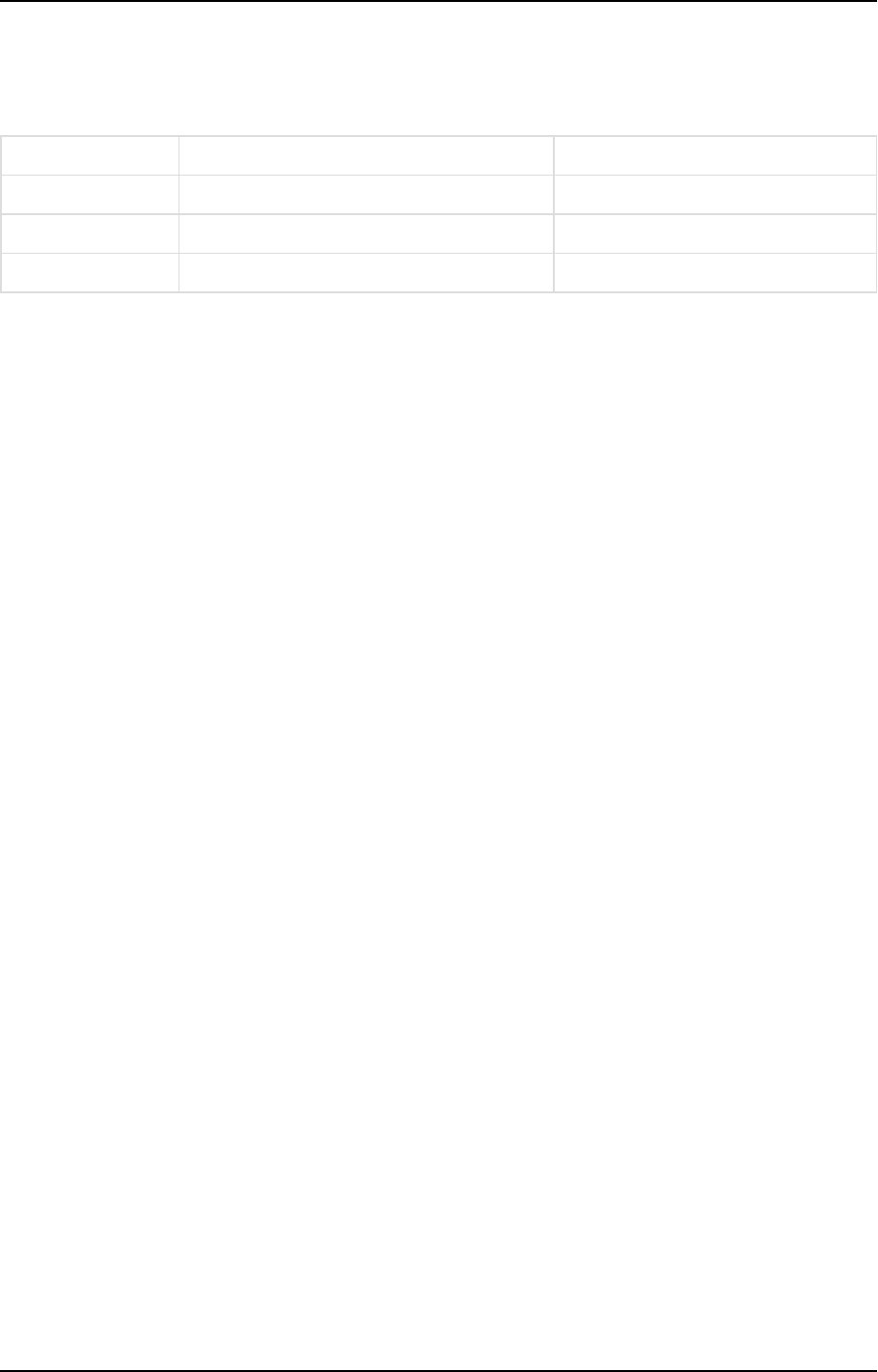

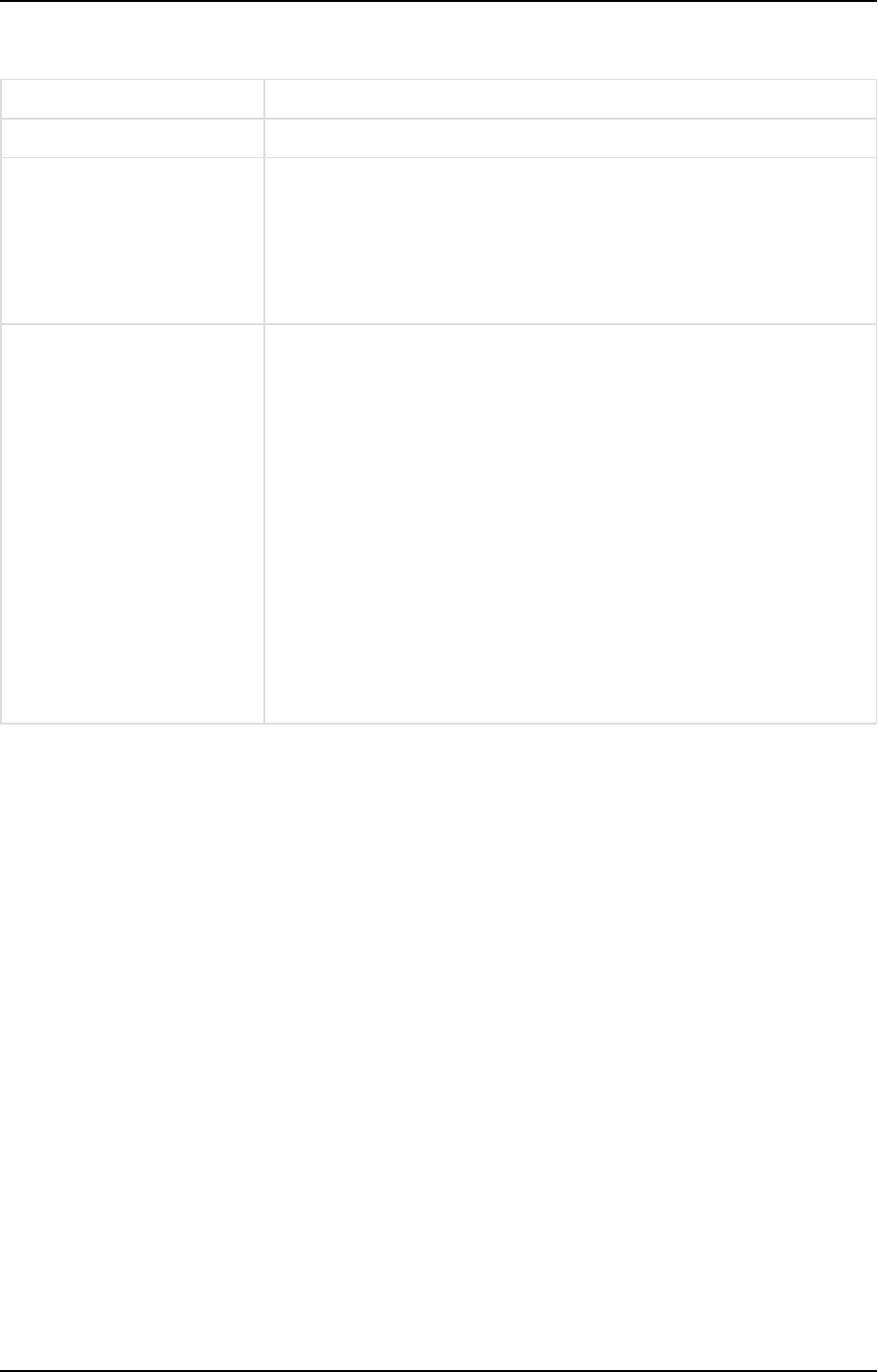

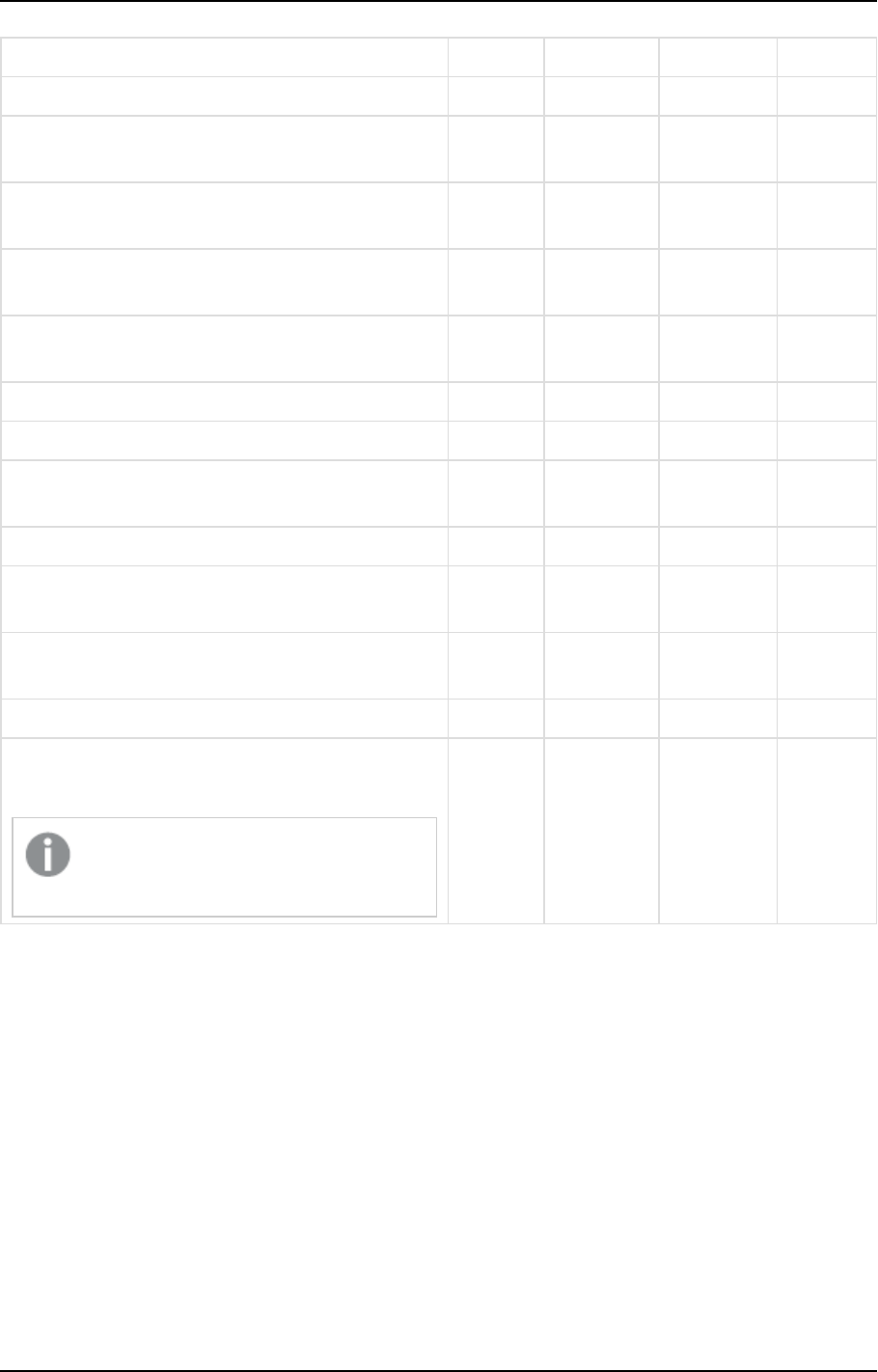

The following table lists the required hardware for varied deployment scales:

Hardware component requirements

| Component | Basic System | Large System | Extra-Large System |
|---|---|---|---|
| Processor (additional cores may improve performance when several ETL processes are running concurrently) | Quad core | Quad core base | 8-core base |
| Memory (additional memory may improve performance when several ETL processes are running concurrently) | 8 GB | 16 GB | 32 GB |
| Disk requirements (for all configurations, RAID is recommended for higher system availability in case of disk failure) | 100 GB SSD | 500 GB 10,000 RPM RAID | 500 GB 15,000 RPM RAID |
| Network | 1 Gb | 10 Gb | Two 10 Gb |

Software and network prerequisites

• Firewall ports 80/443 should be open on the Compose machine.

• .NET Framework 4.8 or later must be installed on the Compose machine.

• TLS 1.2 or later must be supported in the underlying OS.

On Windows Server 2012 R2, TLS 1.2 should be turned on by default. If it is not, refer to the Microsoft online help for instructions on how to turn it on.

For information on supported databases and browsers, see Support matrix (page 389).

Required permissions for the Compose service

Qlik Compose needs to be installed and run as Administrator.

Reserved system names

All database object names (queries, tables, columns, schemas, and indexes) starting with the prefix qlk__,

and regardless of case, are reserved for internal Compose use.

Thus, a table named qlK__MyTable or a column named QLK__MyColumn would not be permitted.
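The reserved-prefix rule above can be checked before an object is created. The following is a minimal Python sketch, not part of Compose; the function name `is_reserved_name` is hypothetical and chosen for illustration only.

```python
RESERVED_PREFIX = "qlk__"  # prefix stated in this guide


def is_reserved_name(name: str) -> bool:
    """Return True if a database object name starts with the reserved prefix.

    The guide states that the rule applies regardless of case, so the
    name is lowercased before the comparison.
    """
    return name.lower().startswith(RESERVED_PREFIX)


# The first two names are the examples given in the guide.
for candidate in ("qlK__MyTable", "QLK__MyColumn", "MyTable", "qlk_MyTable"):
    print(candidate, is_reserved_name(candidate))
```

Note that a name with a single underscore after `qlk` (such as `qlk_MyTable`) does not match the reserved prefix.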

3.2 Installing or upgrading Compose

The following topic describes how to install and upgrade Qlik Compose.

Installation Instructions

For best performance when using cloud-based databases (such as Snowflake) as your data source or data warehouse, it is strongly recommended to install Qlik Compose on a machine (such as Amazon EC2) located in the same region as your database instance.

To install Compose:

1. Run the Compose setup file (Qlik_Compose_<version.number>.exe).

The Qlik Compose setup wizard opens.

2. Click Next. Select I accept the terms of the license agreement and then click Next again.

3. Optionally change the installation directory and then click Next.

4. Click Next and then click Next again to start the installation.

5. When the installation completes, click Finish to exit the Wizard.

As part of the installation, a new Windows Service called Qlik Compose is created.

6. Open the Qlik Compose console as described in Accessing Qlik Compose (page 25).

When you first open the Qlik Compose Console, you will be prompted to register an

appropriate license. Register the license that you received from Qlik.

Upgrade Instructions

Depending on your existing Compose version, you may also need to perform additional version-specific upgrade tasks. It is therefore strongly recommended to review the release notes for the new version before upgrading.

1. Stop all Compose tasks and services.

2. After the Qlik Compose service has been stopped by the Installer, make sure that all child processes

are also stopped.

Compose runs a check to verify the termination of tasks and processes before running an

upgrade. If any processes are found to be still running, the installation will be aborted.

3. Run the Qlik Compose setup wizard.

4. Start all Compose tasks and services.

3.3 Installing and upgrading Compose silently

Compose can be installed silently (i.e. without requiring user interaction). This option is useful, for example, if

you need to install Compose on several machines throughout your organization.

Before commencing the installation, make sure that the prerequisites have been met. See Preparing

your system for Compose (page 20).

The following topics describe how to silently install, upgrade, and uninstall Compose:

• Silently installing Compose (page 23)

• Silently upgrading Compose (page 24)

• Silently uninstalling Compose (page 24)

Silently installing Compose

The installation process consists of two stages: creating a response file, and running the silent install.

Creating a response file

Before starting the installation, you need to create a response file.

To create the response file:

1. From the directory containing the Compose setup file, run the following command (note that this will also install Compose):

Qlik_Compose_<version.number>.exe /r /f1<my_response_file>

where:

<my_response_file> is the full path to the generated response file.

Example:

Qlik_Compose_<version.number>.exe /r /f1C:\Compose_install.iss

2. To change the default installation directory, open the response file in a text editor and edit the first

szDir value as necessary.

3. To change the default data directory, edit the third szDir value as necessary.

4. Save the file as <name>.iss, e.g. Compose_install_64.iss.

Running the silent install

To silently install Compose, open a command prompt and change the working directory to the directory containing the Compose setup file. Then issue the following command (where <my_response_file> is the path to the response file you created earlier):

Syntax:

<Compose_setup_file> /s /f1<my_response_file> [/f2<LOG_FILE>]

Example:

C:\>Qlik_Compose_<version.number>.exe /s /f1C:\temp\1\Compose_install.iss /f2C:\temp\1\silent_x64_install.log

If the installation was successful, the log file should contain the following rows:

[ResponseResult]

ResultCode=0
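When installing on several machines, the log check described above can be scripted. The following is a minimal Python sketch that assumes the log content has already been read into a string and that it follows the INI-style layout shown above; the function name `silent_install_succeeded` is hypothetical.

```python
import configparser


def silent_install_succeeded(log_text: str) -> bool:
    """Return True if the log contains ResultCode=0 under [ResponseResult]."""
    # Interpolation is disabled so that '%' characters in other log
    # values cannot cause parsing errors.
    parser = configparser.ConfigParser(interpolation=None)
    try:
        parser.read_string(log_text)
    except configparser.Error:
        # Text that is not valid INI is treated as a failed install.
        return False
    # fallback=None covers a missing section or a missing key.
    code = parser.get("ResponseResult", "ResultCode", fallback=None)
    return code is not None and code.strip() == "0"


sample_log = "[ResponseResult]\nResultCode=0\n"
print(silent_install_succeeded(sample_log))
```

Any non-zero result code, a missing section, or unparsable text is reported as a failure, which errs on the side of flagging a machine for manual review.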

Silently upgrading Compose

Before starting the silent upgrade:

1. Create a response file. See Step 1 of "Creating a Response File" in Silently installing Compose

(page 23)

2. It is strongly recommended to back up the Compose "Data" folder.

3. All tasks and Java processes must be terminated. Compose runs a check to verify the

termination of tasks and processes before running the upgrade. If any processes are found to

be still running, the upgrade will be aborted.

To silently upgrade Compose:

1. Open a command prompt and change the working directory to the directory containing the Compose

setup file.

2. Issue the following command (where <my_response_file> is the path to the response file you created

earlier):

Syntax:

<COMPOSE_KIT> /s /f1<my_response_file> [/f2<LOG_FILE>]

Example:

C:\>Qlik_Compose_<version.number>.exe /s /f1C:\temp\1\Compose_upgrade.iss /f2C:\temp\1\silent_x64_up.log

If the upgrade was successful, the log file should contain the following rows:

[ResponseResult]

ResultCode=0

Silently uninstalling Compose

Silently uninstalling Compose also consists of creating a response file and running the silent uninstall. The process is the same as for silently installing Compose. For instructions, see Silently installing Compose (page 23).

3.4 Determining the required number of database connections

As a rule of thumb, the higher the number of database connections opened for Compose, the more tables

Compose will be able to load in parallel. It is therefore recommended to open as many database connections

as possible for Compose. However, if the number of database connections that can be opened for Compose is

limited, you can calculate the minimum number of required connections as described below.

To determine the number of required connections:

1. For each task, determine the number of connections it can use during runtime. This value should be

specified in the Advanced tab in the Manage Data Warehouse Tasks Settings window (Data Warehouse

projects) or in the Manage Storage Tasks Settings window (Data Lake projects). When determining the

number of required connections, various factors need to be taken into account including the number

of tables, the size of the tables, and the volume of data. It is therefore recommended to determine the

required number of connections in a Test environment.

2. Calculate the number of connections needed by all tasks that run in parallel. For example, in a Data

Lake project, if three data storage tasks run in parallel, and each task requires 5 connections, then the

number of required connections will be 15.

Similarly, in a Data Warehouse project, if a workflow contains two data warehouse tasks that run in parallel and each task requires 5 connections, then the minimum number of required connections will be 10. However, if the same workflow also contains two data mart tasks (that run in parallel) and the sum of their connections is 20, then the minimum number of required connections will be 20 (the larger of the two values).

3. Factor in the connections required by the Compose Console. To do this, multiply the maximum

number of concurrent Compose users by three and then add to the sum of Step 2 above. So, if the

number of required connections is 20 and the number of concurrent Compose users is 4, then the total

would be:

20 + 12 = 32
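The calculation in the steps above can be sketched in Python as follows. This is an illustration only: the function name `required_connections` is hypothetical, and the sketch assumes that each group of parallel tasks runs after the previous group has finished, so that only the largest group determines the task-related requirement.

```python
def required_connections(task_groups, concurrent_users, per_user=3):
    """Estimate the minimum number of database connections.

    task_groups: a list of lists. Each inner list holds the connection
        counts of tasks that run in parallel with each other. Groups are
        assumed to run one after another (an assumption of this sketch).
    concurrent_users: maximum number of concurrent Compose Console users.
    per_user: connections per Console user (three, as stated in Step 3).
    """
    # Step 2: the busiest group of parallel tasks sets the task requirement.
    peak_task_connections = max((sum(group) for group in task_groups), default=0)
    # Step 3: add the connections needed by the Compose Console.
    console_connections = concurrent_users * per_user
    return peak_task_connections + console_connections


# Two data warehouse tasks (5 connections each), followed by two data mart
# tasks whose connections sum to 20. The guide only gives the sum; the
# 10/10 split is assumed here.
workflow = [[5, 5], [10, 10]]
print(required_connections(workflow, concurrent_users=4))  # 20 + 12 = 32
```

For the Data Lake example in Step 2 (three parallel tasks with 5 connections each), `required_connections([[5, 5, 5]], concurrent_users=0)` gives the task-only figure of 15.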

3.5 Accessing Qlik Compose

You can use a Web browser to access the Qlik Compose Console from any computer in your network. For

information on supported browsers, see Preparing your system for Compose (page 20).

The person logged in to the computer where you are accessing the Console must be an authorized

Qlik Compose user. For more information, see Managing user permissions (page 377).

To access the Qlik Compose Console:

1. To access the Qlik Compose Console from the machine on which it is installed, select All Programs >

Qlik Compose > Qlik Compose Console from the Windows Start menu. To access the Qlik Compose

Console from a remote browser, type the following address in the address bar of your Web browser:

https://<ComputerName>/qlikcompose/

Where <ComputerName> is the name or IP address of the computer on which Compose is installed.

2. If no server certificate is installed on the Compose machine, a page stating that the connection is

untrusted will be displayed. This is because when Compose detects that no server certificate is

installed, it installs a self-signed certificate. Since the browser has no way of knowing whether the

certificate is safe, it displays this page. For more information, see Setting up HTTPS for the Compose

console (page 26).

3. When prompted for your password, enter your domain username and password.

4 Security considerations

During normal operation, Qlik Compose needs to access databases and storage systems for the purpose of

reading and writing data and metadata.

This section describes the procedure you should follow to ensure that any data handled by Qlik Compose will

be completely secure.

In this section:

• Setting up HTTPS for the Compose console (page 26)

• Setting the hostname and changing the HTTPS port (page 30)

• Setting up HSTS on Compose (page 31)

• Setting Single Sign-On Authentication with Kerberos (page 32)

• Changing the master user password (page 32)

4.1 Setting up HTTPS for the Compose console

Industry-standard security practices dictate that the web user interface for enterprise products must use secure

HTTP (HTTPS). Qlik Compose enforces the use of HTTPS and will not work if HTTPS is configured incorrectly.

As Compose uses the built-in HTTPS support in Windows, it relies on the proper setup of the Windows

machine it runs on to offer HTTPS access. In most organizations, the IT security group is responsible for

generating and installing the SSL server certificates required to offer HTTPS. It is strongly recommended that

the machine on which Compose is installed already has a valid SSL server certificate installed and bound to

the default HTTPS port (443).

Checking if an SSL certificate is installed

To check whether an SSL certificate is installed, you can use the following command:

netsh http show sslcert | findstr /c:":443 "

If an SSL certificate is installed, the output should look like this:

netsh http show sslcert | findstr /c:":443 "

IP:port : 192.168.1.13:443

IP:port : 192.168.1.11:443

IP:port : [fe80::285d:599c:4a55:1092%11]:443

IP:port : [fe80::3d0e:fb1c:f6c3:bc52%23]:443

With a valid SSL certificate installed, the Qlik Compose web user interface will automatically be available for

secure access from a web browser using the following URL:

https://<ComputerName>/qlikcompose/

Where <ComputerName> is the name or IP address of the computer on which Compose is installed.
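If you need to run the certificate check on many machines, the command output can be inspected with a short script. The following Python sketch only parses text that has already been captured from the `netsh http show sslcert` command; the function name `has_binding_on_port` is hypothetical, and the sketch assumes the English `IP:port` label shown in the sample output above.

```python
import re

# Matches lines such as "IP:port : 192.168.1.13:443" and
# "IP:port : [fe80::285d:599c:4a55:1092%11]:443".
_BINDING_LINE = re.compile(r"^\s*IP:port\s*:\s*\S+:(\d+)\s*$", re.IGNORECASE)


def has_binding_on_port(netsh_output: str, port: int = 443) -> bool:
    """Return True if any IP:port line in the output ends with the given port."""
    for line in netsh_output.splitlines():
        match = _BINDING_LINE.match(line)
        if match and int(match.group(1)) == port:
            return True
    return False


sample_output = """\
IP:port : 192.168.1.13:443
IP:port : 192.168.1.11:443
IP:port : [fe80::285d:599c:4a55:1092%11]:443
IP:port : [fe80::3d0e:fb1c:f6c3:bc52%23]:443
"""
print(has_binding_on_port(sample_output))
```

A result of True only indicates that a certificate is bound to the port. It does not indicate whether the certificate is valid or trusted.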

Using the self-signed certificate

Due to the way the HTTPS protocol works, there is no way for Compose to automatically provide and install a

valid SSL server certificate. Still, in the event that no SSL server certificate is installed, Compose automatically

generates and installs a self-signed SSL server certificate (as a temporary measure). This certificate is

generated on the Compose machine and cannot be exported or used elsewhere.

It should be noted that browsers do not consider the certificate to be valid because it was not signed by a

trusted certificate authority (CA). When connecting with a browser to a server that uses a self-signed

certificate, a warning page is shown such as this one in Chrome:

Or this one in Firefox:

The warning page informs you that the certificate was signed by an unknown certificate authority. All

browsers display a similar page when presented with a self-signed certificate. If you know that the self-signed

certificate is from a trusted organization, then you can instruct the browser to trust the certificate and allow

the connection. Instructions on how to trust the certificate vary between browsers and even between different

versions of the same browser. If necessary, refer to the help for your specific browser.

Some corporate security policies prohibit the use of self-signed certificates. In such cases, it is incumbent upon the IT Security department to provide and install the appropriate SSL server certificate (as is the practice with other Windows products such as IIS and SharePoint). If a self-signed certificate was installed and needs to be removed, then the following command can be used:

composeCtl.exe certificate clean

Note that after the self-signed certificate is deleted, connections to the Qlik Compose machine will not be possible until a valid server certificate is installed. Should you want to generate a new self-signed certificate (to replace the deleted certificate), simply restart the Qlik Compose service.

Replacing the self-signed certificate on Windows

The instructions below are intended for organizations who wish to replace the self-signed certificate

generated by the Compose Server on Windows with their own certificate. The process, which is described

below, involves removing the self-signed certificate and then importing the new certificate.

See also Setting up HTTPS for the Compose console (page 26).

Before starting, make sure that the following prerequisites have been met:

• The replacement certificate must be a correctly configured SSL PFX file containing both the private key and the certificate.

• The common name field in the certificate must match the name browsers will use to access the machine.

To remove the self-signed certificate created by Qlik Compose:

1. Stop the Qlik Compose service.

2. Open a command prompt (using the "Run as administrator" option) and change the path to the

Compose bin directory. The default path is C:\Program Files\Qlik\Compose\bin.

3. Run the following command:

composeCtl.exe certificate clean

To import your own certificate:

1. Run mmc.exe to open the Microsoft Management Console.

2. From the File menu, select Add/Remove Snap-in. The Add or Remove Snap-ins window opens.

3. In the left pane, double-click Certificates. The Certificates snap-in wizard opens.

4. Select Computer account and then click Next.

5. In the Select Computer screen, make sure that Local computer is selected and then click Finish.

6. Click OK to close the Add or Remove Snap-ins window.

7. In the left pane, expand the Certificates folder. Then, right-click the Personal folder and select All

Tasks > Import.

8. In the File to Import screen, select your PFX certificate file. Note that by default the Open window

displays CER files. In order to see your PFX files, you need to select Personal Information Exchange

from the drop-down list in the bottom right of the window.

9. Click Next and enter the private key password.

10. Continue clicking Next until you reach the Completing the Certificate Import Wizard screen. Then

click Finish to exit the wizard.

11. In the Personal > Certificates folder, double-click the newly imported certificate. The Certificate

window opens.

12. Scroll down the Details tab until you see the Thumbprint details and copy them to the clipboard.

13. Open a command prompt and run the following commands:

Syntax:

netsh http add sslcert ipport=0.0.0.0:443 certhash=[YOUR_CERTIFICATE_THUMBPRINT_WITHOUT_SPACES] appid={4dc3e181-e14b-4a21-b022-59fc669b0914}

Example:

netsh http add sslcert ipport=0.0.0.0:443 certhash=5f6eccba751a75120cd0117389248ef3ca716e61 appid={4dc3e181-e14b-4a21-b022-59fc669b0914}

Syntax:

netsh http add sslcert ipport=[::]:443 certhash=[YOUR_CERTIFICATE_THUMBPRINT_WITHOUT_SPACES] appid={4dc3e181-e14b-4a21-b022-59fc669b0914}

Example:

netsh http add sslcert ipport=[::]:443 certhash=5f6eccba751a75120cd0117389248ef3ca716e61 appid={4dc3e181-e14b-4a21-b022-59fc669b0914}

14. Close the command prompt and Microsoft Management Console.

15. Start the Qlik Compose service.
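The thumbprint copied from the Certificate window usually contains spaces, which must be removed before it is used in the netsh commands shown in step 13. The following Python sketch shows one way to clean the value and assemble both commands as text. The function names are hypothetical, the script does not run netsh itself, and the 40-character check assumes a SHA-1 thumbprint such as the one in the example above.

```python
import re

APP_ID = "{4dc3e181-e14b-4a21-b022-59fc669b0914}"  # appid value shown in this guide
IP_PORTS = ("0.0.0.0:443", "[::]:443")  # IPv4 and IPv6 bindings from step 13


def normalize_thumbprint(raw: str) -> str:
    """Remove spaces and other non-alphanumeric characters from a thumbprint."""
    cleaned = "".join(ch for ch in raw if ch.isalnum())
    # Assumption: the thumbprint is a SHA-1 hash, i.e. 40 hexadecimal digits.
    if not re.fullmatch(r"[0-9A-Fa-f]{40}", cleaned):
        raise ValueError("thumbprint must contain exactly 40 hexadecimal digits")
    return cleaned


def build_netsh_commands(raw_thumbprint: str) -> list[str]:
    """Return the two netsh command lines for the given thumbprint."""
    thumbprint = normalize_thumbprint(raw_thumbprint)
    return [
        f"netsh http add sslcert ipport={ipport} certhash={thumbprint} appid={APP_ID}"
        for ipport in IP_PORTS
    ]


copied = "5f 6e cc ba 75 1a 75 12 0c d0 11 73 89 24 8e f3 ca 71 6e 61"
for command in build_netsh_commands(copied):
    print(command)
```

The printed lines can then be pasted into the administrator command prompt.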

4.2 Setting the hostname and changing the HTTPS port

After installing Qlik Compose, you can use the Compose CLI to set the hostname and HTTPS port for accessing

the Qlik Compose server machine.

Under normal circumstances, you should not need to set the hostname. However, on some systems,

connecting using HTTPS redirects to localhost. If this occurs, set the hostname of the Compose machine by

running the command shown below.

To set the hostname:

Run the following command from the Compose bin directory:

Command syntax

ComposeCtl.exe configuration set --address address

Where:

--address is the hostname of the Compose server machine.

Example

ComposeCtl.exe configuration set --address MyHostName

To change the HTTPS port:

Run the following command from the Compose bin directory:

Command syntax

ComposeCtl.exe configuration set --https_port port_number

Where:

--https_port is the HTTPS port number of the Compose server machine. The default HTTPS port is 443.

Example

ComposeCtl.exe configuration set --https_port 442

4.3 Setting up HSTS on Compose

HSTS is a web security policy mechanism that helps to protect websites against man-in-the-middle attacks such as protocol downgrade attacks and cookie hijacking. It allows web servers to declare that web browsers (or other complying user agents) should automatically interact with them using only HTTPS connections, which provide Transport Layer Security (TLS/SSL).

You can force the Compose Web UI and/or the Compose REST API connections to use HSTS (HTTP Strict

Transport Security). To do this, run the commands described below.

All commands should be run as Admin from the product bin folder.

Enabling HSTS

Command syntax

ComposeCtl.exe configuration set --static_http_headers header_list --rest_http_headers header_list

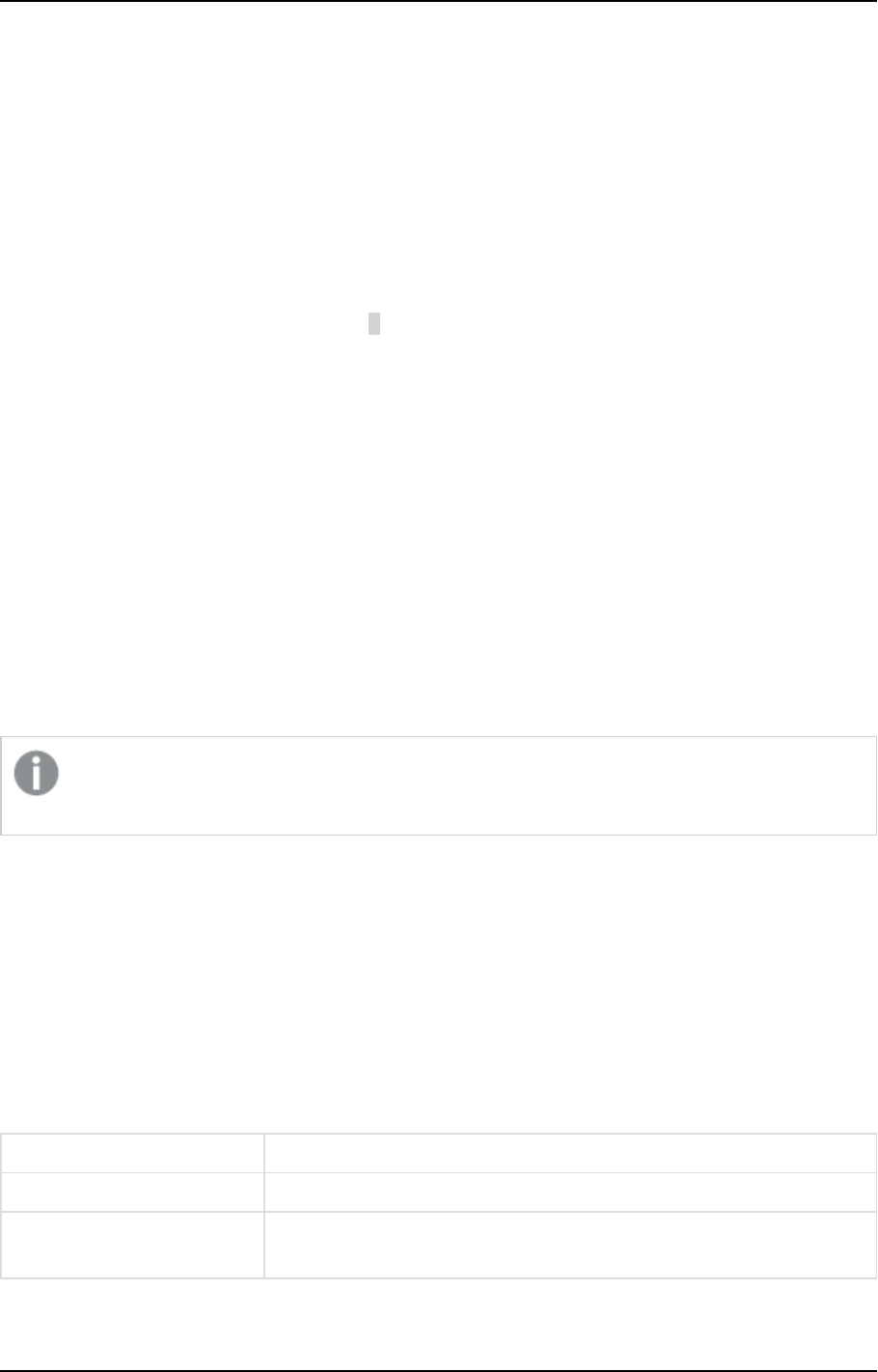

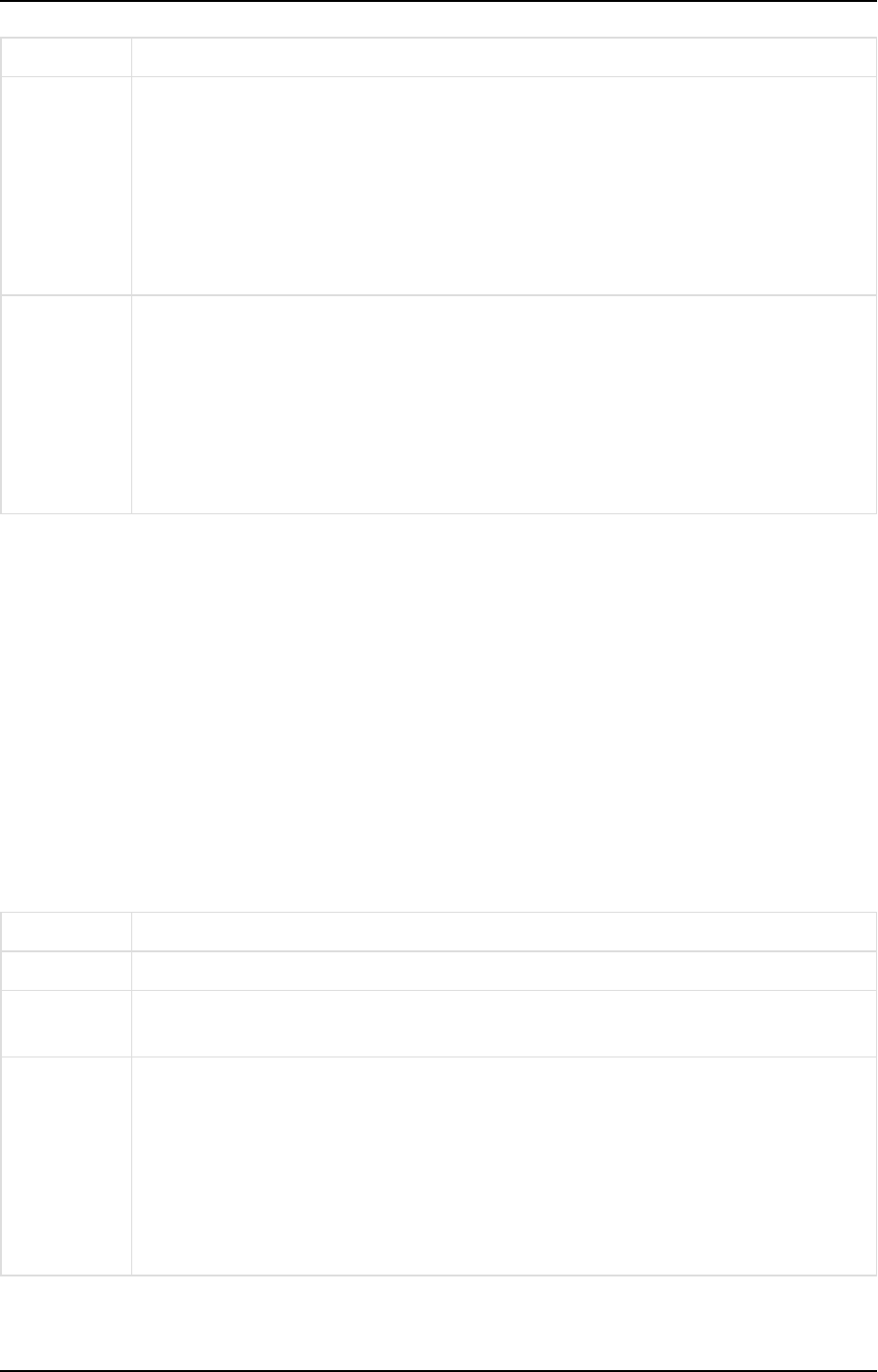

Parameters

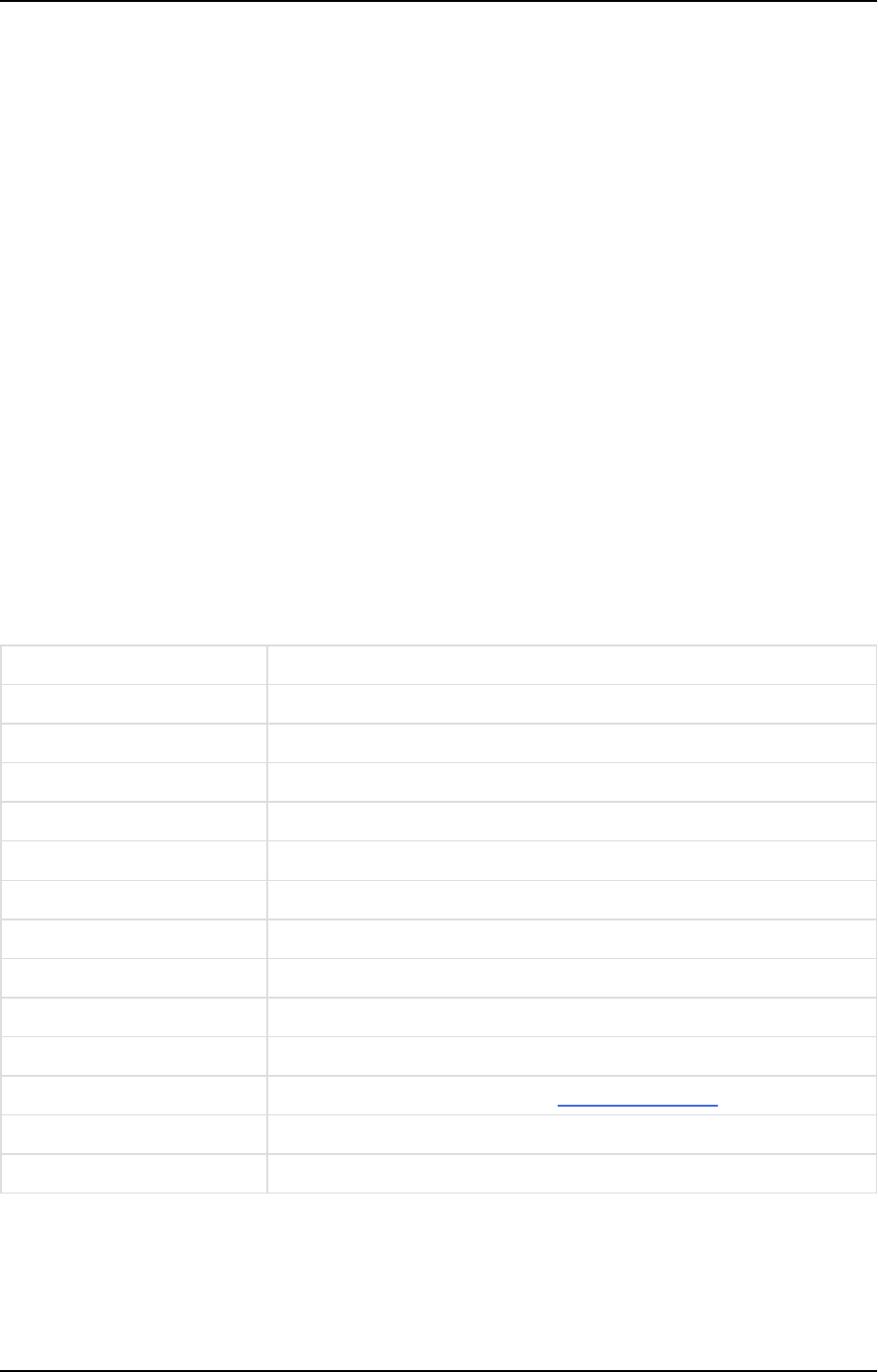

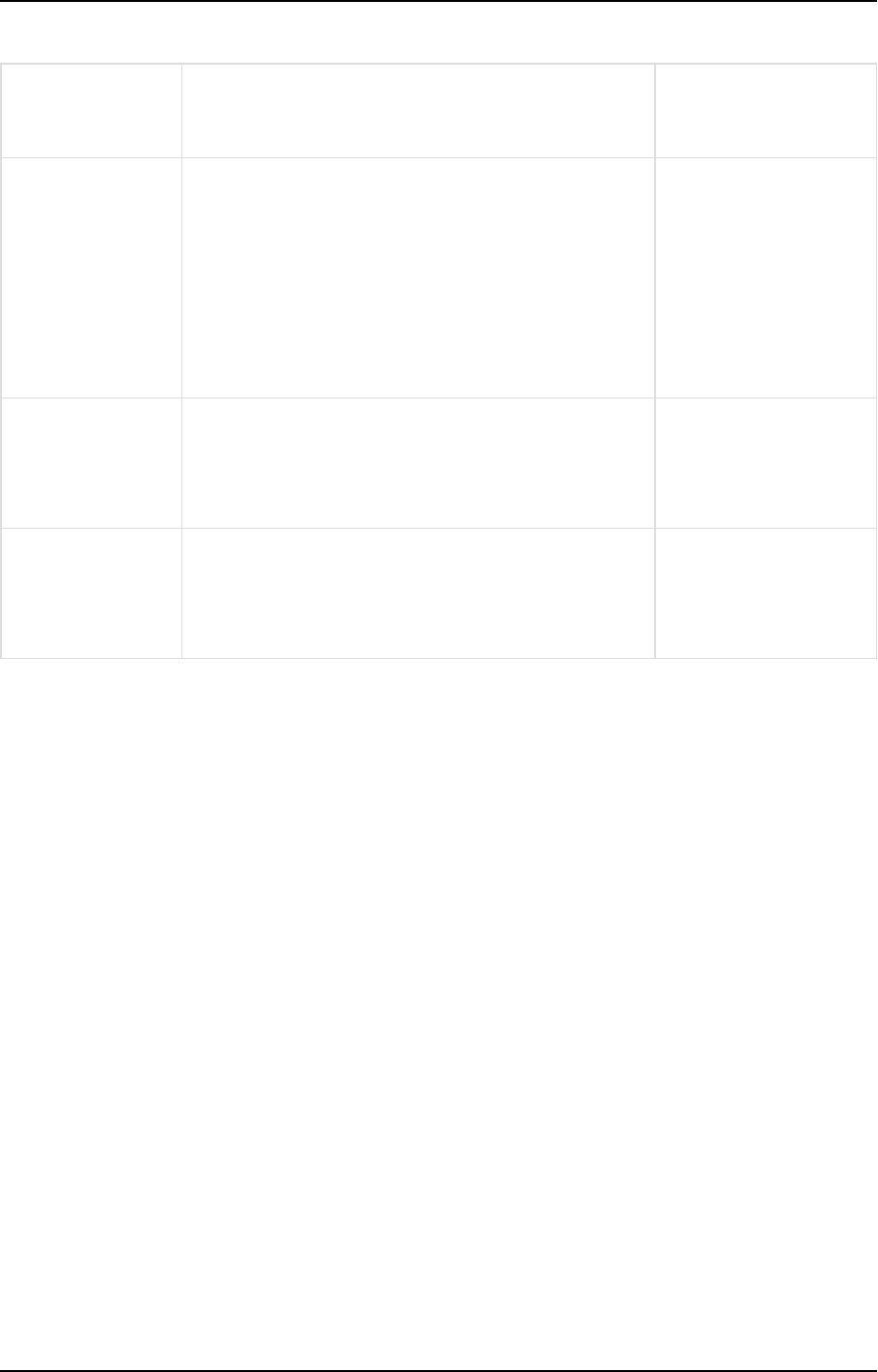

| Parameter | Description |
|---|---|
| --static_http_headers | The headers required to connect to the Compose Web UI. |
| --rest_http_headers | The headers required to connect using the API. |

Headers should be specified using the following format:

ComposeCtl.exe configuration set --static_http_headers "header1:value1" "header2:value2" --rest_http_headers "header1:value1" "header2:value2"

Example

ComposeCtl.exe configuration set --static_http_headers "Strict-Transport-Security:max-age=31536000; includeSubDomains;" --rest_http_headers "Strict-Transport-Security:max-age=31536000; includeSubDomains;"
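The max-age value in the example above is expressed in seconds (31536000 seconds is 365 days). The following Python sketch shows how the header value and the command arguments could be assembled for a different duration. The function names are hypothetical, and the sketch only builds the argument list; it does not run ComposeCtl.exe.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def hsts_header(days: int = 365, include_subdomains: bool = True) -> str:
    """Build a header in the "name:value" format used by the command above."""
    value = f"max-age={days * SECONDS_PER_DAY};"
    if include_subdomains:
        value += " includeSubDomains;"
    return f"Strict-Transport-Security:{value}"


def build_hsts_arguments(days: int = 365) -> list[str]:
    """Return the argument list that applies the header to the Web UI and the REST API."""
    header = hsts_header(days)
    return [
        "ComposeCtl.exe", "configuration", "set",
        "--static_http_headers", header,
        "--rest_http_headers", header,
    ]


print(hsts_header())
print(build_hsts_arguments())
```

Keeping each header as a single list element avoids quoting problems caused by the space and semicolons in the header value if the list is later passed to a process launcher.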

Disabling HSTS

You can also revert to regular HTTPS connections.

Command syntax

ComposeCtl.exe configuration set --static_http_headers ""|--rest_http_headers ""

Parameters

| Parameter | Description |
|---|---|
| --static_http_headers | Use this parameter to revert the headers required to connect to the Compose Web UI. |
| --rest_http_headers | Use this parameter to revert the headers required to connect using the API. |

Example

Disable static_http_headers

ComposeCtl.exe configuration set --static_http_headers ""

Disable rest_http_headers

ComposeCtl.exe configuration set --rest_http_headers ""

4.4 Setting Single Sign-On Authentication with Kerberos

Kerberos is an enterprise authentication protocol that uses the concept of tickets and three-way

authentication to enable users and computers to identify themselves and secure access to resources.

Using Kerberos SSO, users can seamlessly log into Compose and administrators can completely externalize

and centrally manage users or group memberships using their existing Kerberos infrastructure.

To set the authentication method to single sign-on with Kerberos, run:

ComposeCtl.exe configuration set --authentication_method sso-kerberos

To revert the authentication method to standard single sign-on, run:

ComposeCtl.exe configuration set --authentication_method sso

If the Kerberos protocol fails, Compose will try to log in using NTLM authentication. If NTLM

authentication is not enabled in the system, an error will be returned.

4.5 Changing the master user password

All passwords are encrypted using a one-time randomly generated master key. The master key is stored

automatically in the root repository of Compose (<product_dir>\data\projects\GlobalRepo.sqlite).

The master key is encrypted by a user key, which in turn, is derived from a master password entered by the

user. By default, the Master User Password is randomly generated by Compose. The best practice, however, is

to change the Master User Password, as this will allow Compose projects and configuration settings to be

imported to another machine without needing to re-enter the project credentials.

It may also be convenient to use the same Master User Password within a trusted environment. In other

words, if the same administrators control both the production and the testing environments, using the same

Master User Password in both environments will facilitate the transfer of projects with credentials between

the testing and production environments.

The user key is stored in the muk.dat file located in <product_dir>\data\.

The Master User Password must be a minimum of 32 characters. You can either use your own password or run the genpassword utility described below to generate a password for you. Note also that the password can only contain alphanumeric characters (i.e. it cannot contain special keyboard characters such as # or @).
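If you choose your own password, the two rules above can be checked before running the CLI. The following Python sketch is an illustration only; the function name `is_valid_master_password` is hypothetical, and the sketch assumes that "alphanumeric" means the ASCII letters A-Z, a-z and the digits 0-9.

```python
import string

MIN_LENGTH = 32  # minimum length stated in this guide
# Assumption: only ASCII letters and digits count as alphanumeric.
ALLOWED_CHARACTERS = set(string.ascii_letters + string.digits)


def is_valid_master_password(password: str) -> bool:
    """Return True if the password meets the length and character rules."""
    long_enough = len(password) >= MIN_LENGTH
    only_allowed = all(ch in ALLOWED_CHARACTERS for ch in password)
    return long_enough and only_allowed


print(is_valid_master_password("a" * 32))        # long enough, letters only
print(is_valid_master_password("a" * 31 + "#"))  # contains a special character
```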

All of the commands listed below must be run as Admin from:

<product_dir>\bin

To generate a random 32 character password:

Issue the following command:

ComposeCtl.exe utils genpassword

To change the randomly generated master user password:

1. Issue the following command:

ComposeCtl.exe masterukey set --password <new_master_password>

If you add the --prompt parameter to the command and omit the --password parameter, the CLI will prompt you for the password. When you enter the password, it will be obfuscated. This is especially useful if you do not want passwords to be retained in the command prompt history.

Syntax:

ComposeCtl.exe masterukey set --prompt

2. Restart the Compose service.

To change a user-defined master user password:

1. Issue the following command:

ComposeCtl.exe masterukey set --current-password <current_master_password> --password

<new_master_password>

If you add the --prompt parameter to the command and omit the --password and --current-password parameters, the CLI will prompt you for the required passwords. When you enter the passwords, they will be obfuscated. This is especially useful if you do not want passwords to be retained in the command prompt history.

Syntax:

ComposeCtl.exe masterukey set --prompt

2. Restart the Compose service.

5 Data Warehouse projects

This section explains how to set up data warehouse projects.

In this section:

• Defining a Qlik Replicate task (page 36)

• Adding and managing data warehouse projects (page 37)

• Getting started with Data Warehouse projects (page 104)

• Setting up a data warehouse connection (page 111)

• Setting up Landing Zone and Data Source connections (page 131)

• Creating and managing the model (page 154)

• Creating and managing the data warehouse (page 190)

• Creating and managing data marts (page 225)

• Creating and managing command tasks (page 258)

• Controlling and monitoring tasks and workflows (page 260)

5.1 Defining a Qlik Replicate task

In order to work with Compose, you first need to define a Qlik Replicate task that replicates the source tables

from the source endpoint to a landing zone in the data warehouse (defined as the target endpoint in the

Replicate task). The landing zone should then be defined as the data source for the Compose project.

For information on which endpoints can be used in a Replicate task that lands data for Compose, see

Supported data warehouses (page 390).

Configuring multiple Replicate tasks with the same landing zone is not supported.

The steps below highlight the settings that are required when using Qlik Replicate with Compose. For a full

description of setting up tasks in Qlik Replicate, please refer to the Qlik Replicate Help.

Prerequisites

When Oracle is defined as the source endpoint in the Replicate task, full supplemental logging should be

defined for all source table columns that exist on the target and any source columns referenced in filters, data

quality rules, lookups, and expressions.

Limitations and considerations

• Replicate allows you to define global transformations that are applied to source/Change tables during task runtime. The following global transformations, however, should not be defined (as they are not compatible with Compose tasks):

    • Rename Change Table

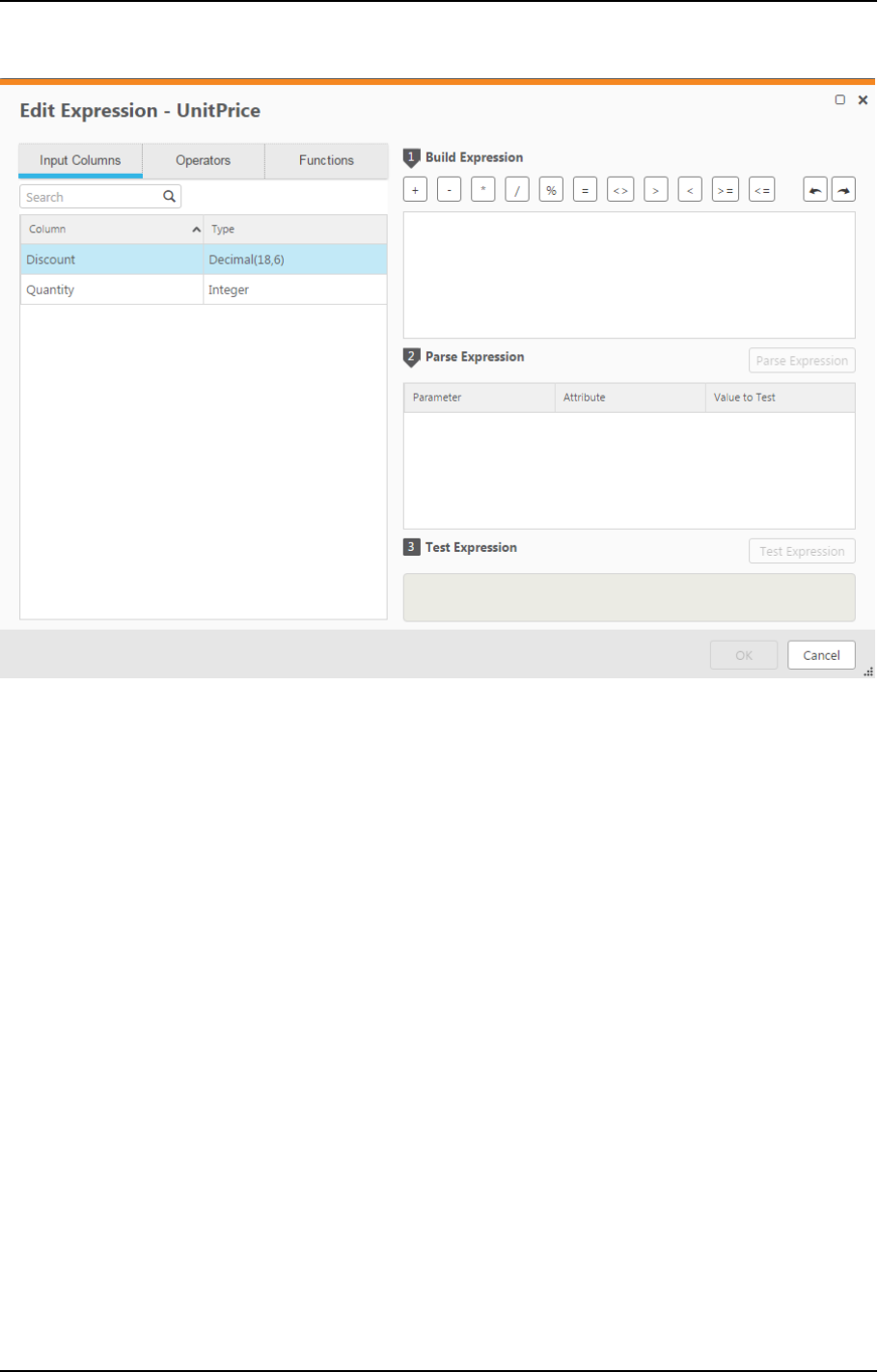

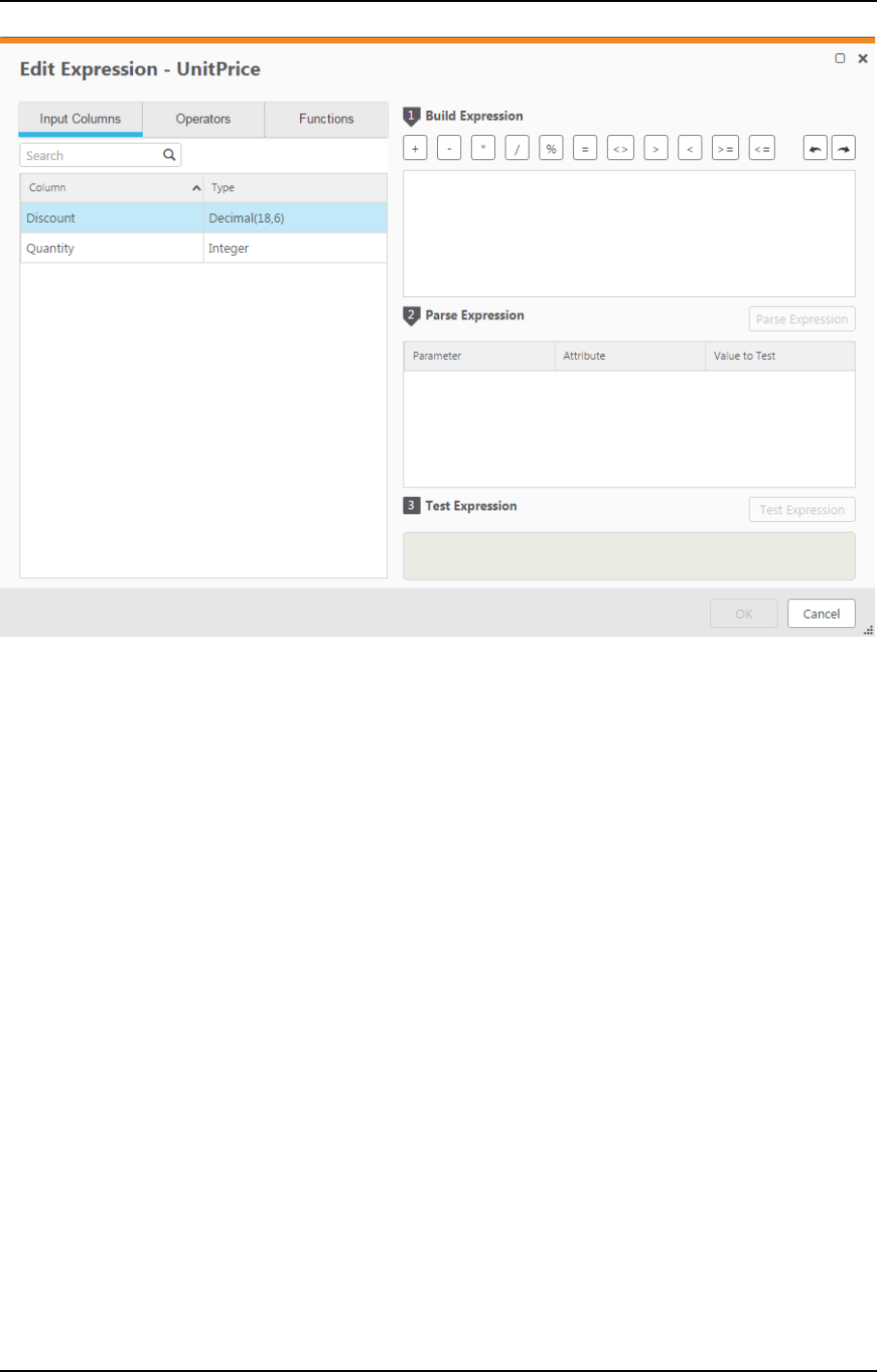

    • Rename Change Table schema

• The Create target control tables in schema option in the Replicate task settings' Control Table tab is not supported.

• Support for the JSON and XML data types is limited to the Snowflake VARIANT data type. Therefore, apart from the Snowflake VARIANT data type, columns that are usually created with these data types (by the Replicate target endpoint) should be created as STRINGs instead. This can be done automatically within Replicate using a data type transformation. For information on which target endpoints support JSON and XML data types as well as instructions on how to create a data type transformation, please refer to the Replicate Help.

• As Compose requires a full-after image to be able to perform Change Processing, the following Replicate source endpoints are not directly supported (as they do not provide a full-after image):

    • SAP HANA (log based)

    • Salesforce

Setting up the task

To define the task:

1. Open Qlik Replicate and in the New Task dialog, do one of the following:

    • To enable Full Load and Change Processing replication, enable the Full Load and Store Changes options (the Apply Changes option should not be enabled).

    • To enable Full Load only replication, enable the Full Load replication option only.

    • To enable Change Processing replication only, make sure that only the Store Changes option is enabled. Note that this option should only be selected if the Full Load tables and data already exist in the landing zone.

    • To enable Change Processing for lookup tables that already exist in the landing zone and are not part of the Compose model, enable the Apply Changes option only. Note that such a task should be defined in addition to the Full Load and Store Changes replication task described above. For more information on updating standalone lookup tables, see Using lookup tables that do not have a task for CDC mapping (page 208).

2. Open the Manage Endpoint Connections window and define a source and target endpoint. The target

endpoint must be the database where you want Compose to create the data warehouse.

3. Add the endpoints to the Qlik Replicate task and then select which source tables to replicate.

4. This step is not relevant if you selected the Apply Changes or Full Load replication option only. In the

Task Settings' Store Change Setting tab, make sure that Store Changes in is set to Change tables.

5. In the Task Settings’ Target Metadata tab, specify a Target table schema name.

6. If a Primary Key in a source table can be updated, it is recommended to turn on the DELETE and

INSERT when updating a primary key column option in Replicate's task settings' Change

Processing Tuning tab. When this option is turned on, history of the old record will not be preserved

in the new record. Note that this option is supported from Replicate November 2022 only.

7. Run the task. Wait for the Full Load replication to complete and then continue the workflow in

Compose as described in the Data warehouse project tutorial (page 107) below and in Adding and

managing data warehouse projects (page 37).

Replicate allows you to define global transformations that are applied to source/Change tables

during task runtime. The following global transformations, however, should not be defined (as they

are not compatible with Compose tasks):

• Rename Change Table

• Rename Change Table schema

5.2 Adding and managing data warehouse projects

This section describes how to add and manage a data warehouse project.

In this section:

• Adding data warehouse projects (page 38)

• Managing and monitoring projects (page 282)

• Project settings (page 40)

• Resetting projects (page 46)

• Project deployment (page 47)

• Migrating objects as CSV files (page 49)

• Exporting and importing projects using the CLI (page 78)

• Generating projects using the CLI (page 98)

• Exporting project documentation (page 99)

• Viewing and downloading DDL scripts (page 100)

• Project versioning (page 300)

• Creating a diagnostics package (page 301)

Adding data warehouse projects

Adding a new project is the first task you need to undertake in order to work with Qlik Compose.

There are two types of project:

• Data Warehouse - for ingesting data from multiple sources and creating analytics-ready data marts.

• Data Lake - for ingesting data from multiple sources and moving it to a storage system for analytics.

This topic guides you through the steps required to set up a data warehouse project. For instructions on

setting up a Data Lake project, see Adding data lake projects (page 280).

You can set up as many projects as you need, although the ability to actually run tasks is determined by your

Compose license.

Adding a Data Warehouse project

To add a new Data Warehouse project:

1. Click the New Project toolbar button.

The New Project wizard opens.

2. In the Project Name tab, specify the following and then click Next:

   • Name: The project name.

     Project names cannot contain any of the following characters: /\,&#%$@=^*+"'`~?<>:;[]{} or any non-printable character (below 0x20). A project name can contain a single dot, but the dot cannot be the first or last character.

   • Environment Type: Optionally, change the default environment type.

   • Environment Title: Optionally, specify an environment title.

   For information about the environment settings, see Environment tab (page 43).

The following names are reserved system names and cannot be used as project names: CON, PRN, AUX, CLOCK$, NUL, COM1, COM2, COM3, COM4, COM5, COM6, COM7, COM8, COM9, LPT1, LPT2, LPT3, LPT4, LPT5, LPT6, LPT7, LPT8 and LPT9.

3. Select Data Warehouse as your project type and then click Finish.

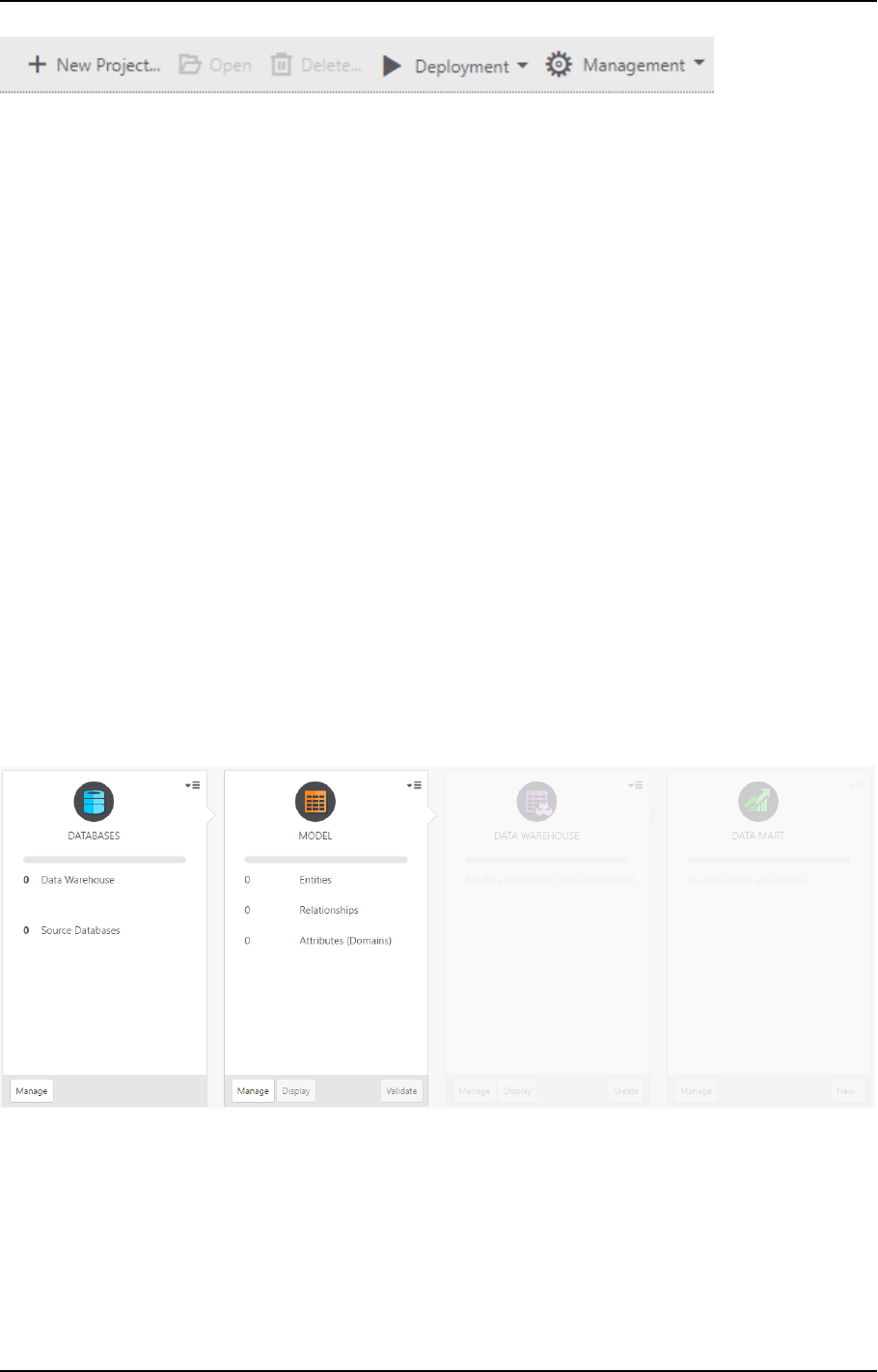

4. The project panels will be displayed.

5. Add at least one source database and a data warehouse as described in Setting up Landing Zone and

Data Source connections (page 131) and Setting up a data warehouse connection (page 111) respectively.

6. Create a model as described in Creating and managing the model (page 154).

7. Set up the data warehouse as described in Creating and managing the data warehouse (page 190).

8. Set up the data mart as described in Creating and managing data marts (page 225).
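The project naming rules listed in step 2 can be checked before a name is typed into the wizard. The following is a minimal Python sketch, not part of Compose: the function name `project_name_problems` is hypothetical, and the handling of empty names and the case-insensitive matching of reserved names are assumptions that the guide does not state.

```python
from typing import List

# Characters the guide lists as not allowed in project names.
FORBIDDEN_CHARS = set('/\\,&#%$@=^*+"\'`~?<>:;[]{}')

# Reserved system names listed in the guide.
RESERVED_NAMES = (
    {"CON", "PRN", "AUX", "CLOCK$", "NUL"}
    | {"COM%d" % i for i in range(1, 10)}
    | {"LPT%d" % i for i in range(1, 10)}
)


def project_name_problems(name: str) -> List[str]:
    """Return the naming rules that `name` breaks (empty list if none)."""
    if not name:
        # Assumption: an empty name is rejected; the guide does not say so.
        return ["name is empty"]
    problems = []
    bad = sorted({ch for ch in name if ch in FORBIDDEN_CHARS})
    if bad:
        problems.append("forbidden characters: " + " ".join(bad))
    if any(ord(ch) < 0x20 for ch in name):
        problems.append("non-printable character (below 0x20)")
    if name.count(".") > 1:
        problems.append("more than one dot")
    if name.startswith(".") or name.endswith("."):
        problems.append("dot is the first or last character")
    # Assumption: reserved names are matched case-insensitively.
    if name.upper() in RESERVED_NAMES:
        problems.append("reserved system name")
    return problems


if __name__ == "__main__":
    for candidate in ["Sales_DW", "Sales.DW", ".Sales", "a.b.c", "Sales/DW", "con"]:
        print(candidate, "->", project_name_problems(candidate) or "ok")
```

A check like this is only a convenience for scripted project creation; the New Project wizard remains the authority on which names are accepted.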

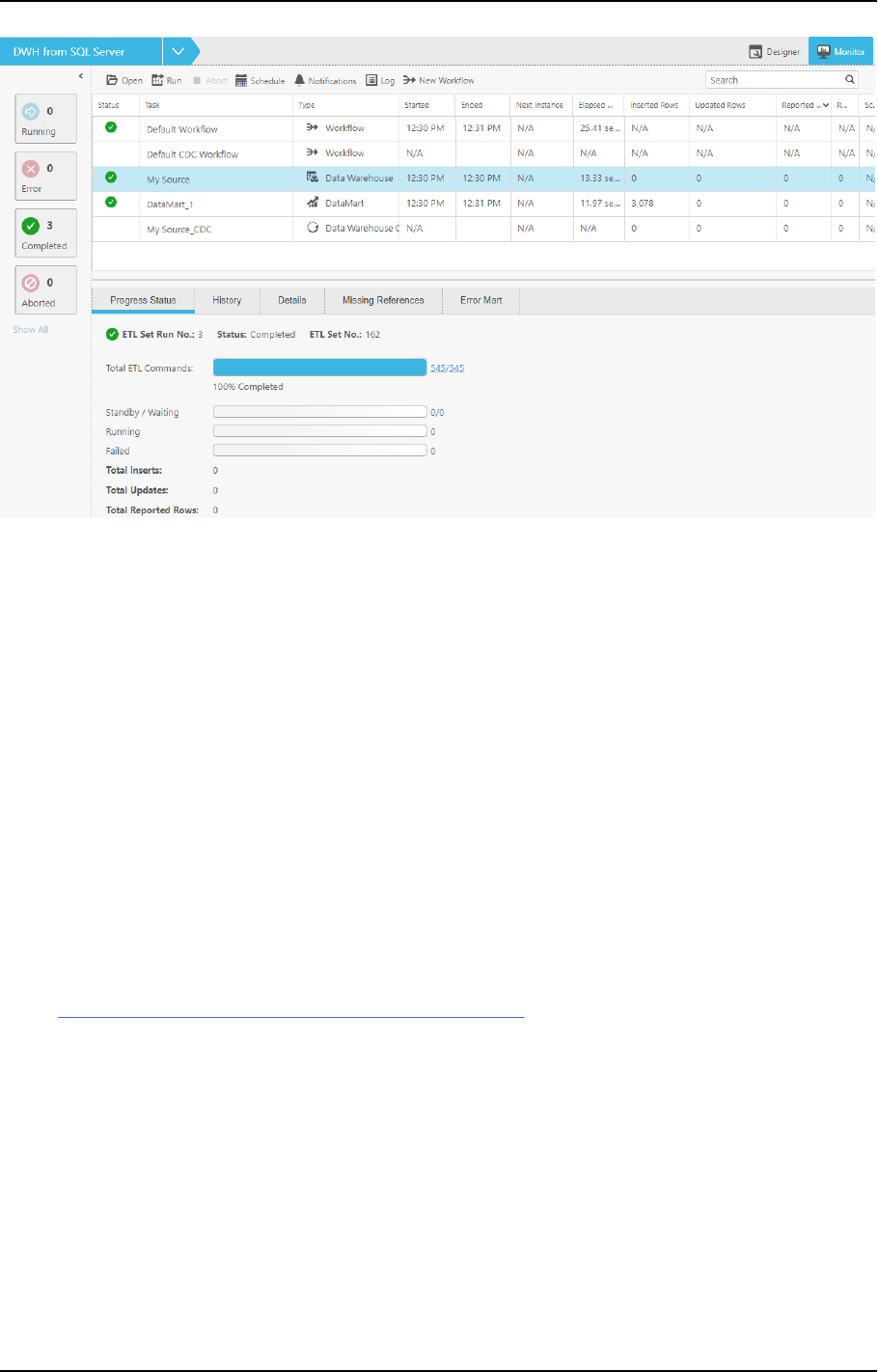

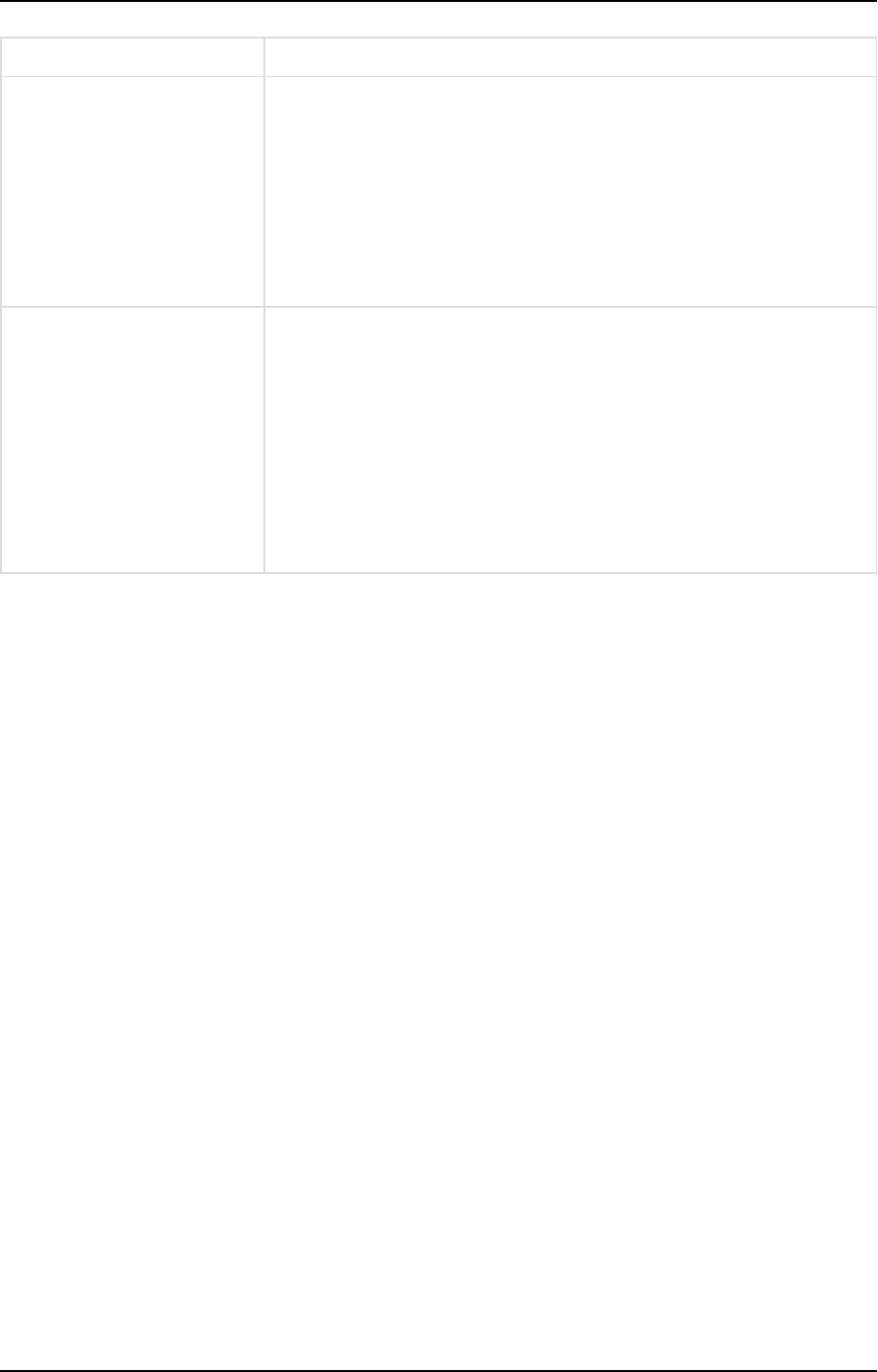

Managing and monitoring projects

The table below describes the available project management options.

Project management actions are performed in the main Compose window. To switch from a specific

project to the main window, click the downward arrow to the right of the project name and then

select All Projects from the drop-down menu.

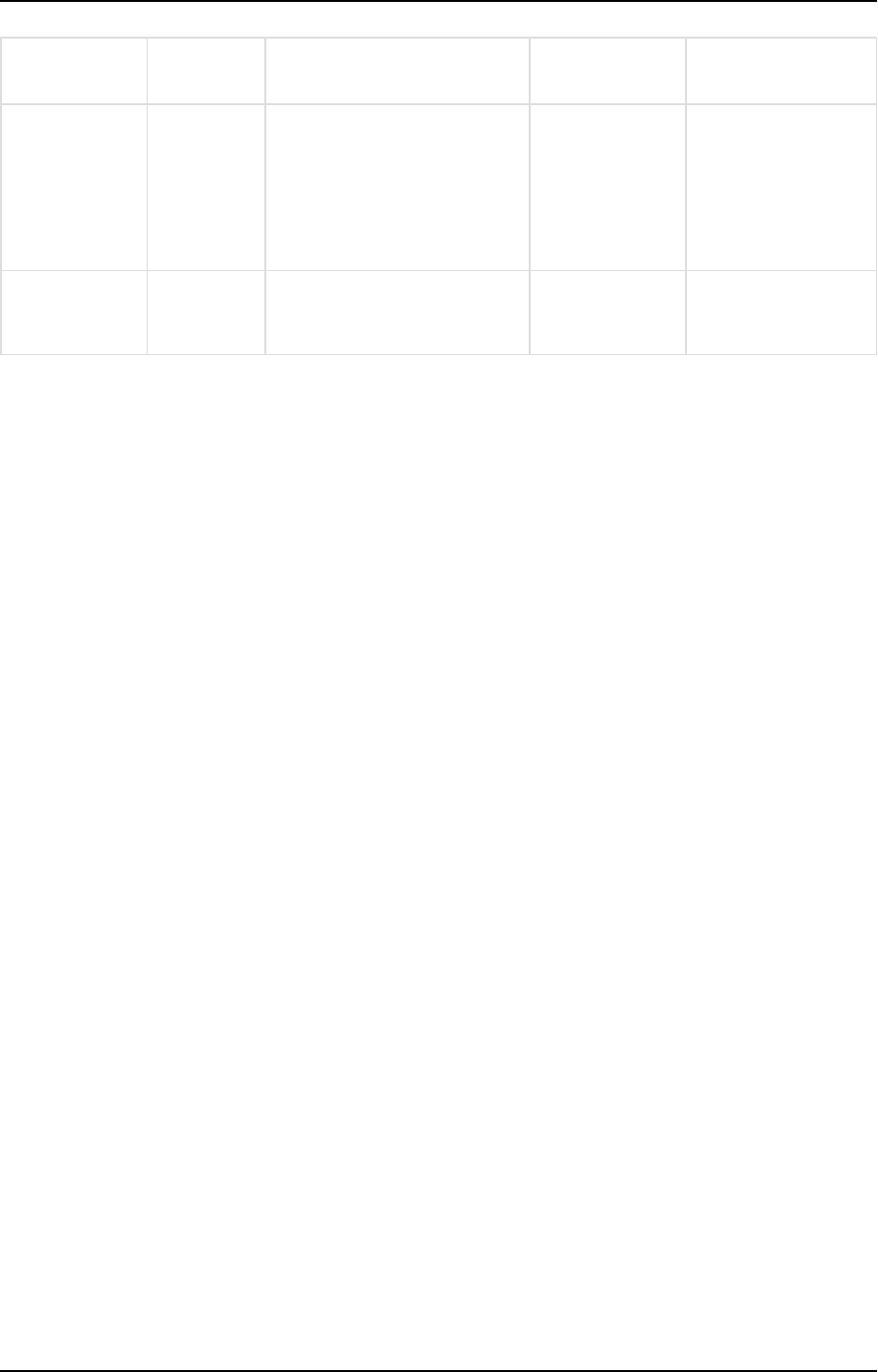

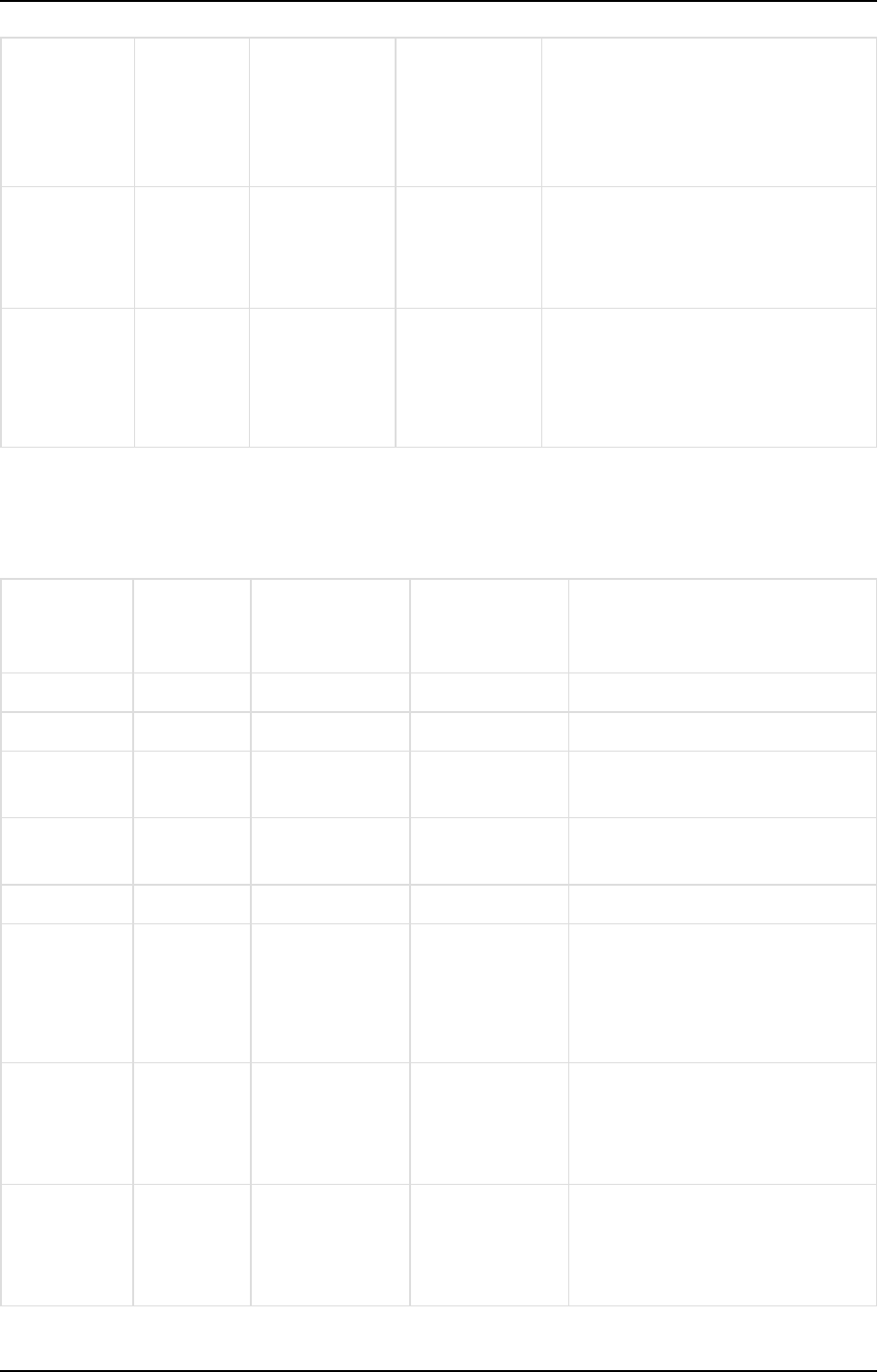

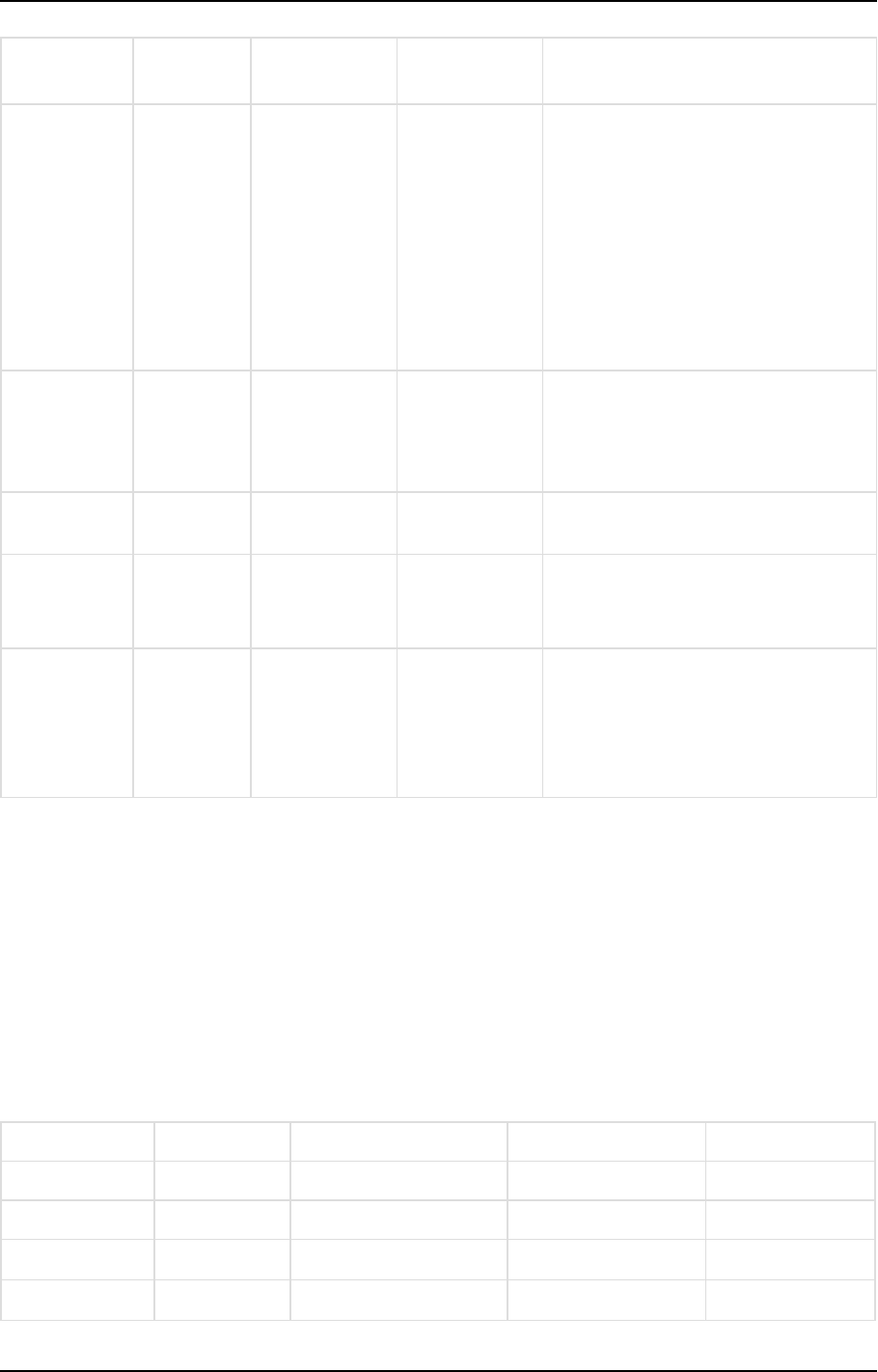

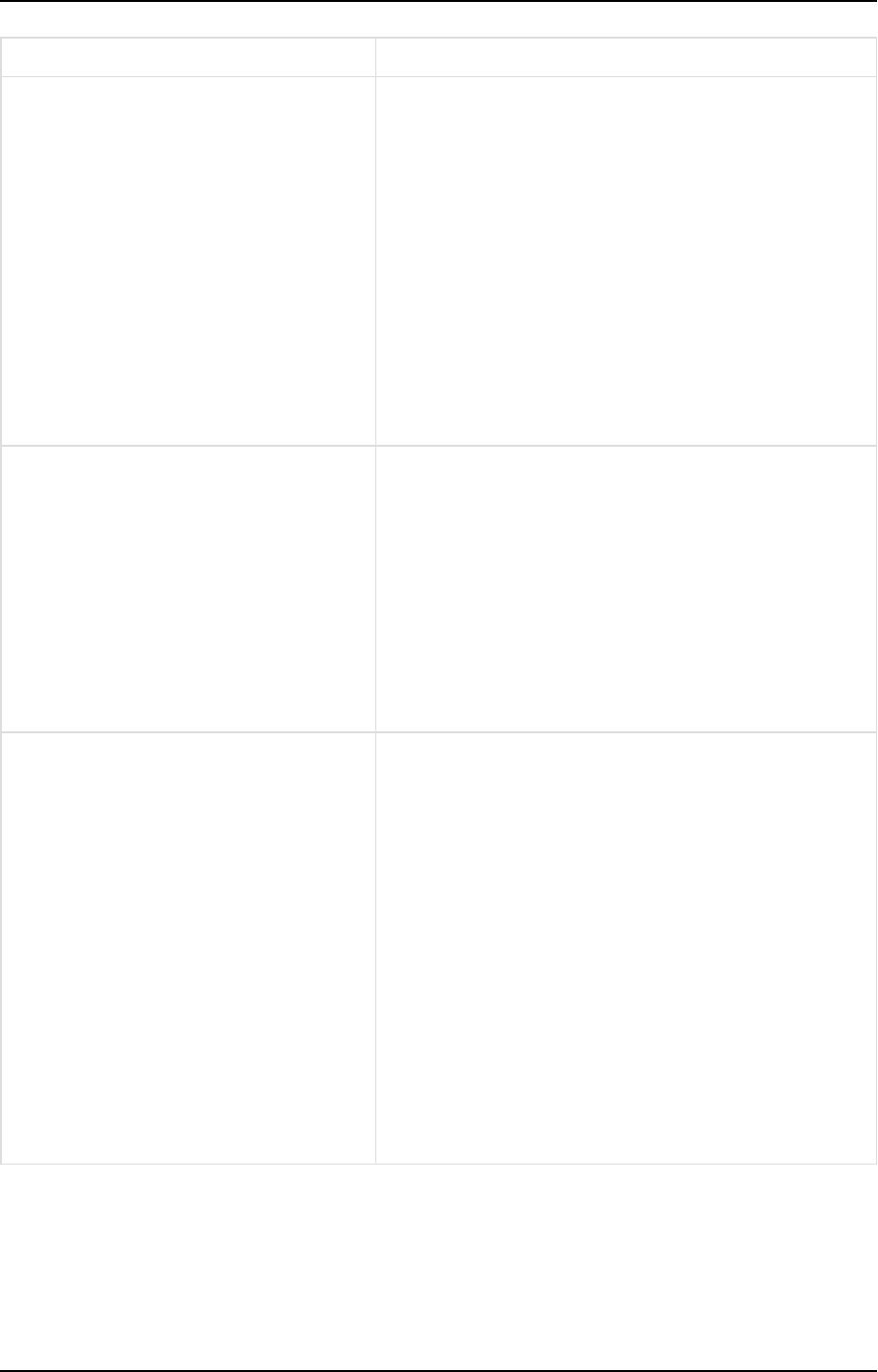

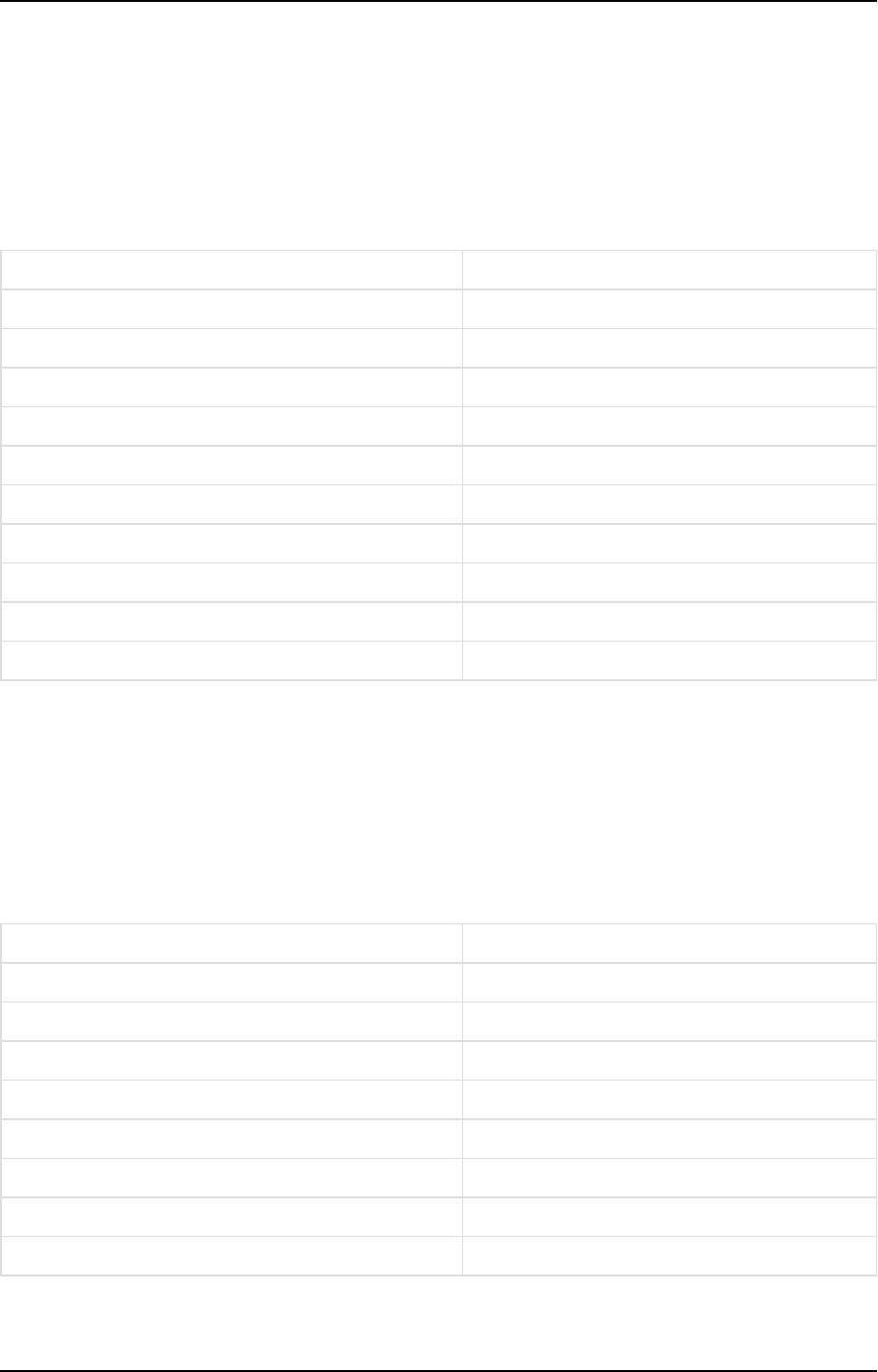

Project management procedures

| To | Do this |
|---|---|
| Edit a project | Any of the following:<br>• Double-click the project.<br>• Right-click the project and select Designer.<br>• Select the project and then click the Open toolbar button. |
| Monitor a project | Any of the following:<br>• Right-click the project and select Monitor.<br>• Double-click the project and select the Monitor tab on the right of the console. |
| Create a deployment package | Any of the following:<br>• Right-click the project and select Create Deployment Package.<br>• Select the project and then select Create Deployment Package from the Deployment toolbar menu.<br>See also: Project deployment (page 47) (Data Warehouse projects) and Project deployment (page 289) (Data Lake projects). |
| Delete a project | Any of the following:<br>• Right-click the project and select Delete.<br>• Select the project and then click the Delete toolbar button. |
| View or change user permissions | Right-click the project and select User Permissions.<br>Relevant for Data Warehouse projects only.<br>See also: User permissions (page 371). |

Project settings

You can change the project settings according to your needs.

To access the project settings:

1. Open your project as described in Managing and monitoring projects (page 282).

2. Click the downward arrow to the right of the project name and select Settings from the drop-down

menu.

The Settings window opens, displaying the following tabs:

• General tab (page 40)

• Naming tab (page 42)

• Environment tab (page 43)

• Table creation modifiers tab (page 45)

General tab

In this tab, the following settings are available:

Miscellaneous

• Generate DDL scripts but do not run them: By default, Compose executes the CREATE, ADJUST and DROP statements immediately upon user request. When you select this option, Compose only generates the scripts and does not execute them. This allows you to review and edit the scripts before they are executed.

For example, if you want your data warehouse/storage tables to contain partitions, you will need to edit the CREATE statement to create the partitions.

You can view, copy and download the DDL scripts as described in Viewing and downloading DDL scripts (page 100).

When this option is selected, you need to do the following to see the results:

   • After running the scripts, clear the metadata cache as described in Clearing the data warehouse metadata cache (page 224).

   • Press [F5] (that is, refresh the page) so that the web console displays the updated list of tables. You can do this either before running the scripts (recommended) or after running them. Until you refresh the browser, the information in the web console will only be partially updated.

• Ignore Mapping Data Type Validation: By default, Compose issues a validation error when a landing table is mapped to a logical entity with a different data type. You can select this option to allow the mapping of different data types. Note that you should only select this option if you need to map landing table data types to compatible (though not identical) logical entity data types.

• Write metadata to the TDWM tables in the data warehouse: When this option is selected (the default unless Amazon Redshift is the data warehouse), Compose writes the metadata for the data warehouse tables to the following tables: <schema>.TDWM_Tables and <schema>.TDWM_COLUMNS.

Centralizing the metadata in two dedicated tables makes it easier for external metadata tools to analyze the metadata. The metadata is also written to the local Compose repository, so clearing this option (if performance issues are encountered) will not affect Compose functionality in any way.

• Do not display the default workflows in the monitor: Select this option if you want to prevent the default workflows from being executed.

Dates

• Lowest Date: The value stored in the "From Date" column. This is the date when the version started.

• Highest Date: The value stored in the "To Date" column. This is the date when the version ended.
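To illustrate how these two dates bound a record's versions, the following Python sketch models a History Type 2 value whose versions are delimited by "From Date" and "To Date". It is an illustration only, not Compose's implementation. It assumes that the first version starts at the Lowest Date, that the current version ends at the Highest Date, and that a closed version's "To Date" equals the next version's "From Date". The dates 1900-01-01 and 9999-12-31 and the names `Version` and `add_version` are placeholders.

```python
from dataclasses import dataclass
from datetime import date
from typing import List

# Placeholder values standing in for the project's Lowest Date and Highest Date.
LOWEST_DATE = date(1900, 1, 1)
HIGHEST_DATE = date(9999, 12, 31)


@dataclass
class Version:
    value: str
    from_date: date  # "From Date": when this version started
    to_date: date    # "To Date": when this version ended


def add_version(history: List[Version], value: str, changed_on: date) -> None:
    """Record a new value by adding a row instead of overwriting the old one."""
    if not history:
        # Assumption: the first version starts at the Lowest Date.
        history.append(Version(value, LOWEST_DATE, HIGHEST_DATE))
        return
    # Close the current version, then open a new one that stays open-ended.
    history[-1].to_date = changed_on
    history.append(Version(value, changed_on, HIGHEST_DATE))


if __name__ == "__main__":
    city_history: List[Version] = []
    add_version(city_history, "Boston", date(2022, 1, 1))
    add_version(city_history, "Chicago", date(2023, 6, 1))
    for version in city_history:
        print(version)
```

In this model, a query for the current version simply filters on the Highest Date in "To Date".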

Current Time Convention

When a source record’s timestamp cannot be determined, select one of the following to use instead:

• Current time in UTC (the default for new projects)

   When upgrading or deploying old projects, server local time remains the default in order to preserve backward compatibility. Before changing this option, make sure that existing data will not be impacted.

• Current time in server local time

For existing objects, Compose will not be able to determine a source record's timestamp if both of the following are true:

• The "From Date" columns are not mapped

• The task is set up to perform Incremental Load
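The fallback described above can be pictured with a short Python sketch. This is an illustration under stated assumptions rather than Compose's own logic: the function `fallback_timestamp` and its `use_utc` flag are hypothetical stand-ins for the Current Time Convention setting.

```python
from datetime import datetime, timezone
from typing import Optional


def fallback_timestamp(source_ts: Optional[datetime], use_utc: bool = True) -> datetime:
    """Return the source timestamp, or the current time when it is unknown."""
    if source_ts is not None:
        # The record's own timestamp could be determined, so no fallback is needed.
        return source_ts
    if use_utc:
        # "Current time in UTC"
        return datetime.now(timezone.utc)
    # "Current time in server local time"
    return datetime.now().astimezone()


if __name__ == "__main__":
    print(fallback_timestamp(None, use_utc=True))
    print(fallback_timestamp(None, use_utc=False))
```

The two conventions differ by the server's UTC offset, which is why switching between them on a project with existing data can shift the recorded version dates.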

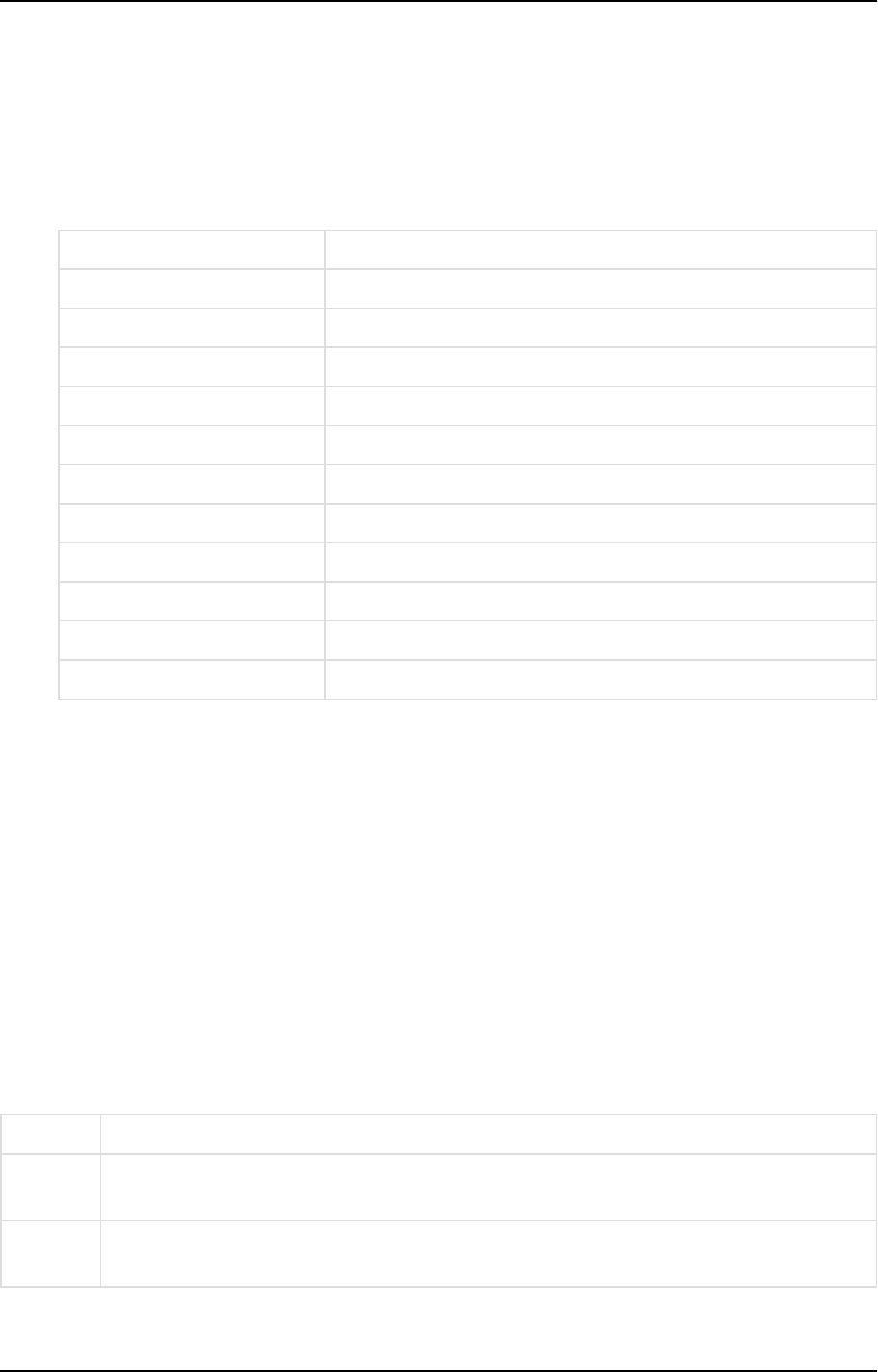

Naming tab

In this tab, you can change the default "From Date" and "To Date" column names, as well as the prefixes and

suffixes used to identify tables, views, and columns.

If you change the prefix or suffix of existing tables (e.g. data warehouse tables), you need to drop

and create the data warehouse and data mart tables.

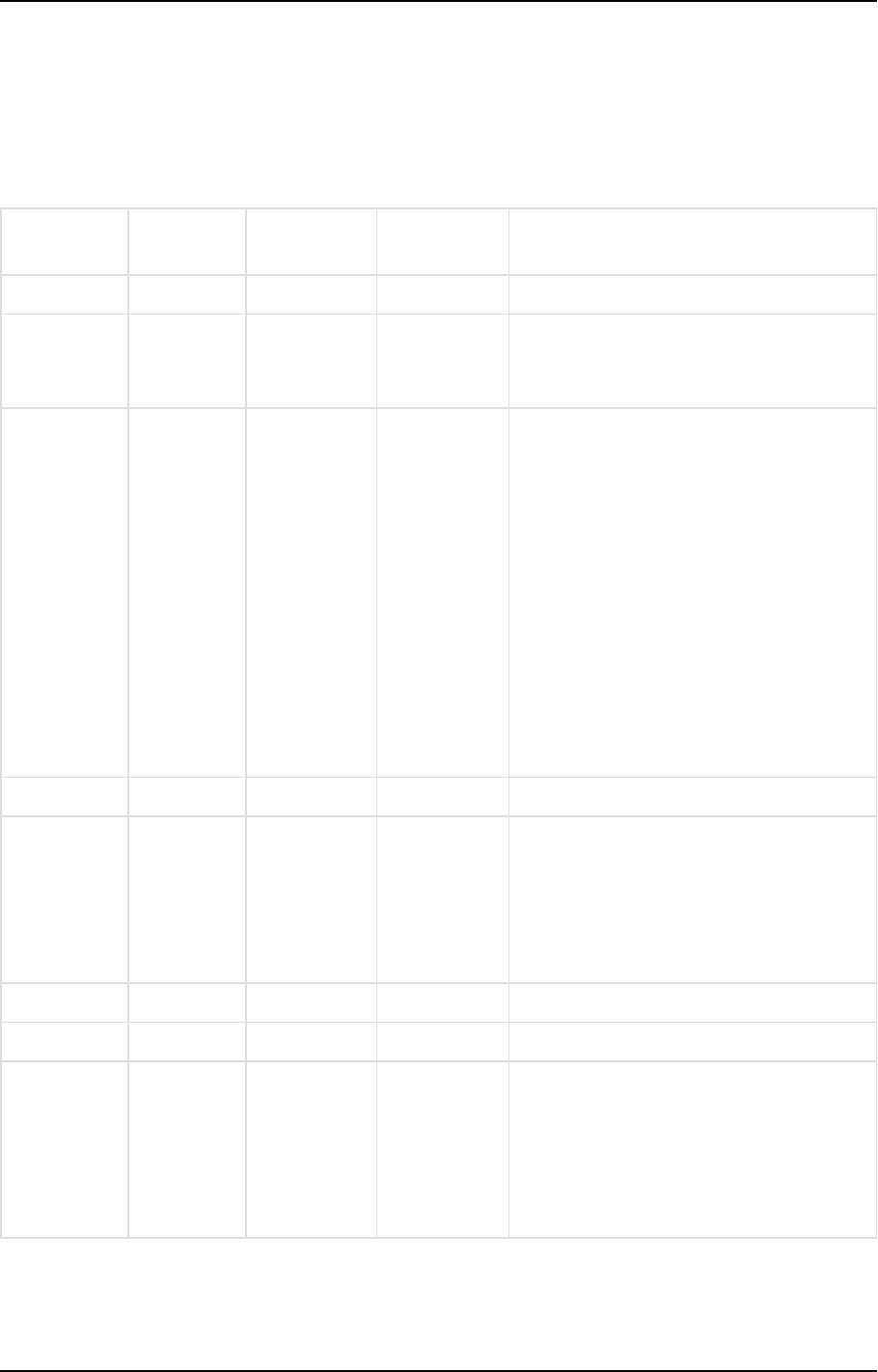

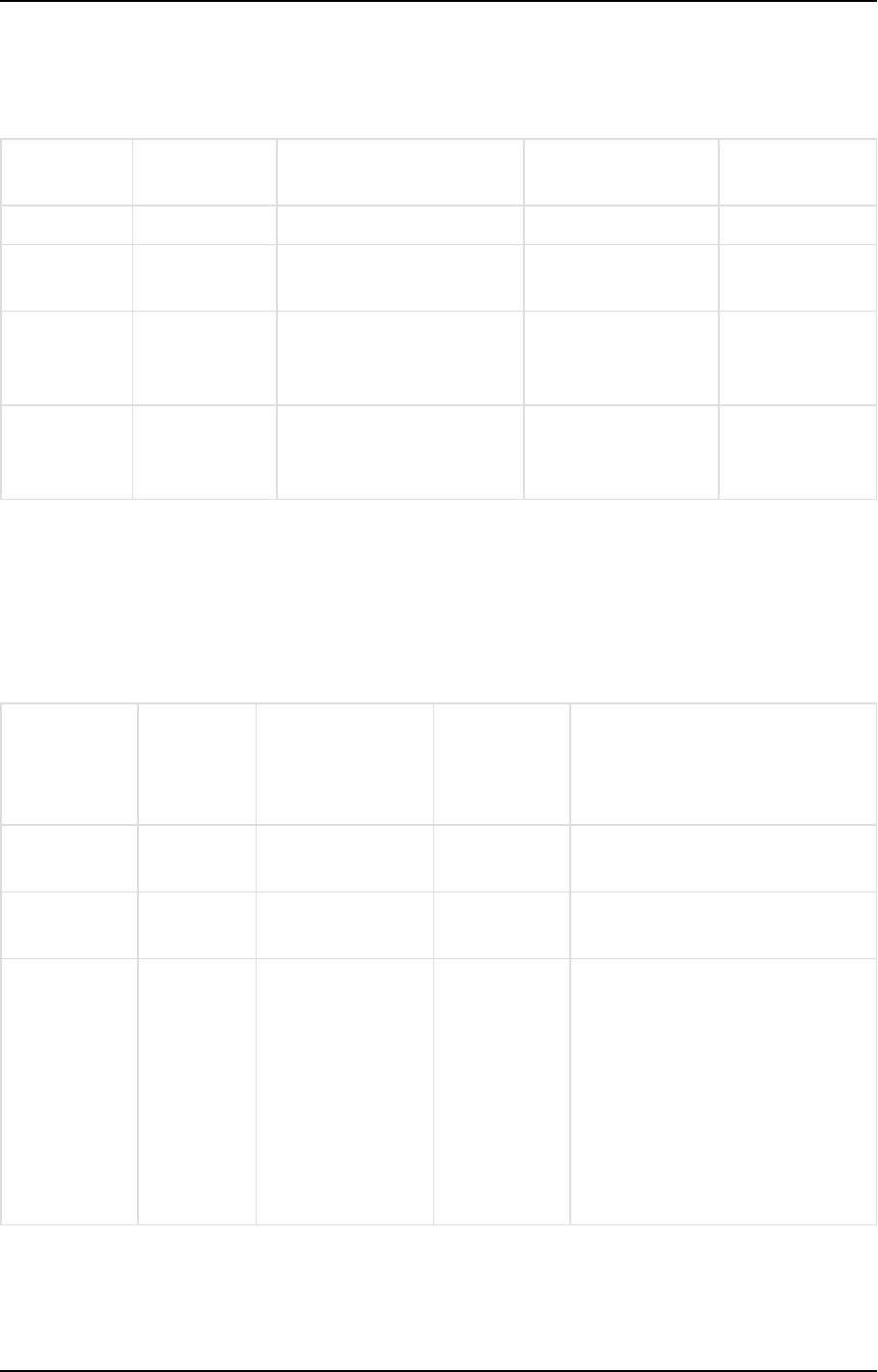

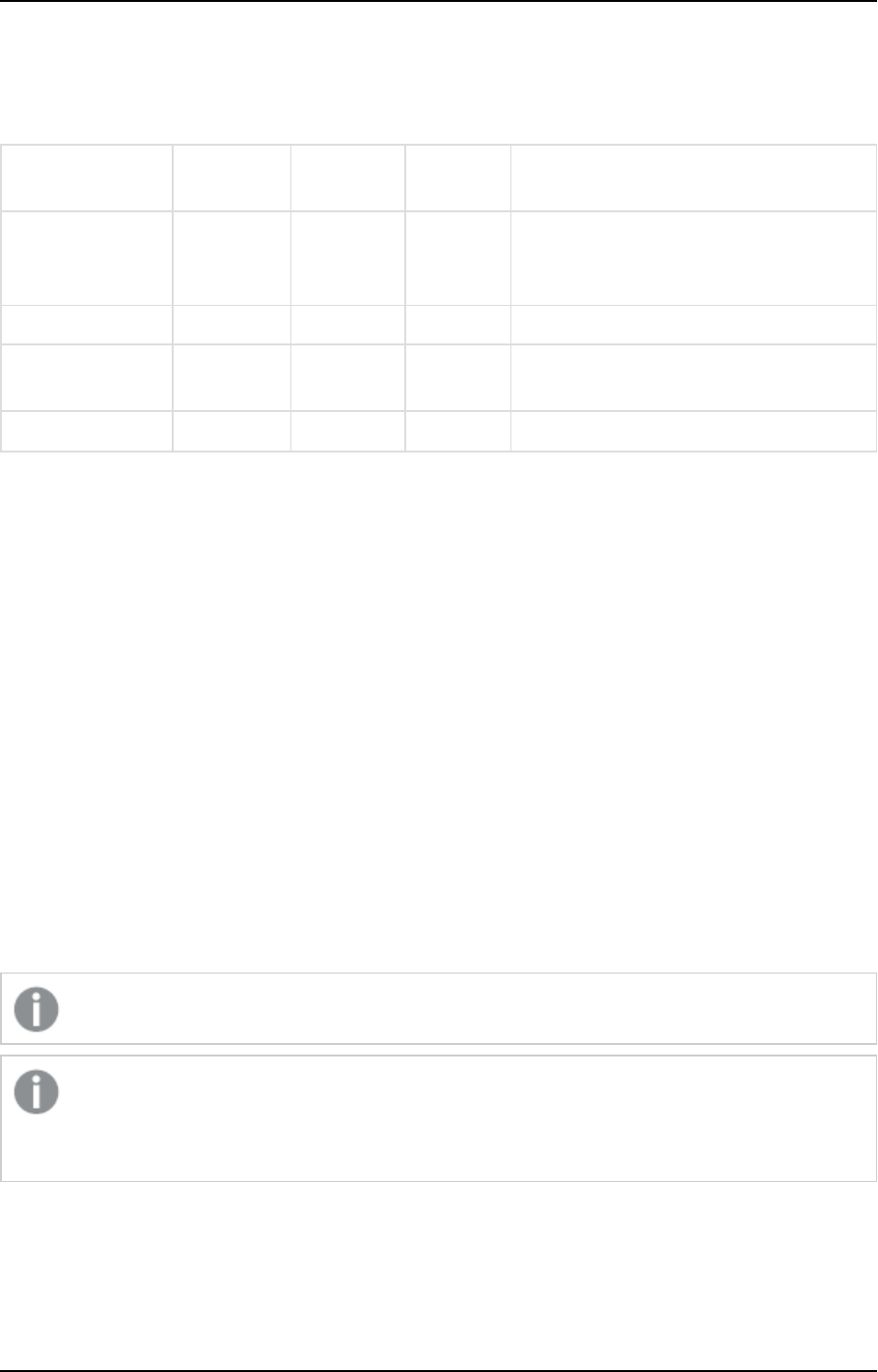

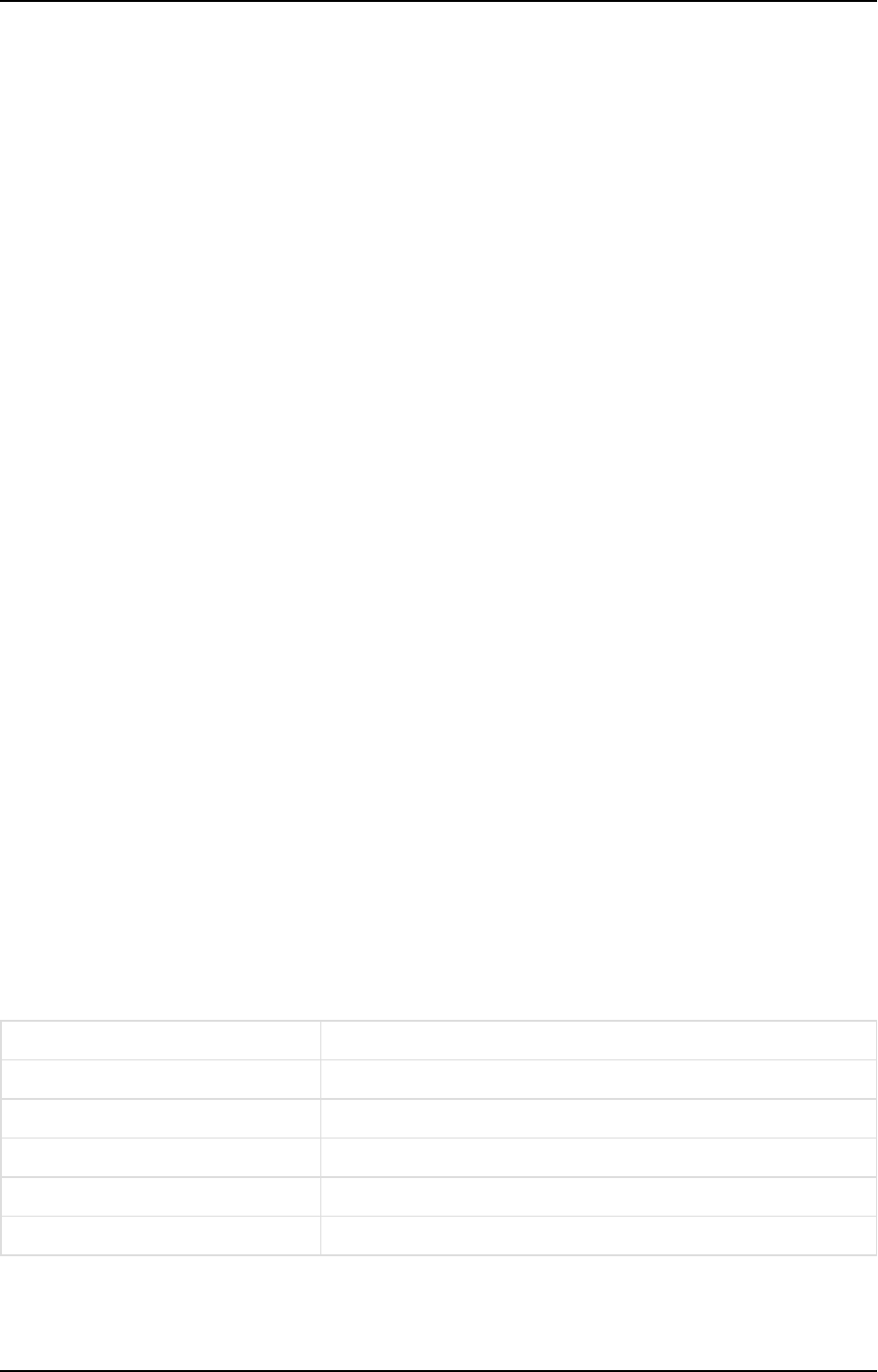

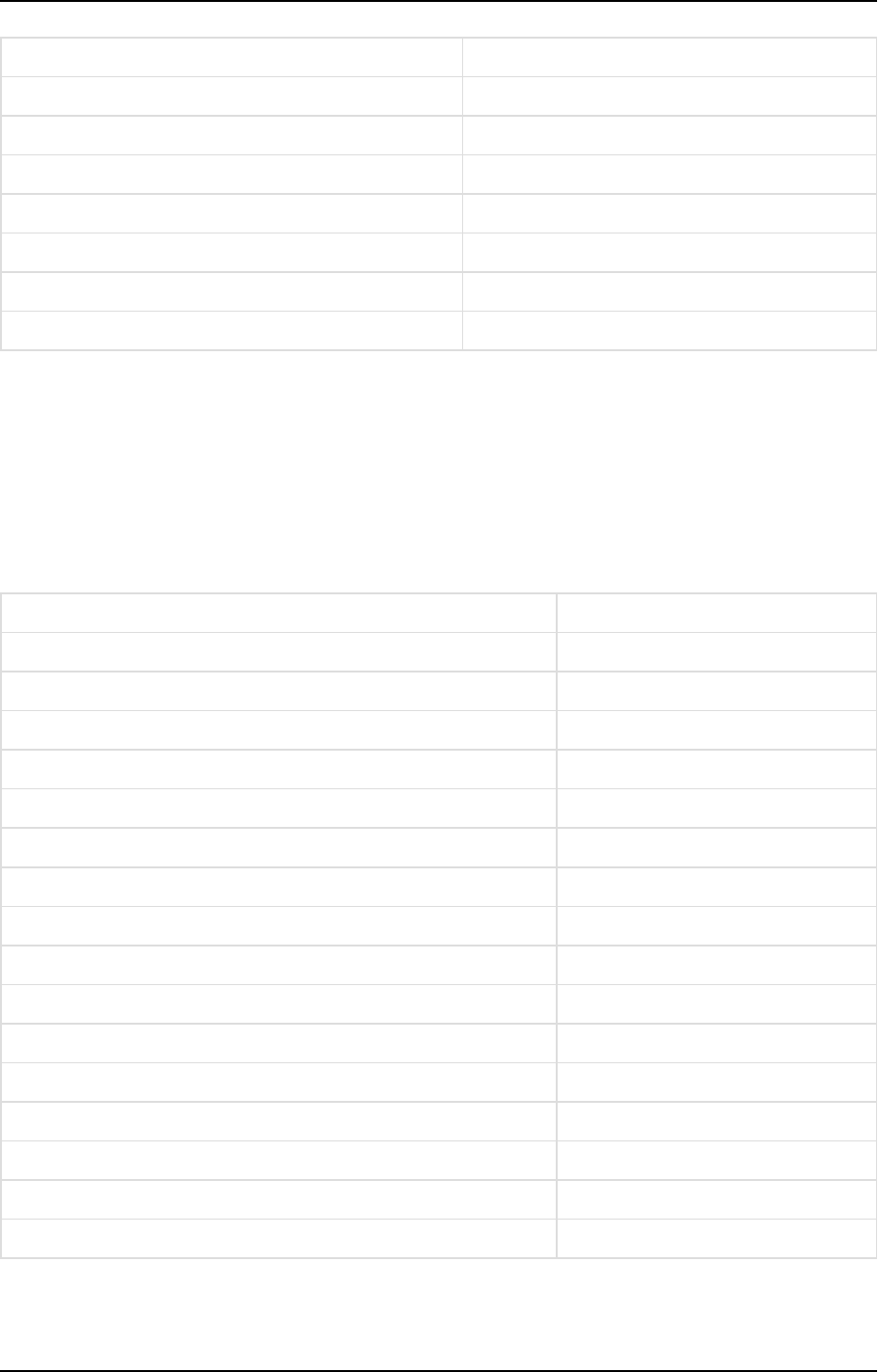

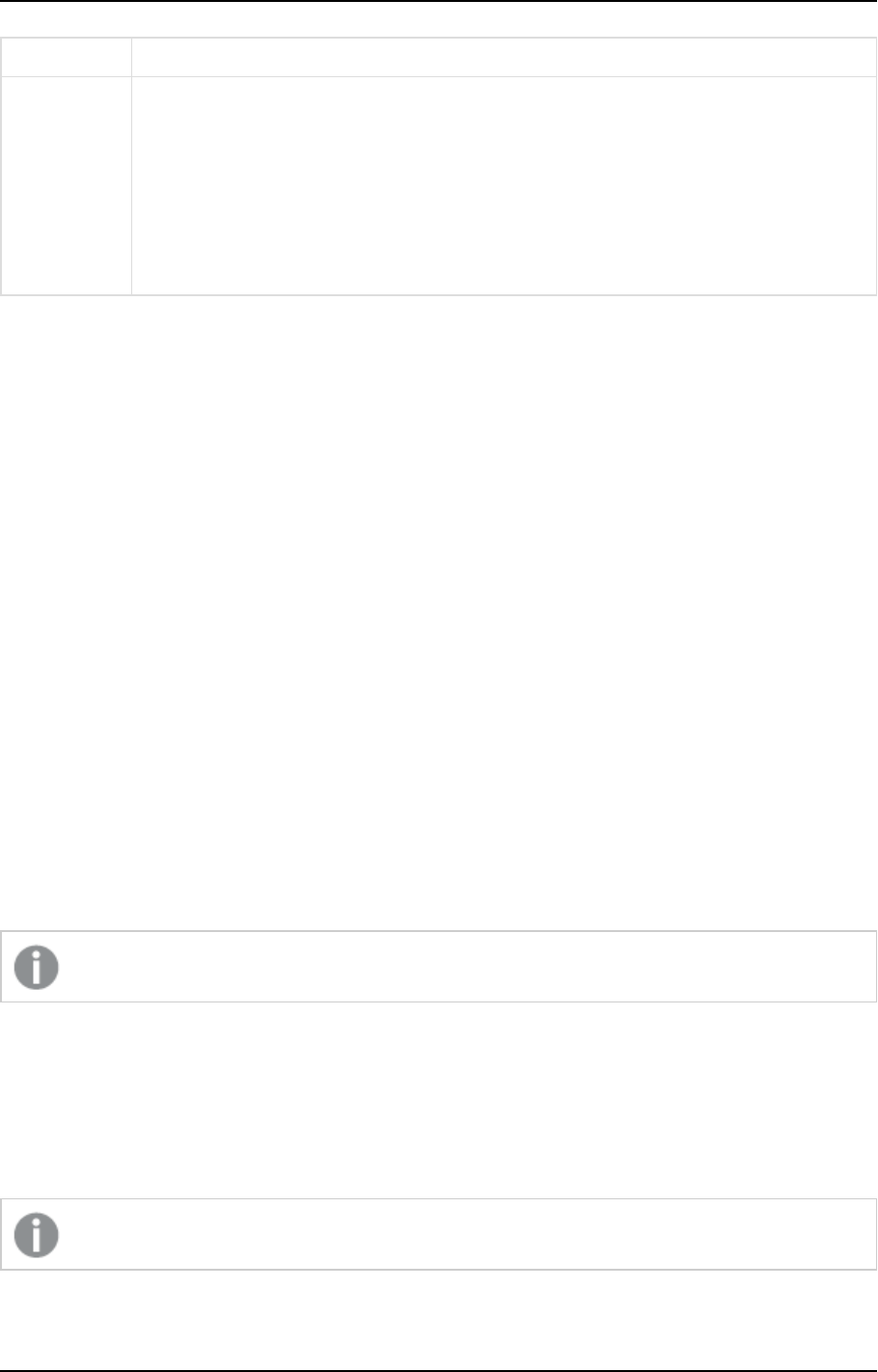

Name management options

| Name | Description |
|---|---|
| Suffix for Replicate Change Tables | The suffix used to identify Replicate Change Tables in the landing zone of the data warehouse. |
| Prefix for data warehouse tables | The prefix used to identify tables in the Data Warehouse. |
| Prefix for data warehouse views | The prefix used to identify views in the Data Warehouse. |
| Suffix for archived Replicate Change Tables | The suffix used to identify archived Change Tables in the specified database. For more information on archiving Change Tables, see After applying changes. |
| Prefix for data mart tables | The prefix used to identify tables in the data mart. |
| Suffix for exception mart tables | The suffix used to identify error tables in the data warehouse. These tables contain data that was rejected by a data quality rule. |
| Suffix for hub tables | The suffix used to identify hub tables in the Data Warehouse. Hub tables contain History Type 1 columns. Unlike History Type 2 columns, History Type 1 columns do not contain any version history. |
| Suffix for satellite tables | The suffix used to identify satellite tables in the Data Warehouse. Satellite tables contain History Type 2 columns. History Type 2 columns keep a history of the data version by adding a new row whenever the data is updated. |
| "From Date" column name | The name of the "From Date" column. This column is added to tables that contain attributes (columns) with a History Type 2. The column is used to delimit the range of dates for a given record version. |

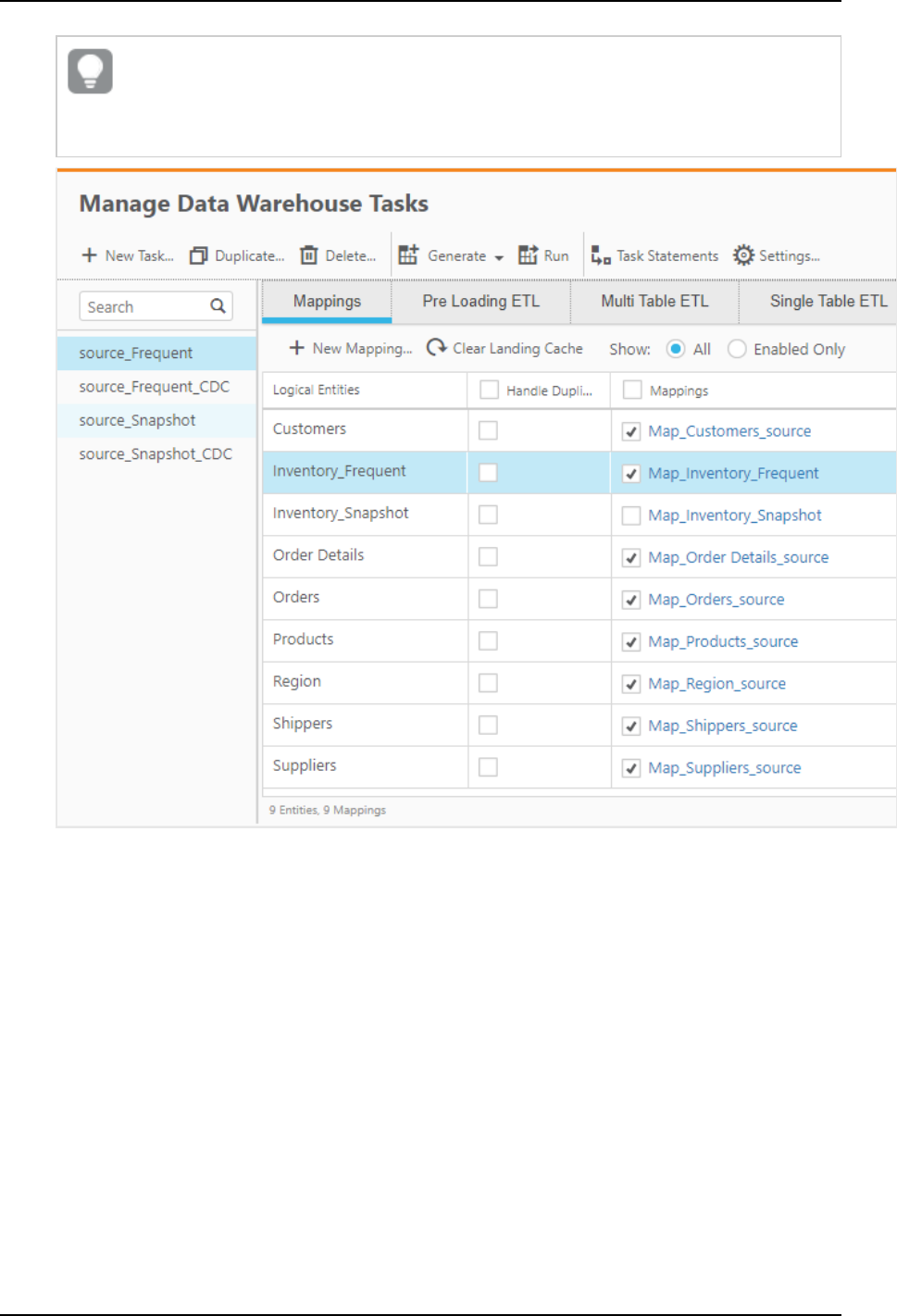

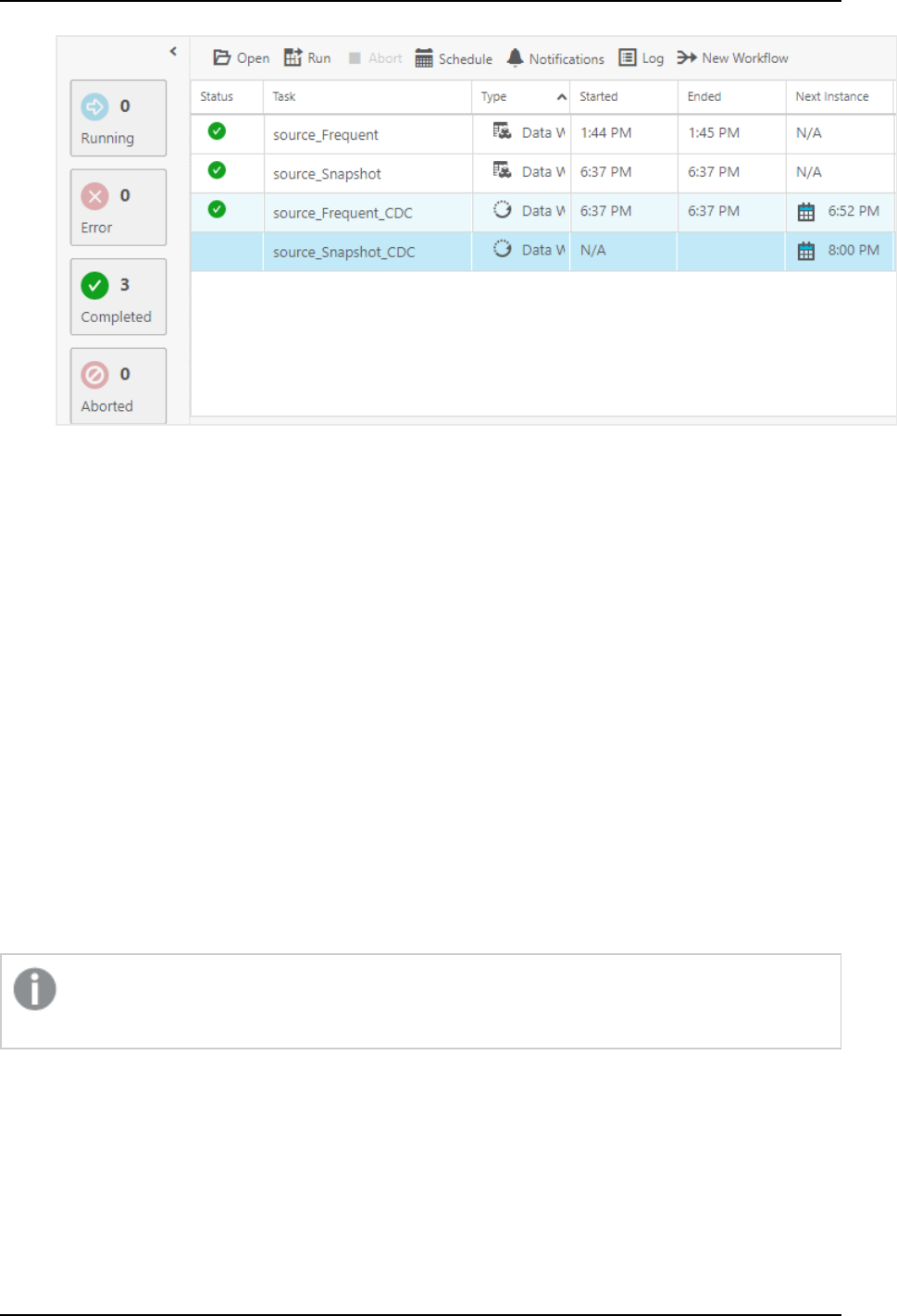

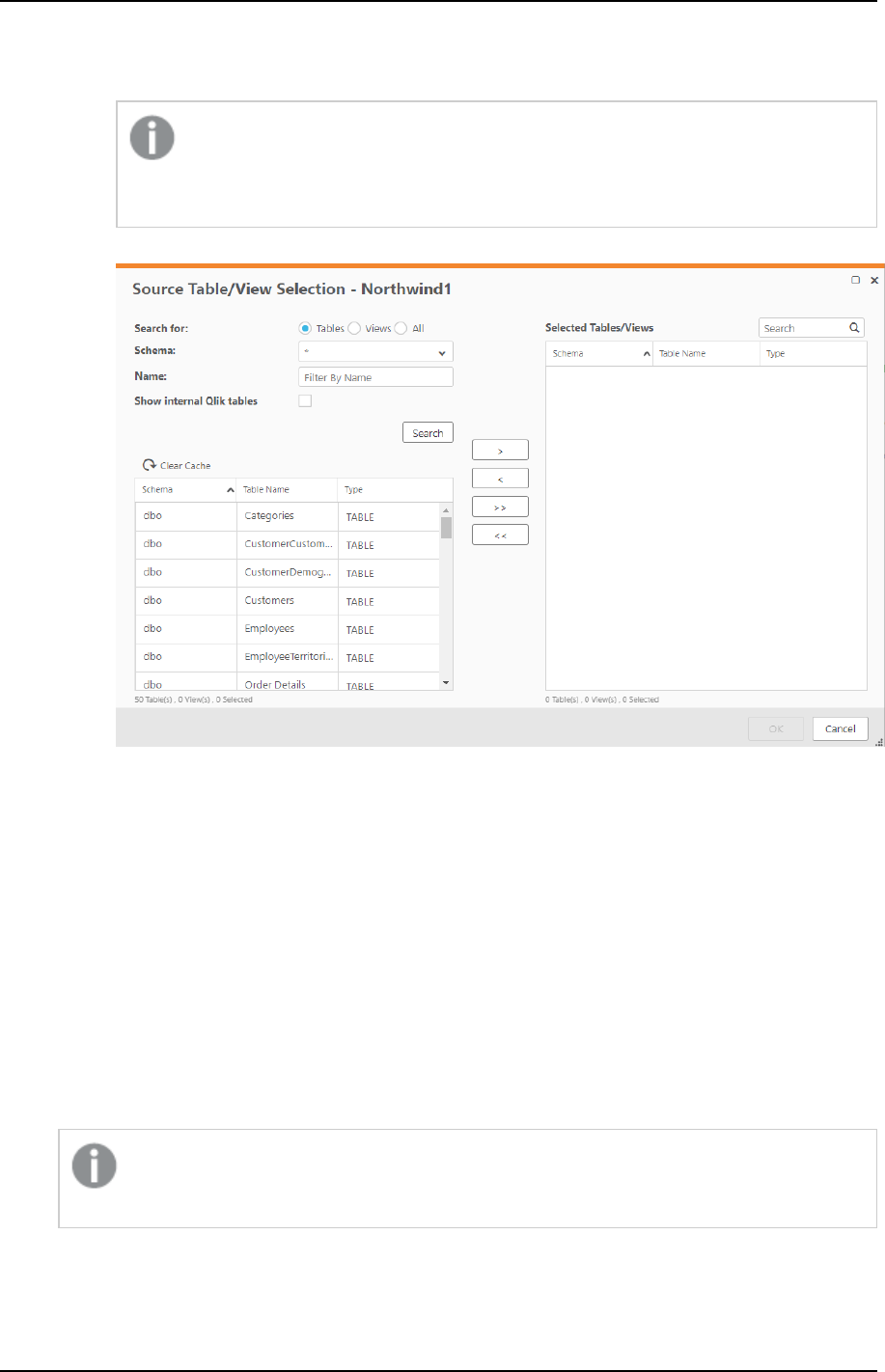

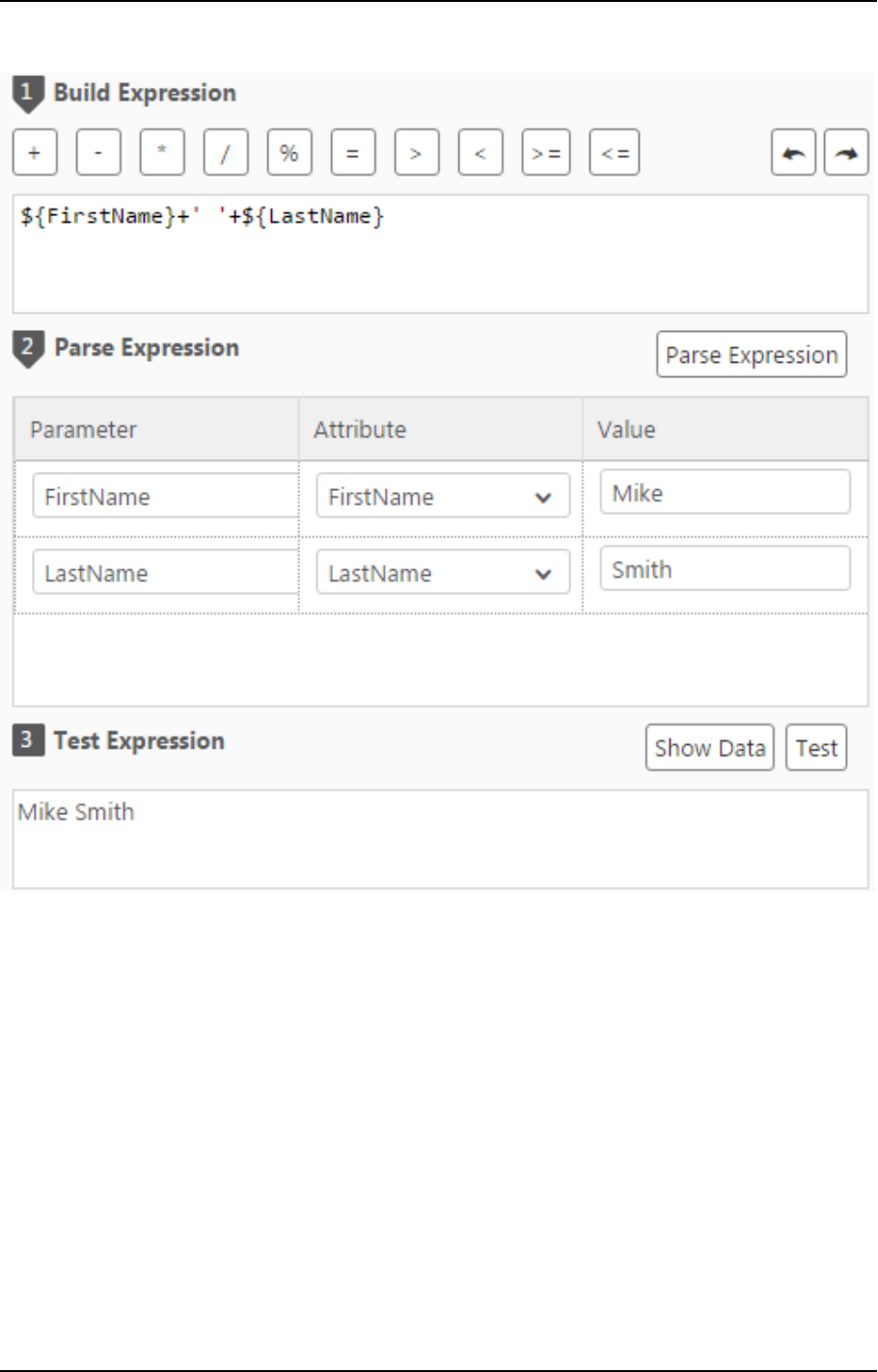

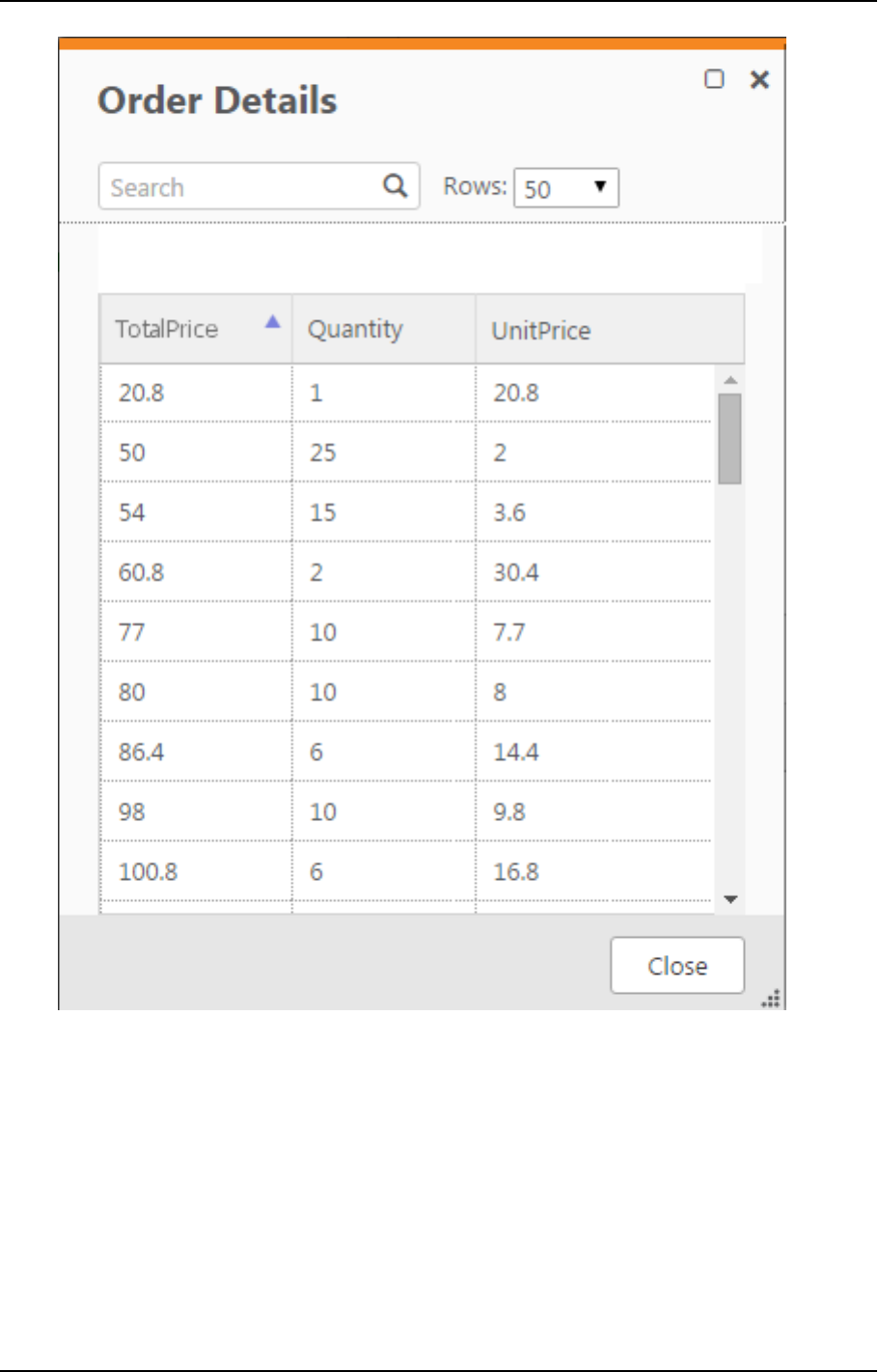

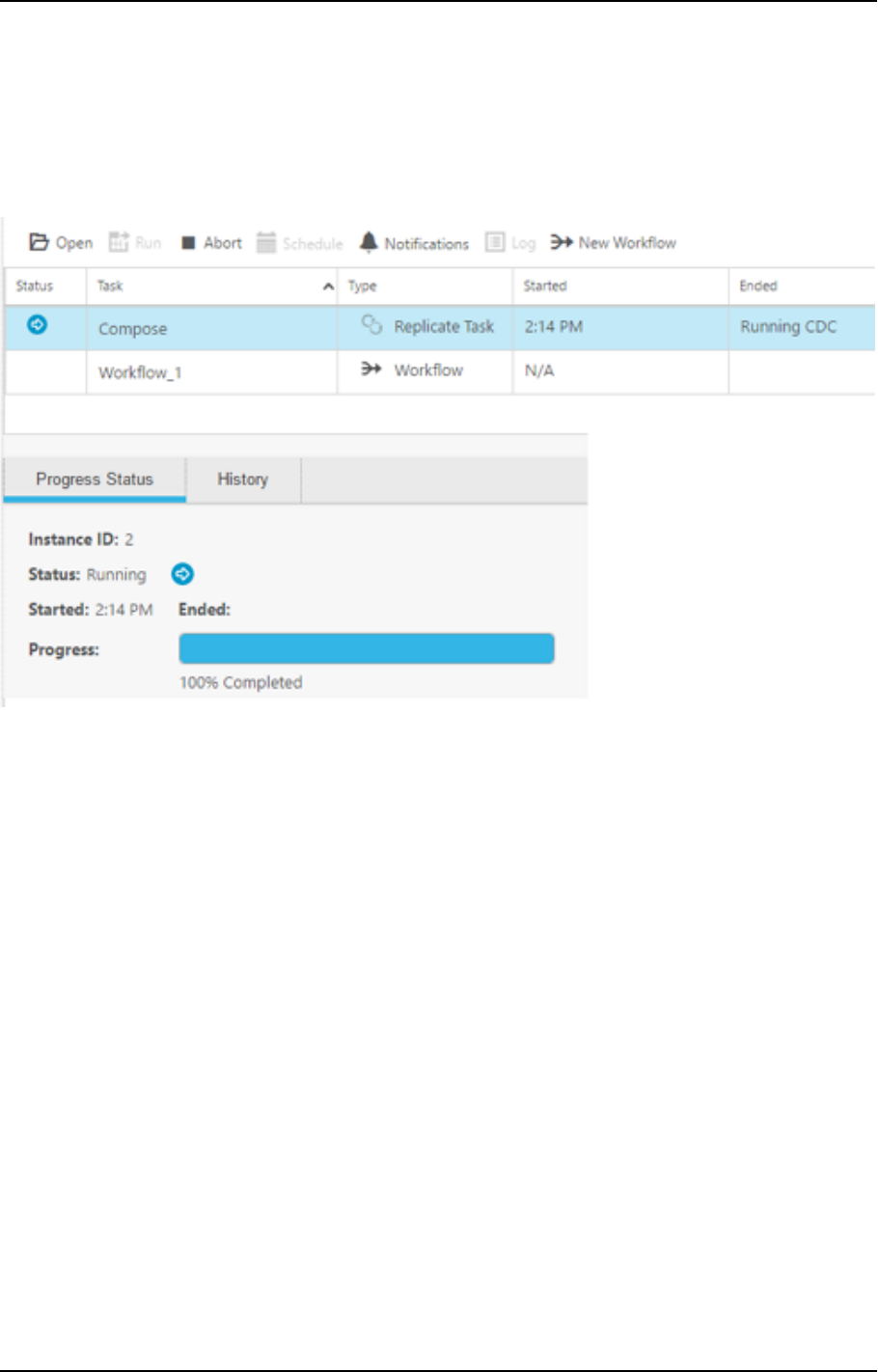

This name cannot be used in other columns.